This tutorial shows you how to manage Amazon DynamoDB with Boto3, the AWS SDK for Python. Boto3 simplifies working with AWS services such as DynamoDB. The guide covers everything from installing and configuring Boto3 to performing advanced DynamoDB operations, and it is aimed at both novice and experienced developers.

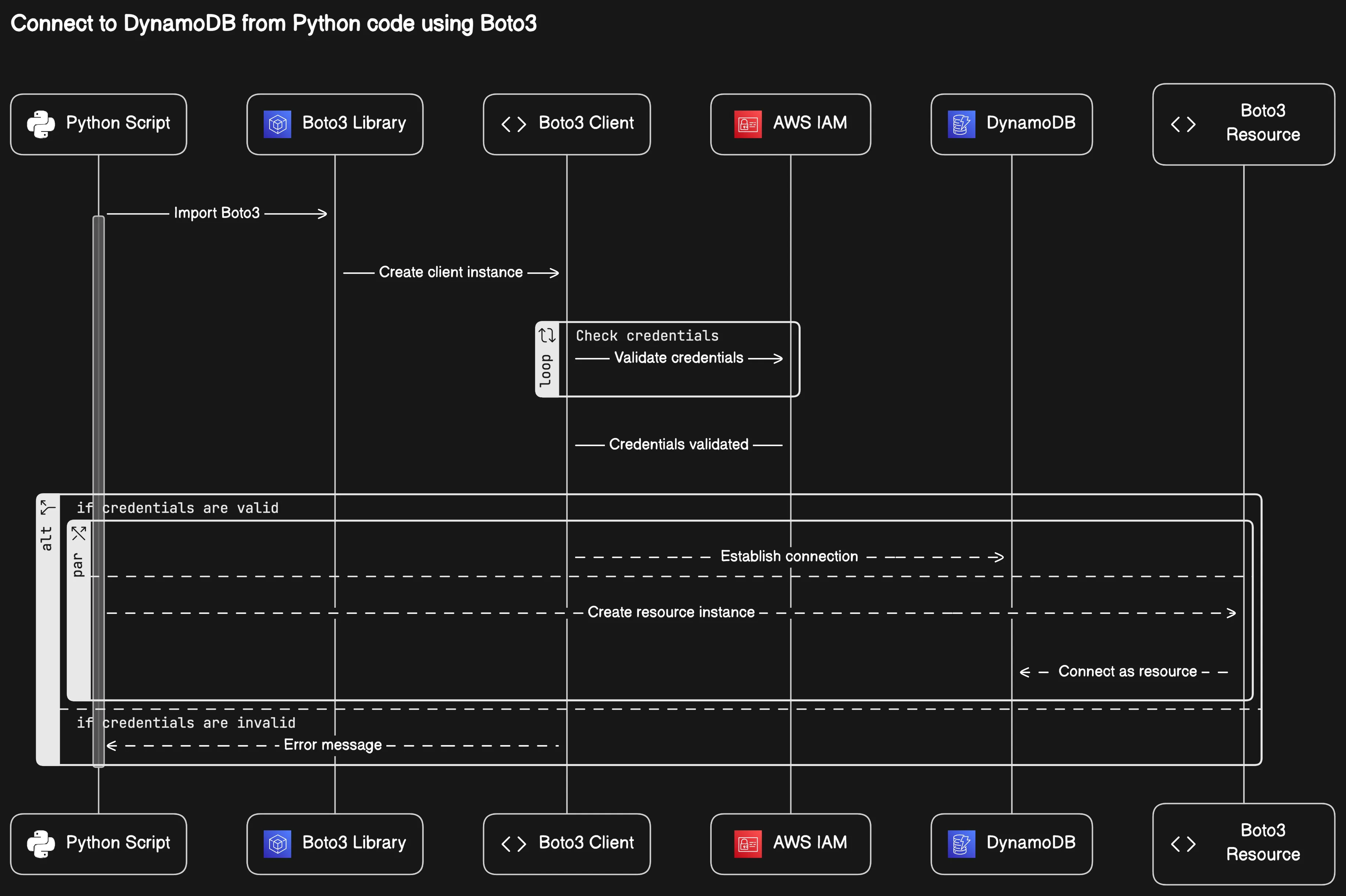

How To Connect To DynamoDB Using Python

To connect to Amazon DynamoDB from Python, you use the Boto3 library to create either a client or a resource object. The client is a low-level interface whose methods map directly to DynamoDB API operations, while the resource is a higher-level, object-oriented interface. Here’s how to create each:

```python
import boto3

# Create a DynamoDB client using boto3
client = boto3.client(
    'dynamodb',
    region_name='your-region'
)

# List tables using the client
response = client.list_tables()
print("Tables:", response['TableNames'])
```

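With the resource interface, you work with Table objects instead:

```python
import boto3

# Create a DynamoDB resource using boto3
resource = boto3.resource(
    'dynamodb',
    region_name='your-region'
)

# Get a Table object (this does not call AWS yet)
table = resource.Table('your-table-name')

# Reading table_status calls DescribeTable, which confirms the table exists and is reachable
print("Table status:", table.table_status)
```

Create Tables in DynamoDB using Boto3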

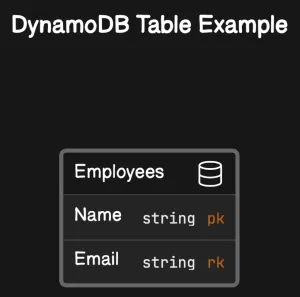

Let’s create a DynamoDB table named Employees.

This table will have a composite primary key consisting of:

- Name: a partition key (also known as the “hash key”) with AttributeType set to S for string.
- Email: a sort key (also known as the “range key”) with AttributeType set to S for string.

```python
import boto3

# Initialize a DynamoDB client using boto3
dynamodb_client = boto3.client(
    'dynamodb',
    region_name='us-east-1'
)

# Define the table creation parameters
table_creation_params = {
    'TableName': 'Employees',
    'KeySchema': [
        {'AttributeName': 'Name', 'KeyType': 'HASH'},    # Partition key
        {'AttributeName': 'Email', 'KeyType': 'RANGE'}   # Sort key
    ],
    'AttributeDefinitions': [
        {'AttributeName': 'Name', 'AttributeType': 'S'},   # String type
        {'AttributeName': 'Email', 'AttributeType': 'S'}   # String type
    ],
    'ProvisionedThroughput': {
        'ReadCapacityUnits': 1,
        'WriteCapacityUnits': 1
    }
}

# Create the DynamoDB table
response = dynamodb_client.create_table(**table_creation_params)

# Wait for the table to be created
waiter = dynamodb_client.get_waiter('table_exists')
waiter.wait(TableName='Employees')

print(f"Table '{response['TableDescription']['TableName']}' created successfully.")
```

The same table can be created with the resource interface. Run only one of the two snippets: creating a table that already exists fails with a ResourceInUseException.

```python
import boto3

# Initialize a DynamoDB resource using boto3
dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

# Create the DynamoDB table
table = dynamodb.create_table(
    TableName='Employees',
    KeySchema=[
        {'AttributeName': 'Name', 'KeyType': 'HASH'},    # Partition key
        {'AttributeName': 'Email', 'KeyType': 'RANGE'}   # Sort key
    ],
    AttributeDefinitions=[
        {'AttributeName': 'Name', 'AttributeType': 'S'},   # String type
        {'AttributeName': 'Email', 'AttributeType': 'S'}   # String type
    ],
    ProvisionedThroughput={
        'ReadCapacityUnits': 1,
        'WriteCapacityUnits': 1
    }
)

# Wait for the table to be created
waiter = table.meta.client.get_waiter('table_exists')
waiter.wait(TableName='Employees')

print(f"Table '{table.table_name}' created successfully.")
```

ProvisionedThroughput sets the table’s read and write capacity. One read capacity unit (RCU) covers one strongly consistent read per second (or two eventually consistent reads per second) of an item up to 4 KB, and one write capacity unit (WCU) covers one write per second of an item up to 1 KB.
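To make the capacity-unit arithmetic concrete, here is a small helper that estimates the units a steady workload needs. Reads are billed in 4 KB steps and writes in 1 KB steps, always rounded up. This is a simplified sketch: it assumes every item has the same size and ignores transactional requests (which cost twice as much), and the function names are our own.

```python
import math


def read_capacity_units(item_size_bytes, reads_per_second, strongly_consistent=True):
    """Estimate RCUs: each read is billed in 4 KB steps, rounded up."""
    units_per_read = math.ceil(item_size_bytes / 4096)
    total = units_per_read * reads_per_second
    # An eventually consistent read costs half as much as a strongly consistent one
    return total if strongly_consistent else math.ceil(total / 2)


def write_capacity_units(item_size_bytes, writes_per_second):
    """Estimate WCUs: each write is billed in 1 KB steps, rounded up."""
    return math.ceil(item_size_bytes / 1024) * writes_per_second


# 6 KB items read 10 times per second: ceil(6 / 4) = 2 units per read
print(read_capacity_units(6 * 1024, 10))          # 20
print(read_capacity_units(6 * 1024, 10, False))   # 10
# 2.5 KB items written 5 times per second: ceil(2.5) = 3 units per write
print(write_capacity_units(2560, 5))              # 15
```

For the tiny demo items in this tutorial, 1 RCU and 1 WCU are plenty; the helper matters once item sizes and request rates grow.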

List Tables using Boto3 DynamoDB

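To list all DynamoDB tables in a Region, you can use either the client or the resource. A single list_tables() call returns at most 100 table names, so the client example below keeps calling it with ExclusiveStartTableName until the response no longer contains LastEvaluatedTableName. The resource’s tables.all() collection does this paging for you.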

```python
import boto3

# Initialize a DynamoDB client
client = boto3.client(
    'dynamodb',
    region_name='your-region'
)

# Set the initial start table name to None
start_table_name = None

# Loop to handle the paging
while True:
    if start_table_name:
        # After the first page, continue from the last table name returned
        response = client.list_tables(
            ExclusiveStartTableName=start_table_name
        )
    else:
        # The first call to list_tables does not use ExclusiveStartTableName
        response = client.list_tables()

    # Print the table names
    for table_name in response.get('TableNames', []):
        print(table_name)

    # Check if there's more data to process
    start_table_name = response.get('LastEvaluatedTableName')
    if not start_table_name:
        break
```

```python
import boto3

# Initialize a DynamoDB resource
resource = boto3.resource(
    'dynamodb',
    region_name='your-region'
)

# List tables using the resource (the collection pages through results automatically)
tables = list(
    resource.tables.all()
)

for table in tables:
    print(table.name)
```

Adding items to a DynamoDB table using Boto3

To add a new item to a table, use the put_item() method. If an item with the same primary key already exists, put_item() replaces it entirely.

Here’s a step-by-step guide on how to add a single item to a DynamoDB table:

- Import the Boto3 library and set up the DynamoDB resource.

```python
import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='aws-region'
)
```

- Select your DynamoDB table by name.

```python
table = dynamodb.Table('your-table-name')
```

- Add an item to the table using the put_item method.

```python
response = table.put_item(
    Item={
        'primary_key': 'value',
        'attribute1': 'value1',
        'attribute2': 'value2',
        # ... additional attributes ...
    }
)
```

Replace 'primary_key', 'attribute1', 'attribute2', and their corresponding 'value' placeholders with your table’s primary key and item attributes.

- Handle the response to ensure the item was added successfully.

```python
if response['ResponseMetadata']['HTTPStatusCode'] == 200:
    print("Item added successfully!")
else:
    print("Error adding item.")
```

Note that Boto3 raises a botocore.exceptions.ClientError when the request fails, so in practice failures surface as exceptions rather than through the else branch.

When using put_item with the resource interface, you do not need to specify attribute types, as you would with the lower-level client interface. This makes the resource interface more user-friendly.

Let’s add an item with a Name (partition key) and an Email (sort key) to our table.

```python
import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

response = table.put_item(
    Item={
        'Name': 'Andrei Maksimov',
        'Email': 'info@hands-on.cloud'
    }
)

if response['ResponseMetadata']['HTTPStatusCode'] == 200:
    print("Item added successfully!")
else:
    print("Error adding item.")
```

Remember to handle exceptions and errors appropriately in your production code to ensure robustness and reliability when interacting with DynamoDB.

Following these steps, you can easily add items to your DynamoDB table using Python and Boto3, making your application’s data management efficient and scalable.

Batch operations in DynamoDB using Boto3

Batch operations in Amazon DynamoDB let you perform multiple read or write operations in a single API call, which can make your database interactions considerably more efficient. With the Boto3 resource interface, batch writes are handled by the Table object’s batch_writer() method; batch reads use the batch_get_item() method.

Using batch_writer()

The batch_writer() method simplifies batch writes. It returns a context manager that buffers your put and delete requests, sends them to DynamoDB in BatchWriteItem calls of up to 25 items, and automatically resends any items that DynamoDB reports as unprocessed. Here’s a basic example of how to use batch_writer():

```python
import boto3

# Create a DynamoDB resource
dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('YourTableName')

# Use the batch writer to write items
with table.batch_writer() as batch:
    batch.put_item(Item={'PrimaryKey': 'Value1', 'Attribute': 'Value2'})
    batch.delete_item(Key={'PrimaryKey': 'Value3'})
```

Let’s write several records to our demo table:

```python
import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

with table.batch_writer() as batch:
    batch.put_item(
        Item={
            "Name": "Luzze John",
            "Email": "john@hands-on.cloud",
            "Department": "IT",
            "Section": {
                "QA": "QA-1",
                "Reporting Line": "L1"
            }
        }
    )
    batch.put_item(
        Item={
            "Name": "Lugugo Joshua",
            "Email": "joshua@hands-on.cloud",
            "Department": "IT",
            "Section": {
                "Development": "SD-1",
                "Reporting Line": "L1"
            }
        }
    )
    batch.put_item(
        Item={
            "Name": "Robert Nsamba",
            "Email": "robert@hands-on.cloud",
            "Department": "IT",
            "Section": {
                "PM": "PM-1",
                "Reporting Line": "L1"
            }
        }
    )
```

Benefits of Batch Operations

- Reduced Network Calls: Grouping multiple write requests into one API call reduces the number of network round trips, which can improve performance.

- Automatic Retries: batch_writer() resends unprocessed items for you, making your code simpler and more robust.

- Error Handling: If you call the lower-level batch_write_item() directly, DynamoDB may return UnprocessedItems, which you must retry yourself. Use an exponential backoff algorithm to avoid throttling and increase the likelihood of success.

Limitations and Considerations

- Request Size: Each BatchWriteItem call can contain up to 25 put or delete requests and up to 16 MB of data; batch_writer() splits larger workloads into calls of this size for you.

- Throttling: If you exceed the provisioned throughput for a table, DynamoDB might throttle batch operations. Implementing exponential backoff is crucial for handling such scenarios.

Best Practices

- When you call batch_write_item() directly, always check the response for UnprocessedItems and implement a retry strategy.

- Monitor the consumed write capacity units to ensure you stay within your provisioned throughput limits.

Batch operations are a powerful DynamoDB feature when used correctly. They can significantly improve your application’s performance by reducing the number of network round trips to the database, although each item still consumes the same write capacity as an individual write. When using Boto3 with DynamoDB, make sure you understand how batch operations behave and implement them correctly to get their full benefit.

Reading data from a DynamoDB table using Boto3

Reading data from an Amazon DynamoDB table using the Boto3 library in Python is a common task when working with AWS services. The Boto3 library provides several methods to retrieve data, such as get_item, query, and scan. Below are the steps and examples to read data from a DynamoDB table.

Using get_item to Retrieve a Single Item

To read a single item from the table, use the get_item() method and pass the item’s full primary key: for our composite key, that means both the Name partition key and the Email sort key.

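```python
import json

import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

# boto3 dynamodb get_item: pass the full primary key
response = table.get_item(
    Key={
        'Name': 'Andrei Maksimov',
        'Email': 'info@hands-on.cloud'
    }
)

# 'Item' is missing from the response when no item matches the key
item = response.get('Item')
if item:
    print(json.dumps(item, indent=4))
else:
    print("Item not found.")
```

Using scan to Access All Items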

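To read all items in the table, use the scan() method. A scan reads every item in the table, so it is less efficient than a query, but it is useful when you need all items without key conditions. Each scan response contains at most 1 MB of data, so the example keeps scanning while the response includes LastEvaluatedKey.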

```python
import json

import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

# Scan the table
response = table.scan()
data = response['Items']

# Continue scanning if necessary
while 'LastEvaluatedKey' in response:
    response = table.scan(ExclusiveStartKey=response['LastEvaluatedKey'])
    data.extend(response['Items'])

for item in data:
    print(json.dumps(item, indent=4))
```

Filtering Results

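You can apply filters to both query and scan operations to narrow down the results based on specific attributes. DynamoDB applies a filter after it reads the items, so filtering reduces the data returned but not the read capacity consumed.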

```python
import json

import boto3
from boto3.dynamodb.conditions import Attr

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

# Scan with a filter expression
scan_kwargs = {
    'FilterExpression': Attr('Name').eq('Andrei Maksimov')
}
response = table.scan(**scan_kwargs)
filtered_items = response['Items']

# Continue scanning if necessary, reusing the same filter on every page
while 'LastEvaluatedKey' in response:
    response = table.scan(
        ExclusiveStartKey=response['LastEvaluatedKey'],
        **scan_kwargs
    )
    filtered_items.extend(response['Items'])

for item in filtered_items:
    print(json.dumps(item, indent=4))
```

When reading data from a DynamoDB table with Boto3, you must handle pagination, because a single Query or Scan request returns at most 1 MB of data. Pass the LastEvaluatedKey from each response as ExclusiveStartKey in the next request to retrieve the additional data, and repeat the other request parameters (such as the filter) on every page.
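The paging loop can be wrapped in a reusable generator that yields items lazily and sends the same keyword arguments (filters, key conditions, projections) with every page. A minimal sketch; iterate_items is a hypothetical helper name, and it works with any callable that behaves like table.scan or table.query:

```python
def iterate_items(operation, **kwargs):
    """Yield every item from a paginated table.scan or table.query call.

    The same keyword arguments are sent with every page; only ExclusiveStartKey changes.
    """
    while True:
        response = operation(**kwargs)
        yield from response.get('Items', [])
        last_key = response.get('LastEvaluatedKey')
        if last_key is None:
            return
        kwargs['ExclusiveStartKey'] = last_key


# Example usage (requires AWS credentials):
# from boto3.dynamodb.conditions import Attr
# table = boto3.resource('dynamodb', region_name='us-east-1').Table('Employees')
# for item in iterate_items(table.scan, FilterExpression=Attr('Department').eq('IT')):
#     print(item)
```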

For more detailed examples and best practices, refer to the official Boto3 documentation.

Remember to handle exceptions and errors gracefully to ensure a robust application. Use the try and except blocks to catch exceptions from the AWS service and take appropriate action.

Following these guidelines, you can effectively read data from a DynamoDB table using Python and Boto3, ensuring your application can retrieve the necessary information from your AWS database.
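One detail to watch when printing items with json.dumps, as the examples above do: the resource interface returns DynamoDB numbers as decimal.Decimal, which json.dumps cannot serialize by default. Our Employees items contain only strings, so the examples work as written. For tables with numeric attributes, a default= hook is one option; the helper name and the hypothetical Age and Rating attributes below are our own, and converting to float can lose precision for very large or very precise values.

```python
import json
from decimal import Decimal


def decimal_default(value):
    """json.dumps hook: turn a whole-number Decimal into int, any other Decimal into float."""
    if isinstance(value, Decimal):
        return int(value) if value == value.to_integral_value() else float(value)
    raise TypeError(f"Object of type {type(value).__name__} is not JSON serializable")


# Hypothetical item with numeric attributes, shaped like a resource-interface result
item = {'Name': 'Luzze John', 'Age': Decimal('31'), 'Rating': Decimal('4.5')}
print(json.dumps(item, default=decimal_default))
# {"Name": "Luzze John", "Age": 31, "Rating": 4.5}
```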

Updating items in a DynamoDB table using Boto3

Updating an item in a DynamoDB table using the Boto3 library in Python involves specifying the key of the item you want to update and providing an update expression that defines the changes to apply. Here’s a step-by-step guide to updating items:

- Import Boto3 and Create a DynamoDB Resource: Start by importing the Boto3 library and creating a DynamoDB resource to interact with your table.

```python
import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='aws-region'
)
table = dynamodb.Table('YourTableName')
```

- Specify the Key of the Item to Update: Identify the item you want to update by specifying its full primary key.

```python
key = {'PrimaryKey': 'Value'}
```

- Define an Update Expression: Create an update expression that specifies the attributes to update and their new values.

```python
update_expression = 'SET #attr1 = :val1, #attr2 = :val2'
```

- Provide Expression Attribute Names and Values: Define dictionaries for expression attribute names and values. The names (#...) avoid conflicts with reserved words, and the values (:...) supply the actual data for the update.

```python
expression_attribute_names = {'#attr1': 'AttributeName1', '#attr2': 'AttributeName2'}
expression_attribute_values = {':val1': 'NewValue1', ':val2': 'NewValue2'}
```

- Perform the Update: Use the update_item method to apply the update to the item.

```python
response = table.update_item(
    Key=key,
    UpdateExpression=update_expression,
    ExpressionAttributeNames=expression_attribute_names,
    ExpressionAttributeValues=expression_attribute_values
)
```

- Handle the Response: The response from the update_item method contains information about the update operation. By default it does not include the item’s attributes; pass ReturnValues='UPDATED_NEW' or ReturnValues='ALL_NEW' if you need them.

Note: It’s important to handle potential exceptions that may occur during the update process, such as ConditionalCheckFailedException if a condition specified in the update is not met.

For more detailed examples and documentation, refer to the Boto3 DynamoDB update_item method in the AWS SDK for Python (Boto3) API Reference.

Following these steps, you can effectively update items in your DynamoDB table using Python and Boto3. Always test your update expressions and handle exceptions appropriately to ensure a smooth operation.

Let’s take a look at a more specific example of modifying a record in our table:

```python
import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

# Update the nested Section.QA attribute of an existing item
response = table.update_item(
    Key={'Name': 'Luzze John', 'Email': 'john@hands-on.cloud'},
    ExpressionAttributeNames={
        "#section": "Section",
        "#qa": "QA",
    },
    ExpressionAttributeValues={
        ':id': 'QA-2'
    },
    UpdateExpression="SET #section.#qa = :id",
    ReturnValues="UPDATED_NEW"  # return only the attributes that changed
)

print(response['Attributes'])
```

Deleting items from a DynamoDB table using Boto3

When working with AWS DynamoDB, there may come a time when you need to delete items from your table. This can be accomplished with the delete_item() method. Below is a guide on how to use this method effectively.

Prerequisites

Before attempting to delete an item, ensure that you have the necessary permissions (dynamodb:DeleteItem) and that you have correctly configured your Boto3 client or resource.

Using delete_item()

To delete an item, specify the primary key of the item you wish to remove. Here’s a simple example:

```python
import boto3

# Initialize a Boto3 DynamoDB resource
dynamodb = boto3.resource(
    'dynamodb',
    region_name='aws-region'
)

# Reference the table
table = dynamodb.Table('YourTableName')

# Delete the item with the specified primary key
response = table.delete_item(
    Key={
        'PrimaryKeyName': 'PrimaryKeyValue'
    }
)
```

Let’s take a look at a specific example:

```python
import json

import boto3

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

response = table.delete_item(
    Key={
        'Name': 'Peter Matovu',
        'Email': 'petermatovu@hands-on.cloud'
    }
)

print(json.dumps(response, indent=4))
```

Conditional Deletes

You can also perform conditional deletes, where the item is deleted only if it meets certain conditions:

```python
response = table.delete_item(
    Key={
        'PrimaryKeyName': 'PrimaryKeyValue'
    },
    ConditionExpression="attribute_exists(AttributeName)"
)
```

Error Handling

Always include error handling to manage potential exceptions that may arise during the delete operation:

```python
from botocore.exceptions import ClientError

try:
    # Attempt to delete the item, but only if it exists
    response = table.delete_item(
        Key={
            'PrimaryKeyName': 'PrimaryKeyValue'
        },
        ConditionExpression="attribute_exists(PrimaryKeyName)"
    )
except ClientError as error:
    # Handle specific error codes
    if error.response['Error']['Code'] == "ConditionalCheckFailedException":
        print("Item does not exist or condition not met.")
    else:
        raise
```

Deleting items from a DynamoDB table using Boto3 is straightforward, provided you have the correct permissions and know the primary key of the item you wish to delete. Always handle errors gracefully to ensure a robust application.

For more detailed information, refer to the official Boto3 documentation on the delete_item() method.

Querying a DynamoDB table using Boto3

Querying a DynamoDB table with Boto3 involves the query() method of the Boto3 DynamoDB resource or client. A query retrieves the items that share a given partition key value, optionally narrowed by a condition on the sort key.

Here’s a step-by-step guide to querying a DynamoDB table using Boto3:

- Import Boto3 and Establish a Connection

Begin by importing the Boto3 library (and the Key helper used to build conditions) and establishing a connection to DynamoDB.

```python
import boto3
from boto3.dynamodb.conditions import Key

dynamodb = boto3.resource(
    'dynamodb',
    region_name='aws-region'
)
```

- Reference Your Table

Reference the table you wish to query.

```python
table = dynamodb.Table('YourTableName')
```

- Use the query() Method

Use the query() method to perform the query operation. You’ll need to specify the KeyConditionExpression to provide the criteria for the query.

– Replace 'yourPartitionKey' with the name of your partition key.

– Replace 'value' with the value you want to match.

```python
response = table.query(
    KeyConditionExpression=Key('yourPartitionKey').eq('value')
)
items = response['Items']
```

- Handle the Response

The response from the query() method includes a list of items that match the query criteria. You can iterate over this list to process your results.

```python
for item in items:
    print(item)
```

- Consider Using Secondary Indexes

If you need to query on attributes that are not part of the primary key, you may need to use a secondary index.

- Paginate Results

A single query returns at most 1 MB of data. If the response contains LastEvaluatedKey, pass it as ExclusiveStartKey in a follow-up query to retrieve the remaining results.

- Error Handling

Implement error handling to catch and respond to any issues arising during the query operation.

Let’s take a look at a specific example:

```python
import json

import boto3
from boto3.dynamodb.conditions import Key

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

# boto3 ddb query
response = table.query(
    KeyConditionExpression=Key('Name').eq('Luzze John')
)

print("The query returned the following items:")
for item in response['Items']:
    print(json.dumps(item, indent=4))
```

Best Practices

- Use KeyConditionExpression to select items by their primary key attributes, and a FilterExpression to narrow results on other attributes.

- Utilize secondary indexes for querying on non-primary key attributes.

- Be mindful of read capacity units (RCUs) when querying to manage costs and performance.

Always remember to secure your AWS credentials and follow security best practices when interacting with AWS services programmatically.

Using key condition expressions in DynamoDB queries with Boto3

Key condition expressions are a fundamental aspect of querying data in Amazon DynamoDB when using the Boto3 library in Python. They allow you to specify the key values you want to match in your query, providing a powerful way to retrieve only the items that meet certain criteria.

Understanding Key Condition Expressions

When querying a DynamoDB table, you use the KeyConditionExpression to define the query criteria based on the table’s primary key attributes. This expression can include comparison operators such as =, <, <=, >, >=, BETWEEN, and begins_with. The partition key condition must use equality (=); the other operators can be applied only to the sort key.

Here’s a basic example of how to use a key condition expression in a query:

```python
import boto3
from boto3.dynamodb.conditions import Key

# Initialize a DynamoDB resource using Boto3
dynamodb = boto3.resource(
    'dynamodb',
    region_name='your-region'
)

# Reference the desired DynamoDB table
table = dynamodb.Table('YourTableName')

# Query the table using a key condition expression
response = table.query(
    KeyConditionExpression=Key('PartitionKeyName').eq('PartitionKeyValue')
    & Key('SortKeyName').begins_with('SortKeyPrefix')
)

# Access the matched items
items = response['Items']
```

For our specific Employees table:

```python
import json

import boto3
from boto3.dynamodb.conditions import Key

# Initialize a DynamoDB resource using Boto3
dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

# Reference the desired DynamoDB table
table = dynamodb.Table('Employees')

# Query the table using a key condition expression
response = table.query(
    KeyConditionExpression=Key('Name').eq('Andrei Maksimov') & Key('Email').begins_with('info')
)

# Access the matched items
items = response['Items']
for item in items:
    print(json.dumps(item, indent=4))
```

Best Practices for Key Condition Expressions

- Use the Key object: The Key object from boto3.dynamodb.conditions helps you construct expressions easily and correctly.

- Combine conditions: Key condition expressions support only AND, so you combine the partition key condition with a sort key condition using the & operator.

- Be efficient: Only query the data you need by being specific with your key condition expressions to minimize read throughput and improve performance.

Advanced Usage

For more complex queries, you can use other functions provided by the Key object, such as between and begins_with, to refine your search criteria. Additionally, you can pass conditions built with the Attr object as a FilterExpression to filter query results on non-key attributes.

When the table’s key schema includes a sort key, query results are always returned in sort key order: ascending by default, or descending if you pass ScanIndexForward=False. You can use this to retrieve data in a particular order without additional sorting.

For more detailed information on using expressions in DynamoDB, refer to the official AWS documentation on expressions.

By mastering key condition expressions, you can effectively utilize Boto3 to interact with DynamoDB and harness the full potential of your database queries.

Projecting DynamoDB queries to return a subset of data with Boto3

When working with Amazon DynamoDB, you may often need to retrieve only a specific subset of attributes from your items rather than fetching the entire data set. This is where projection expressions come into play, especially when using the AWS SDK for Python Boto3.

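Projection expressions let you define which attributes to return for each item in your query or scan results. This reduces the amount of data transferred over the network and can speed up responses. Note, however, that DynamoDB still calculates consumed read capacity from the full size of the items it reads, so a projection does not lower read-throughput costs.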

Here’s how you can use projection expressions in your Boto3 DynamoDB queries:

```python
import boto3

# Initialize a Boto3 DynamoDB client
dynamodb = boto3.client(
    'dynamodb',
    region_name='aws-region'
)

# Define the projection expression (the attributes you want to get)
projection = "Attribute1, Attribute2, Attribute3"

# Perform a query with the projection expression
response = dynamodb.query(
    TableName='YourTableName',
    ProjectionExpression=projection,
    KeyConditionExpression='PartitionKeyName = :pk',
    # The client requires typed attribute values, such as {'S': ...} for strings
    ExpressionAttributeValues={':pk': {'S': 'PartitionKeyValue'}}
)

# Access the projected attributes from the response
items = response['Items']
for item in items:
    # Each item contains only the projected attributes
    print(item)
```

In our table, the attribute “Name” is a DynamoDB reserved word, so we need to reference it through an ExpressionAttributeNames placeholder:

```python
import json

import boto3

# Initialize a Boto3 DynamoDB client
dynamodb = boto3.client(
    'dynamodb',
    region_name='us-east-1'
)

# Define the projection expression (the attributes you want to get)
projection = "#nm, Email"

# Perform a query with the projection expression and expression attribute names
response = dynamodb.query(
    TableName='Employees',
    ProjectionExpression=projection,
    KeyConditionExpression='#nm = :name',
    ExpressionAttributeNames={
        '#nm': 'Name'
    },
    ExpressionAttributeValues={
        ':name': {'S': 'Andrei Maksimov'}
    }
)

# Access the projected attributes from the response
items = response['Items']
for item in items:
    # Each item contains only the projected attributes
    print(json.dumps(item, indent=4))
```

Key Points to Remember

- Projection expressions specify the attributes you want to return in your query results.

- You can use aliases for attribute names if they conflict with DynamoDB-reserved words.

- When querying a Global Secondary Index (GSI), you can only project attributes already part of the index.

- When you query a Local Secondary Index (LSI) and request attributes that are not projected into the index, DynamoDB fetches them from the base table automatically, which consumes additional read capacity.

Benefits of Using Projection Expressions

- Reduced Data Transfer: Only the specified attributes are retrieved, which can lower the amount of data transferred.

- Read Capacity: Projection does not reduce consumed read capacity, because DynamoDB calculates it from the full item size rather than from the attributes returned.

- Improved Performance: Less data to transfer and deserialize can result in faster response times.

For more detailed examples and best practices, refer to the Boto3 Documentation on DynamoDB.

By using projection expressions strategically in your Boto3 queries, you can keep responses small and reduce network transfer in your DynamoDB interactions.

Running PartiQL statements on a DynamoDB table using Boto3

PartiQL is a SQL-compatible query language that enables developers to interact with Amazon DynamoDB using familiar SQL syntax. This is particularly useful for those who are already comfortable with SQL and wish to apply similar patterns when working with DynamoDB. Boto3, the AWS SDK for Python, supports executing PartiQL statements, allowing for seamless integration within Python applications.

Using PartiQL with Boto3

To run PartiQL statements on a DynamoDB table using Boto3, you would typically follow these steps:

- Set up your Boto3 client:

```python
import boto3

dynamodb = boto3.client(
    'dynamodb',
    region_name='aws-region'
)
```

- Execute a PartiQL statement:

You can execute a PartiQL statement using the execute_statement method provided by the Boto3 DynamoDB client.

```python
response = dynamodb.execute_statement(
    Statement='YOUR_PARTIQL_STATEMENT'
)
```

Replace YOUR_PARTIQL_STATEMENT with your actual PartiQL query, such as SELECT * FROM YourTableName WHERE YourCondition.

- Handle the response:

The response from the execute_statement method will contain the results of your PartiQL query, which you can process as needed within your application.

Let’s query one of the records from our demo table:

```python
import json

import boto3

# Initialize a Boto3 DynamoDB client
dynamodb = boto3.client(
    'dynamodb',
    region_name='us-east-1'
)

response = dynamodb.execute_statement(
    Statement="SELECT * FROM Employees WHERE Name='Andrei Maksimov'"
)

# Access the returned items (in the client's typed attribute-value format)
items = response['Items']
for item in items:
    print(json.dumps(item, indent=4))
```

PartiQL Considerations

- Pagination: execute_statement returns a NextToken when more results are available. Pass it back in the next call to retrieve the additional results.

- Parameters: Use ? placeholders in the statement and supply the values through the Parameters argument instead of concatenating them into the statement string; this prevents injection attacks.

- Error Handling: Always include error handling to manage potential exceptions arising from invalid statements or issues with the DynamoDB service.

For detailed examples and further reading on running PartiQL statements using Boto3, please refer to the official AWS documentation.

PartiQL Best Practices

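- Use PartiQL when SQL syntax makes your code easier to read or maintain. It does not add query capabilities such as joins across tables, so choose it for familiarity rather than extra query power.

- Ensure that your PartiQL statements are optimized for performance: a SELECT whose WHERE clause does not specify the partition key with an equality (or IN) condition is executed as a full table scan, which can consume far more read capacity than necessary.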

- Regularly review AWS updates on PartiQL and Boto3, as the services are frequently updated with new features and improvements.

Conditional Operations in DynamoDB using Boto3

Conditional operations in Amazon DynamoDB allow you to specify conditions that must be met for the operation to succeed. This is particularly useful to ensure data integrity and avoid unintentionally overwriting items. Using the Boto3 library in Python, you can leverage conditional expressions in your DynamoDB operations.

Here’s how you can perform conditional operations with Boto3:

Conditional PutItem Operation

To add a new item only if no item with the same primary key already exists, use a conditional PutItem operation with the attribute_not_exists() function.

```python
import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.resource('dynamodb', region_name='aws-region')
table = dynamodb.Table('YourTableName')

try:
    response = table.put_item(
        Item={
            'PrimaryKey': 'value',
            'Attribute': 'data'
        },
        ConditionExpression='attribute_not_exists(PrimaryKey)'
    )
except ClientError as e:
    if e.response['Error']['Code'] == 'ConditionalCheckFailedException':
        print("Item already exists.")
    else:
        raise
```

For our demo table:

```python
import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

try:
    response = table.put_item(
        Item={
            'Name': 'Andrei Maksimov',
            'Email': 'info@hands-on.cloud'
        },
        # Name is a reserved word, so it is referenced through the #nm placeholder
        ConditionExpression='attribute_not_exists(#nm)',
        ExpressionAttributeNames={
            '#nm': 'Name'
        }
    )
    print(response)
except ClientError as e:
    if e.response['Error']['Code'] == 'ConditionalCheckFailedException':
        print("Item already exists.")
    else:
        raise
```

Conditional UpdateItem Operation

To update an item only if it meets certain conditions, you can use a condition expression with the UpdateItem operation.

```python
response = table.update_item(
    Key={
        'PrimaryKey': 'value'
    },
    UpdateExpression='SET #attr = :val',
    ConditionExpression='#attr = :oldval',
    ExpressionAttributeNames={
        '#attr': 'AttributeName'
    },
    ExpressionAttributeValues={
        ':val': 'newValue',
        ':oldval': 'oldValue'
    }
)
```

Let’s update the department for John and transition him to the Legal department only if he is in the IT department:

```python
import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

try:
    response = table.update_item(
        Key={
            'Name': 'Luzze John',
            'Email': 'john@hands-on.cloud'
        },
        UpdateExpression='SET #dep = :newDep',
        ConditionExpression='#dep = :oldDep',
        ExpressionAttributeNames={
            '#dep': 'Department'
        },
        ExpressionAttributeValues={
            ':newDep': 'Legal',
            ':oldDep': 'IT'
        }
    )
    print(response)
except ClientError as e:
    if e.response['Error']['Code'] == 'ConditionalCheckFailedException':
        print("Condition check failed, item not updated.")
    else:
        print(f"Error updating item: {e}")
```

Conditional DeleteItem Operation

Similarly, you can ensure that an item is deleted only when it meets certain criteria by using a condition expression in a DeleteItem operation.

```python
response = table.delete_item(
    Key={
        'PrimaryKey': 'value'
    },
    ConditionExpression='attribute_exists(PrimaryKey) AND #attr = :val',
    ExpressionAttributeNames={
        '#attr': 'AttributeName'
    },
    ExpressionAttributeValues={
        ':val': 'valueToMatch'
    }
)
```

Let’s delete John from our table, but only if he is now in the Legal department:

```python
import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.resource(
    'dynamodb',
    region_name='us-east-1'
)

table = dynamodb.Table('Employees')

try:
    response = table.delete_item(
        Key={
            'Name': 'Luzze John',
            'Email': 'john@hands-on.cloud'
        },
        ConditionExpression='attribute_exists(#nm) AND #dep = :depVal',
        ExpressionAttributeNames={
            '#nm': 'Name',
            '#dep': 'Department'
        },
        ExpressionAttributeValues={
            ':depVal': 'Legal'
        }
    )
    print(response)
except ClientError as e:
    if e.response['Error']['Code'] == 'ConditionalCheckFailedException':
        print("Condition check failed, item not deleted.")
    else:
        print(f"Error deleting item: {e}")
```

Using conditional expressions can help prevent data loss and ensure that your DynamoDB operations are performed as intended. For more detailed information on conditional expressions and their syntax, refer to the Amazon DynamoDB Developer Guide.

Remember to handle exceptions such as ConditionalCheckFailedException to manage cases where the conditions are not met.

By incorporating these conditional operations into your DynamoDB interactions, you can build more robust and reliable applications with Python and Boto3.
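The conditional-update pattern used in these examples can be wrapped in a small helper. The sketch below is illustrative and not part of Boto3: `update_if` and `error_code` are hypothetical names, and `table` is assumed to be a Boto3 `Table` resource (any object with a compatible `update_item` method works). It reads the error code from the exception’s `response` attribute, which is where botocore’s `ClientError` stores it, returns `False` only for `ConditionalCheckFailedException`, and re-raises every other error.

```python
from typing import Any, Dict


def error_code(err: Exception) -> str:
    """Return the AWS error code carried by a botocore ClientError, or '' otherwise."""
    response = getattr(err, "response", None) or {}
    return response.get("Error", {}).get("Code", "")


def update_if(table: Any, key: Dict[str, Any], attribute: str,
              new_value: Any, expected_value: Any) -> bool:
    """Set `attribute` to `new_value` only if it currently equals `expected_value`.

    Returns True when the update was applied and False when the condition failed.
    Any other error is re-raised so it is not silently swallowed.
    """
    try:
        table.update_item(
            Key=key,
            UpdateExpression="SET #attr = :new",
            ConditionExpression="#attr = :expected",
            # Placeholders keep attribute names and values out of the expression text
            ExpressionAttributeNames={"#attr": attribute},
            ExpressionAttributeValues={":new": new_value, ":expected": expected_value},
        )
        return True
    except Exception as err:
        if error_code(err) == "ConditionalCheckFailedException":
            return False
        raise
```

For example, `update_if(table, {'Name': 'Luzze John', 'Email': 'john@hands-on.cloud'}, 'Department', 'Legal', 'IT')` performs the same conditional update as the John example and returns `False` if he is no longer in IT.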

Global Secondary Index

A Global Secondary Index allows you to query on attributes that are not part of the main table’s primary key. This helps you avoid the slowness and inefficiency of a full table scan operation.

In addition, the global secondary index will contain attributes from the main table but will be organized by a primary key of its own, enabling faster queries.

To learn more about Global Secondary Indexes, see the Global Secondary Indexes section of the Amazon DynamoDB Developer Guide.

Creating a Global Secondary Index using Boto3

In the following example, I will add a global secondary index named CapacityBuildingIndex, with the CapacityBuildingLevel attribute as its partition key, so that you can look up all employees who have achieved a specific capacity-building level.

```python
import boto3

AWS_REGION = 'us-east-1'

client = boto3.client('dynamodb', region_name=AWS_REGION)

try:
    resp = client.update_table(
        TableName="Employees",
        # Declare only the attributes used in a key schema (here, the index key)
        AttributeDefinitions=[
            {
                "AttributeName": "CapacityBuildingLevel",
                "AttributeType": "S"
            },
        ],
        GlobalSecondaryIndexUpdates=[
            {
                "Create": {
                    "IndexName": "CapacityBuildingIndex",
                    "KeySchema": [
                        {
                            "AttributeName": "CapacityBuildingLevel",
                            "KeyType": "HASH"
                        }
                    ],
                    # Copy all table attributes into the index
                    "Projection": {
                        "ProjectionType": "ALL"
                    },
                    # Needed for provisioned-mode tables; omit for on-demand tables
                    "ProvisionedThroughput": {
                        "ReadCapacityUnits": 1,
                        "WriteCapacityUnits": 1,
                    }
                }
            }
        ],
    )
    print("Secondary index added!")
except Exception as e:
    print("Error updating table:")
    print(e)
```
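If your Employees table uses on-demand capacity (PAY_PER_REQUEST), the index definition should not include ProvisionedThroughput. A minimal sketch of a hypothetical helper, `gsi_create_update`, that builds the `update_table` keyword arguments for either billing mode: pass capacity units for a provisioned table and leave them out for an on-demand one.

```python
from typing import Any, Dict, Optional


def gsi_create_update(index_name: str, hash_key: str, attribute_type: str = "S",
                      read_units: Optional[int] = None,
                      write_units: Optional[int] = None) -> Dict[str, Any]:
    """Build keyword arguments for client.update_table() that add a single-key GSI.

    Supply both read_units and write_units for provisioned-mode tables; supply
    neither for on-demand tables, and ProvisionedThroughput is left out.
    """
    if (read_units is None) != (write_units is None):
        raise ValueError("pass both read_units and write_units, or neither")
    create: Dict[str, Any] = {
        "IndexName": index_name,
        "KeySchema": [{"AttributeName": hash_key, "KeyType": "HASH"}],
        "Projection": {"ProjectionType": "ALL"},
    }
    if read_units is not None:
        create["ProvisionedThroughput"] = {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        }
    return {
        "AttributeDefinitions": [
            {"AttributeName": hash_key, "AttributeType": attribute_type}
        ],
        "GlobalSecondaryIndexUpdates": [{"Create": create}],
    }
```

Usage would look like `client.update_table(TableName="Employees", **gsi_create_update("CapacityBuildingIndex", "CapacityBuildingLevel", read_units=1, write_units=1))`.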

Querying a Global Secondary Index

Next, you can use the new secondary index to retrieve all employees whose CapacityBuildingLevel is set to Level 4.

```python
import time

import boto3
from boto3.dynamodb.conditions import Key

AWS_REGION = 'us-east-1'

dynamodb = boto3.resource('dynamodb', region_name=AWS_REGION)
table = dynamodb.Table('Employees')

# Wait until the new index has finished backfilling.
# This assumes CapacityBuildingIndex is the table's only GSI.
while True:
    if not table.global_secondary_indexes or \
            table.global_secondary_indexes[0]['IndexStatus'] != 'ACTIVE':
        print('Waiting for index to backfill...')
        time.sleep(5)
        table.reload()  # refresh the cached table description
    else:
        break

# boto3 dynamo query against the index instead of the table's primary key
resp = table.query(
    IndexName="CapacityBuildingIndex",
    KeyConditionExpression=Key('CapacityBuildingLevel').eq('Level 4'),
)

print("The query returned the following items:")
for item in resp['Items']:
    print(item)
```
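The query above reads only the first page of results: a single Query call returns at most 1 MB of data, and when more matches remain, the response includes LastEvaluatedKey, which you pass back as ExclusiveStartKey. A minimal sketch of a hypothetical generator, `query_all`, that follows those pages; `table` is assumed to be a Boto3 `Table` resource.

```python
from typing import Any, Dict, Iterator


def query_all(table: Any, **query_kwargs: Any) -> Iterator[Dict[str, Any]]:
    """Yield every item matched by table.query(), following LastEvaluatedKey pages."""
    kwargs = dict(query_kwargs)
    while True:
        page = table.query(**kwargs)
        yield from page.get("Items", [])
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            break  # no more pages
        # Resume the next request right after the last item we received
        kwargs["ExclusiveStartKey"] = last_key
```

With the index from this section, `for item in query_all(table, IndexName="CapacityBuildingIndex", KeyConditionExpression=Key('CapacityBuildingLevel').eq('Level 4')): print(item)` prints every matching employee, however many pages that takes.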

Back Up a DynamoDB Table using Boto3

To create an on-demand backup of a DynamoDB table using Boto3, use the create_backup() method and pass the source table name (TableName) and a name for the new backup (BackupName).

```python
import boto3

client = boto3.client('dynamodb')

response = client.create_backup(
    TableName='Employees',
    BackupName='Employees-Backup-01'
)

print(response)
```

For more information about automating the DynamoDB backup and restore procedure with the Boto3 library, consider the following article:

Deleting a DynamoDB table using Boto3

When working with AWS DynamoDB, there may come a time when you need to delete a table. This can be done programmatically using the Boto3 library in Python. Below is a step-by-step guide on how to delete a DynamoDB table using Boto3:

- Import Boto3: First, ensure that you have Boto3 installed and import it into your Python script: `import boto3`

- Create a DynamoDB Client: Use Boto3 to create a DynamoDB client, which will allow you to interact with the database: `dynamodb = boto3.client('dynamodb')`

- Delete the Table: Call the `delete_table` method on the client, passing in the name of the table you wish to delete: `response = dynamodb.delete_table(TableName='YourTableName')`

- Handle the Response: The response from the `delete_table` method will contain information about the deleted table. You can print this response or handle it as needed.

- Wait for Deletion: Optionally, you can wait for the table to be deleted by using a waiter: `waiter = dynamodb.get_waiter('table_not_exists')` followed by `waiter.wait(TableName='YourTableName')`

Note: When you delete a table, all of its items and any associated indexes are also deleted. If DynamoDB Streams is enabled on the table, the corresponding stream is disabled and automatically deleted after 24 hours.

```python
import boto3

dynamodb = boto3.client(
    'dynamodb',
    region_name='us-east-1'
)

try:
    table_name = "Employees"
    resp = dynamodb.delete_table(
        TableName=table_name,
    )
    # Block until DynamoDB reports that the table no longer exists
    waiter = dynamodb.get_waiter('table_not_exists')
    waiter.wait(TableName=table_name)
    print("Table deleted successfully!")
except Exception as e:
    print("Error deleting table:")
    print(e)
```

Best Practices

- Always back up your data before deleting a table, as this action is irreversible.

- Ensure that you have the necessary permissions (`dynamodb:DeleteTable`) to delete the table.

- Consider the impact of deleting a table on your application and downstream services.
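The first practice can be enforced in code. A minimal sketch of a hypothetical helper, `backup_then_delete`, assuming `client` is a Boto3 DynamoDB client: it calls `create_backup`, polls `describe_backup` until the backup status is AVAILABLE, and only then calls `delete_table` and waits with the `table_not_exists` waiter. The `sleep` parameter is an illustrative hook so the polling can be tested without waiting.

```python
import time
from typing import Any, Callable


def backup_then_delete(client: Any, table_name: str, backup_name: str,
                       poll_seconds: float = 5.0, max_polls: int = 120,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Back up a table, wait for the backup to be AVAILABLE, then delete the table.

    Returns the backup ARN. If the backup never becomes available, raises
    RuntimeError and leaves the table untouched.
    """
    details = client.create_backup(TableName=table_name, BackupName=backup_name)["BackupDetails"]
    backup_arn = details["BackupArn"]
    status = details["BackupStatus"]
    polls = 0
    while status != "AVAILABLE":
        if status == "DELETED" or polls >= max_polls:
            raise RuntimeError(
                f"backup {backup_arn} is {status}; table {table_name} was not deleted")
        sleep(poll_seconds)
        polls += 1
        description = client.describe_backup(BackupArn=backup_arn)["BackupDescription"]
        status = description["BackupDetails"]["BackupStatus"]
    # Only reached once the backup is usable for a restore
    client.delete_table(TableName=table_name)
    client.get_waiter("table_not_exists").wait(TableName=table_name)
    return backup_arn
```

Calling `backup_then_delete(boto3.client('dynamodb', region_name='us-east-1'), 'Employees', 'Employees-Backup-01')` would replace the separate backup and delete steps shown earlier.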

For more detailed information and examples, refer to the Boto3 documentation.

Remember to follow these steps carefully to avoid accidental data loss and to ensure that your application continues to function correctly after the table is deleted.

Error handling and troubleshooting with Boto3 and DynamoDB

When working with AWS DynamoDB through Boto3 in Python, it’s crucial to implement robust error handling and troubleshooting mechanisms. Here are some best practices and tips for managing errors and ensuring your application can recover gracefully from issues.

Common DynamoDB Errors

- ProvisionedThroughputExceededException: Occurs when the request rate is too high for the provisioned throughput capacity.

- ResourceNotFoundException: Triggered when a requested resource (e.g., a table) is not found.

- ConditionalCheckFailedException: Raised when the condition expression of a conditional write (PutItem, UpdateItem, or DeleteItem) evaluates to false.

- ValidationException: Happens when an input value does not meet the expected format or constraints.

Error Handling Techniques

- Try-Except Blocks: Use `try`/`except` blocks to catch the `ClientError` exceptions that Boto3 raises when AWS service errors occur.

```python
import boto3
from botocore.exceptions import ClientError

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('YourTableName')

try:
    response = table.get_item(Key={'PrimaryKey': 'Value'})
except ClientError as e:
    error_code = e.response['Error']['Code']
    if error_code == 'ResourceNotFoundException':
        print("Table not found!")
    else:
        print(f"Unexpected error: {e}")
```

- Error Retries and Exponential Backoff: Implement retries with exponential backoff to handle transient errors. Boto3 has built-in support for automatic retries, but you can customize the retry strategy as needed.
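Botocore already retries throttling and transient errors for every call, and you can tune that behavior by passing `config=botocore.config.Config(retries={"max_attempts": 10, "mode": "standard"})` to `boto3.client` or `boto3.resource`. When you need retries around a larger unit of work, a sketch of exponential backoff with full jitter looks like this. `call_with_backoff` and `RETRYABLE_CODES` are hypothetical names, and the set of codes treated as transient is an assumption to adjust for your workload. Keep in mind that application-level retries multiply with the SDK’s own retries.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Error codes this sketch treats as transient (an assumption; tune for your workload).
RETRYABLE_CODES = {
    "ProvisionedThroughputExceededException",
    "ThrottlingException",
    "RequestLimitExceeded",
    "InternalServerError",
}


def _error_code(err: Exception) -> str:
    """Read the AWS error code from a botocore ClientError-style exception."""
    response = getattr(err, "response", None) or {}
    return response.get("Error", {}).get("Code", "")


def call_with_backoff(operation: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 0.1, max_delay: float = 5.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying transient errors with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception as err:
            # Give up on non-transient errors or once the attempt budget is spent
            if _error_code(err) not in RETRYABLE_CODES or attempt == max_attempts - 1:
                raise
            # The delay ceiling doubles each attempt (capped at max_delay); a random
            # delay below it keeps many clients from retrying in lockstep
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("retry loop exited unexpectedly")
```

For example, `call_with_backoff(lambda: table.get_item(Key={'PrimaryKey': 'Value'}))` retries the read above only on the listed throttling and server errors.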

Troubleshooting Tips

- Request IDs: Each error response from DynamoDB includes a Request ID, which can be used to work with AWS Support for diagnosing issues.

- Error Codes and Descriptions: Pay attention to the error codes and descriptions provided in the exception messages to understand the nature of the problem.
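Both tips come down to reading the right fields from the exception. Botocore’s `ClientError` exposes a `response` dictionary whose `Error` entry holds the code and message and whose `ResponseMetadata` entry holds the request ID and HTTP status. A small sketch of a hypothetical helper, `describe_client_error`, that collects them for logging:

```python
from typing import Any, Dict


def describe_client_error(err: Exception) -> Dict[str, Any]:
    """Pull the troubleshooting fields out of a botocore ClientError-style exception.

    Missing fields come back as None, so this is safe to call on any exception.
    """
    response = getattr(err, "response", None) or {}
    error = response.get("Error", {})
    metadata = response.get("ResponseMetadata", {})
    return {
        "code": error.get("Code"),
        "message": error.get("Message"),
        "request_id": metadata.get("RequestId"),
        "http_status": metadata.get("HTTPStatusCode"),
    }
```

Inside an `except ClientError as e:` block, logging `describe_client_error(e)` records the request ID you would quote to AWS Support alongside the error code.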

Best Practices

- Monitor Throttling Errors: Keep an eye on `ProvisionedThroughputExceededException` errors to adjust provisioned capacity or optimize query patterns.

- Validate Data: Ensure that the data you send in requests conforms to DynamoDB’s data model and validation rules to prevent `ValidationException` errors.

- Handle Conditional Writes: Be prepared to handle `ConditionalCheckFailedException` when performing conditional writes, especially in high-concurrency scenarios.
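A common way to handle conditional writes under concurrency is optimistic locking: keep a numeric version attribute on each item, make every update conditional on the version you read, and re-read and retry when the condition fails. A minimal sketch, assuming a Boto3 `Table` resource and a hypothetical attribute named `Version`; `optimistic_update` is an illustrative name, not a Boto3 API.

```python
from typing import Any, Callable, Dict


def _error_code(err: Exception) -> str:
    """Read the AWS error code from a botocore ClientError-style exception."""
    response = getattr(err, "response", None) or {}
    return response.get("Error", {}).get("Code", "")


def optimistic_update(table: Any, key: Dict[str, Any],
                      change: Callable[[Dict[str, Any]], Dict[str, Any]],
                      max_attempts: int = 3) -> Dict[str, Any]:
    """Apply `change` to an item, using the Version attribute as an optimistic lock.

    `change` receives the current item and returns the attributes to set.
    Returns the updated item; raises RuntimeError if every attempt conflicts.
    """
    for _ in range(max_attempts):
        item = table.get_item(Key=key, ConsistentRead=True).get("Item")
        if item is None:
            raise KeyError(f"item not found: {key!r}")
        updates = change(item)
        if "Version" in updates:
            raise ValueError("change() must not set Version; the helper manages it")
        expected = item.get("Version", 0)
        names = {"#v": "Version"}
        values = {":expected": expected, ":next": expected + 1}
        set_clauses = ["#v = :next"]
        for i, (attr, value) in enumerate(updates.items()):
            names[f"#a{i}"] = attr
            values[f":a{i}"] = value
            set_clauses.append(f"#a{i} = :a{i}")
        try:
            response = table.update_item(
                Key=key,
                UpdateExpression="SET " + ", ".join(set_clauses),
                # Succeeds only if no other writer changed Version since our read
                ConditionExpression="attribute_not_exists(#v) OR #v = :expected",
                ExpressionAttributeNames=names,
                ExpressionAttributeValues=values,
                ReturnValues="ALL_NEW",
            )
            return response["Attributes"]
        except Exception as err:
            if _error_code(err) != "ConditionalCheckFailedException":
                raise
            # Another writer won the race: loop to re-read and try again
    raise RuntimeError(f"gave up after {max_attempts} conflicting writes")
```

For instance, `optimistic_update(table, {'Name': 'Luzze John', 'Email': 'john@hands-on.cloud'}, lambda item: {'Department': 'Legal'})` moves John to Legal without overwriting a concurrent change.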

For more detailed information on error handling and troubleshooting with DynamoDB and Boto3, refer to the AWS documentation.

Remember, effective error handling is not just about catching errors; it’s about creating a resilient application that provides a seamless experience for the end-user, even when faced with unexpected issues.

Best Practices for Using Boto3 with DynamoDB

When working with AWS DynamoDB using the Boto3 library in Python, it’s important to follow best practices to ensure efficient, secure, and cost-effective interactions with the database service. Below are some key best practices to consider:

- Secure Access: Always use IAM roles and policies for accessing DynamoDB rather than hardcoding access and secret keys in your code. This enhances security and allows for better access management.

- Resource Management: Use the `boto3.resource` interface for higher-level, abstracted access to DynamoDB. This allows for more Pythonic code and easier management of DynamoDB items.

- Client vs. Resource: Understand when to use `boto3.client` versus `boto3.resource`. Use `client` for lower-level service operations and `resource` for higher-level, object-oriented service access.

- Error Handling: Implement robust error handling to catch and respond to service exceptions. This includes handling specific exceptions like `ClientError` and using retries where appropriate.

- Efficient Queries: Optimize query patterns to minimize read and write operations. Use queries instead of scans whenever possible, as scans can be more costly and less performant.

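- Data Types: Be mindful of the data types used in DynamoDB. For example, the Boto3 resource interface returns DynamoDB numbers as Python `decimal.Decimal` values and raises a `TypeError` if you try to write a Python `float`, so ensure your application converts between these types correctly.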

- Batch Operations: Utilize batch operations like `batch_get_item` or `batch_write_item` to reduce the number of round trips to the server.

- Pagination: Implement pagination in your queries to handle large datasets efficiently.

- Provisioned Throughput: Monitor and adjust provisioned throughput settings to match your application’s needs and control costs.

- Global Tables: If using DynamoDB Global Tables, follow best practices for global table design to ensure data consistency and replication efficiency.

- Local Development: Use DynamoDB Local for offline development and testing to avoid incurring unnecessary costs.

- Monitoring and Logging: Enable CloudWatch for monitoring and logging to track performance metrics and operational health.
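The Data Types point above usually shows up as a `TypeError` the first time an item containing a float is written. A minimal sketch of two hypothetical helpers, `to_dynamodb` and `from_dynamodb`, that convert floats to `Decimal` before writing and `Decimal` back to `int` or `float` after reading. Converting back to `float` can lose precision, so keep `Decimal` wherever exact values matter (for example, money).

```python
from decimal import Decimal
from typing import Any


def to_dynamodb(value: Any) -> Any:
    """Recursively replace floats with Decimal so Boto3 can serialize the value."""
    if isinstance(value, float):
        # str() first keeps the short form: Decimal(str(0.1)) is Decimal('0.1'),
        # while Decimal(0.1) would carry the full binary expansion
        return Decimal(str(value))
    if isinstance(value, dict):
        return {k: to_dynamodb(v) for k, v in value.items()}
    if isinstance(value, list):
        return [to_dynamodb(v) for v in value]
    if isinstance(value, set):
        return {to_dynamodb(v) for v in value}
    return value


def from_dynamodb(value: Any) -> Any:
    """Recursively turn Decimal values read from DynamoDB into int or float."""
    if isinstance(value, Decimal):
        return int(value) if value == value.to_integral_value() else float(value)
    if isinstance(value, dict):
        return {k: from_dynamodb(v) for k, v in value.items()}
    if isinstance(value, list):
        return [from_dynamodb(v) for v in value]
    if isinstance(value, set):
        return {from_dynamodb(v) for v in value}
    return value
```

In practice you would call `table.put_item(Item=to_dynamodb(item))` on the way in and `from_dynamodb(response['Item'])` on the way out.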

By adhering to these best practices, developers can create robust, scalable, and secure applications that effectively leverage DynamoDB’s capabilities through the Boto3 library in Python.

Real-world examples and use cases of using Boto3 with DynamoDB

Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. When combined with Boto3, the AWS SDK for Python, developers can easily integrate their Python applications with DynamoDB. Here are some real-world examples and use cases where Boto3 is used with DynamoDB:

- Web Application Backends: DynamoDB is a highly scalable and low-latency database for web applications. Using Boto3, developers can store and retrieve user profiles, session data, and other dynamic content.

- Mobile Backends: For mobile applications that require a database to store user data, game states, or mobile analytics, DynamoDB can be accessed via Boto3 to provide a seamless experience across devices.

- IoT Applications: IoT applications often collect vast amounts of data from sensors and devices. Boto3 can be used to write this data to DynamoDB tables, which can be analyzed and monitored in real-time.

- E-commerce Platforms: DynamoDB can handle high-traffic websites and store inventory levels, user shopping carts, and order histories. Boto3 allows e-commerce platforms to interact with DynamoDB to process transactions and track customer data.

- Serverless Architectures: In serverless architectures, such as those built using AWS Lambda, Boto3 interacts with DynamoDB for event-driven processing, such as updating database entries when a new file is uploaded to Amazon S3.

- Data Caching: DynamoDB’s low-latency performance makes it suitable for caching application data. Boto3 can be used to implement caching layers that reduce the load on relational databases or to speed up read-heavy applications.

- Gaming Industry: Games with leaderboards, user states, and real-time analytics can leverage DynamoDB for its high throughput and low-latency characteristics. Boto3 enables game developers to store and retrieve gaming data efficiently.

- Session Storage: For applications that need to maintain a session state across multiple servers, DynamoDB offers a reliable and scalable solution. Boto3 facilitates session management by allowing quick access to session data.

Each of these use cases demonstrates the versatility of DynamoDB when used in conjunction with Boto3. The ability to create tables, insert items, and query data programmatically through Python scripts makes Boto3 a powerful tool for developers working with AWS services.

Performance Optimization and Cost Management with DynamoDB and Boto3

Optimizing the performance and managing costs of Amazon DynamoDB when using Boto3 in Python is crucial for building scalable and cost-effective applications. Here are some best practices to consider:

Performance Optimization

- Choose the Right Keys: Select partition keys that distribute your data evenly across partitions. Avoid hot spots by ensuring a uniform workload across all partition key values.

- Use Indexes Wisely: Implement Global Secondary Indexes (GSIs) and Local Secondary Indexes (LSIs) to optimize query performance, but be mindful of the additional costs.

- Capacity Management: Adjust read and write capacity settings to match your application’s needs, which prevents throttling-related slowdowns without paying for unused excess capacity.

- Data Compression: Compressing large attribute values reduces the amount of data transferred and stored, which saves costs and can improve performance.
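A minimal sketch of attribute compression, assuming the value is JSON-serializable and stored as a Binary (B) attribute; `compress_attribute` and `decompress_attribute` are hypothetical names. Besides lowering storage and throughput costs, this can help keep large items under DynamoDB’s 400 KB item size limit. The trade-off is that DynamoDB cannot see inside the compressed blob, so you cannot filter, index, or write conditions on its contents.

```python
import gzip
import json
from typing import Any


def compress_attribute(value: Any) -> bytes:
    """JSON-encode a value and GZIP it for storage as a DynamoDB Binary (B) attribute."""
    payload = json.dumps(value, separators=(",", ":")).encode("utf-8")
    return gzip.compress(payload)


def decompress_attribute(blob: Any) -> Any:
    """Reverse compress_attribute(); accepts raw bytes or an object with a .value
    attribute, such as the Binary wrapper Boto3's resource interface returns."""
    raw = blob.value if hasattr(blob, "value") else blob
    return json.loads(gzip.decompress(raw).decode("utf-8"))
```

For example, you might store `compress_attribute(history)` in a hypothetical `HistoryGz` attribute with `put_item`, then call `decompress_attribute(item['HistoryGz'])` after `get_item`.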

Cost Management

- Provisioned vs. On-Demand: Choose provisioned capacity when your traffic is predictable, since it is usually cheaper for steady workloads. Use on-demand capacity when the load pattern is unpredictable.

- CloudWatch Monitoring: Amazon CloudWatch metrics, such as consumed read and write capacity and throttled requests, let you track how your tables are used.

- Auto Scaling: DynamoDB auto scaling adjusts your table’s provisioned capacity based on utilization, so the capacity you pay for tracks your actual usage more closely.

- AWS Cost Explorer: Use AWS Cost Explorer to analyze your DynamoDB cost and usage data and identify opportunities for cost savings.
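Choosing provisioned capacity starts with estimating how many capacity units you need. One read capacity unit (RCU) covers one strongly consistent read per second of an item up to 4 KB (eventually consistent reads cost half, transactional reads double), and one write capacity unit (WCU) covers one write per second of an item up to 1 KB (transactional writes double). Item sizes round up to the next 4 KB for reads and the next 1 KB for writes. A sketch of that arithmetic with hypothetical function names, assuming steady traffic; it ignores bursts and the extra write capacity that global secondary indexes consume.

```python
import math

# Read multipliers relative to one strongly consistent read
READ_FACTORS = {"eventual": 0.5, "strong": 1.0, "transactional": 2.0}


def read_capacity_units(item_size_kb: float, reads_per_second: float,
                        consistency: str = "strong") -> int:
    """RCUs for a steady read rate. One RCU = one strongly consistent read per
    second of an item up to 4 KB; larger items round up to the next 4 KB."""
    units_per_read = max(1, math.ceil(item_size_kb / 4))
    return math.ceil(units_per_read * reads_per_second * READ_FACTORS[consistency])


def write_capacity_units(item_size_kb: float, writes_per_second: float,
                         transactional: bool = False) -> int:
    """WCUs for a steady write rate. One WCU = one standard write per second of an
    item up to 1 KB; larger items round up to the next 1 KB, transactions cost 2x."""
    units_per_write = max(1, math.ceil(item_size_kb))
    factor = 2 if transactional else 1
    return math.ceil(units_per_write * writes_per_second * factor)
```

As a worked example, 6 KB items read 100 times per second with strong consistency need 2 RCUs per read, or 200 RCUs (100 if eventually consistent), while 2.5 KB items written 10 times per second need 3 WCUs per write, or 30 WCUs.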

Refer to the DynamoDB Well-Architected Lens and the Best Practices for Querying and Scanning Data for more information.

Regularly review and apply these best practices as your application evolves and AWS services update. Doing so ensures that using DynamoDB with Boto3 remains efficient, performant, and cost-effective.

Security Considerations When Using Boto3 with DynamoDB

When integrating AWS DynamoDB with Python using Boto3, security is paramount. Below are key considerations to ensure your data remains secure:

- Encryption: DynamoDB encrypts all table data at rest by default. You can choose whether the encryption key is an AWS owned key, an AWS managed key, or a customer managed key in AWS KMS, depending on how much control and auditing you need.

- IAM Roles and Policies: Use IAM roles and policies to control access to your DynamoDB resources, granting only the least privilege necessary to perform each task.

- Credential Management: Avoid hard-coding AWS credentials in your code. Instead, use environment variables or AWS Secrets Manager to manage credentials securely.

- Network Security: Use SSL/TLS for data in transit between your application and AWS services to prevent data interception. Enforce a minimum TLS version of 1.2 for enhanced security.

- Logging and Monitoring: AWS CloudTrail and Amazon CloudWatch help audit and detect unusual access patterns.

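- Exception Handling: Catch exceptions in your Boto3 code and log sanitized messages so that stack traces do not expose sensitive information to end users.

- Avoiding Security Risks: Be cautious when writing user-provided data to DynamoDB to prevent injection attacks. Validate and sanitize all input, and pass user-supplied values through ExpressionAttributeValues rather than concatenating them into expression strings.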
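To apply least privilege from the IAM bullet, scope a policy to the specific table and its indexes instead of granting `dynamodb:*` on `*`. A minimal sketch of a hypothetical helper, `table_policy`, that builds such a policy document; the action lists are an illustrative split between read-only and read-write components, and the account ID in the usage block is a placeholder.

```python
import json
from typing import Any, Dict, Sequence

# Data-plane actions for a component that only reads from the table
READ_ONLY_ACTIONS = ("dynamodb:GetItem", "dynamodb:BatchGetItem", "dynamodb:Query")
# Add write actions only for components that actually modify items
READ_WRITE_ACTIONS = READ_ONLY_ACTIONS + (
    "dynamodb:PutItem",
    "dynamodb:UpdateItem",
    "dynamodb:DeleteItem",
    "dynamodb:BatchWriteItem",
)


def table_policy(region: str, account_id: str, table: str,
                 actions: Sequence[str] = READ_ONLY_ACTIONS) -> Dict[str, Any]:
    """Build an IAM policy document that allows `actions` on one table and its indexes."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(actions),
                # Queries that pass IndexName are authorized against the index ARN
                "Resource": [table_arn, f"{table_arn}/index/*"],
            }
        ],
    }


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID
    print(json.dumps(table_policy("us-east-1", "123456789012", "Employees"), indent=2))
```

The generated JSON can be attached to the IAM role your application runs under, so the code itself never handles long-lived keys.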

FAQ

How do I get the DynamoDB table status?

To get a DynamoDB table’s status, you can read the table_status attribute of the Boto3 Table resource:

```python
import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('name')  # replace 'name' with your table name

print(f'Table status: {table.table_status}')
```
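Resource attributes such as `table_status` are loaded on first access and then cached, so call `table.reload()` to see a newer value. For the common case of waiting until a table is ACTIVE, Boto3 already provides `table.wait_until_exists()` on the resource and the `table_exists` waiter on the client. For other target statuses, a polling sketch with a hypothetical helper, `wait_for_table_status`, assuming `client` is a Boto3 DynamoDB client:

```python
import time
from typing import Any, Callable


def wait_for_table_status(client: Any, table_name: str, desired: str = "ACTIVE",
                          poll_seconds: float = 5.0, max_polls: int = 60,
                          sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll client.describe_table() until TableStatus equals `desired`.

    Each call fetches a fresh description, unlike the cached table_status attribute.
    Raises TimeoutError if the status is not reached within max_polls calls.
    """
    if max_polls < 1:
        raise ValueError("max_polls must be at least 1")
    status = ""
    for attempt in range(max_polls):
        status = client.describe_table(TableName=table_name)["Table"]["TableStatus"]
        if status == desired:
            return status
        if attempt < max_polls - 1:
            sleep(poll_seconds)
    raise TimeoutError(f"{table_name} did not reach {desired}; last status was {status}")
```

For example, `wait_for_table_status(boto3.client('dynamodb'), 'Employees')` blocks until the Employees table reports ACTIVE after an `update_table` call.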