VPC Endpoint Cross Region Access using Terraform

While building multi-region AWS infrastructure that meets corporate security requirements, AWS infrastructure engineers often face the challenge of providing access to resources and services deployed in different AWS Regions using AWS PrivateLink. You can only use VPC endpoints to access resources in the same AWS Region as the endpoint. In the AWS Architecture blog, Michael Haken, Principal Solutions Architect on the AWS Strategic Accounts team, recently shared guidance on Using VPC Endpoints in Multi-Region Architectures with Route 53 Resolver. This article covers how to automate VPC Endpoint cross-region access using Route53 Resolver Endpoints, Route53 Resolver Rules, and Terraform.

Prerequisites for VPC Endpoint Cross Region Access

In this article, we’ll use Terraform to set up the following AWS services:

- Amazon VPCs

- Amazon S3 Buckets

- Amazon VPC Peering connections

- Amazon VPC Endpoints

- Amazon Route53 Resolver Endpoints

- Amazon EC2

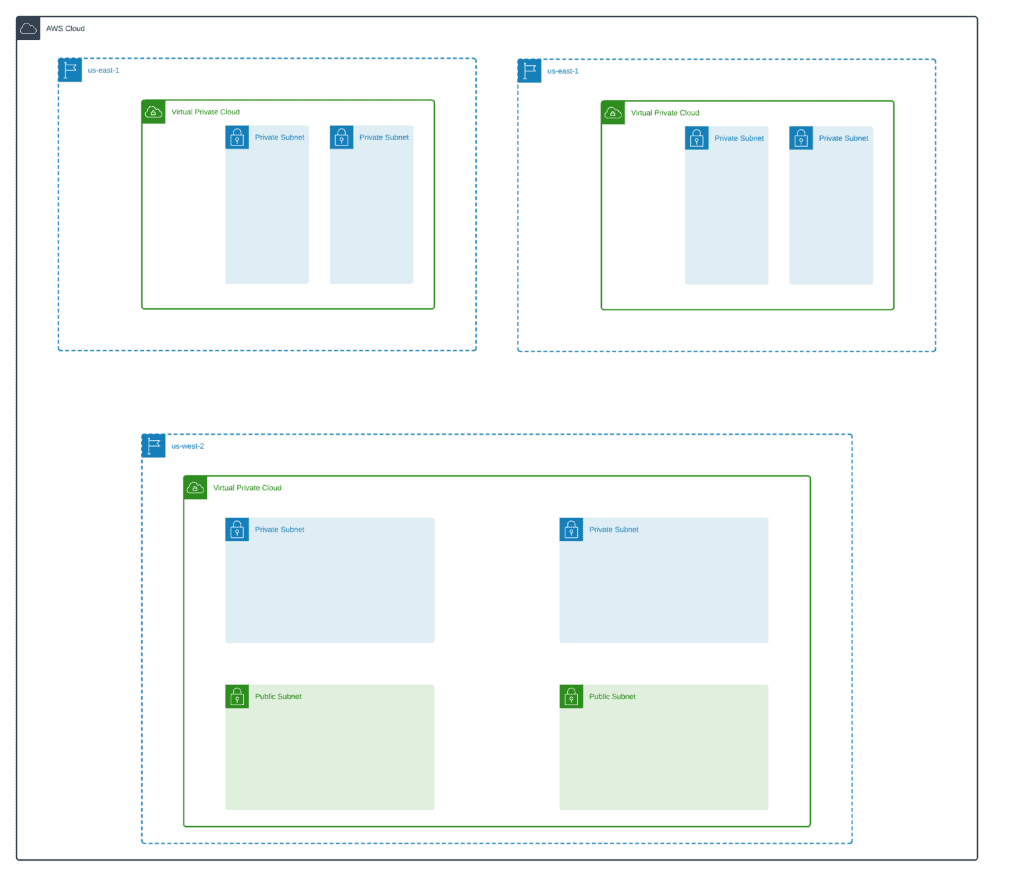

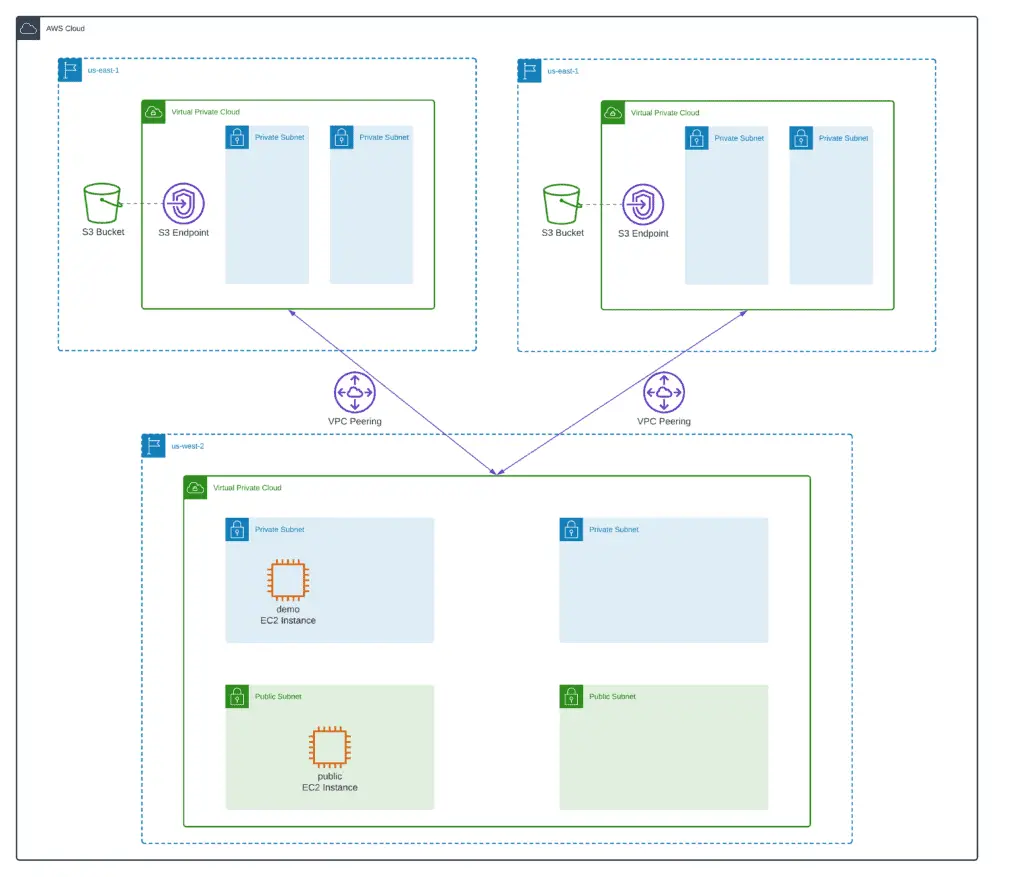

We’ll create an infrastructure similar to the one in the original article, but deploy only the required VPC Endpoints and Route53 Resolver Endpoints.

Here’s an architecture diagram of our infrastructure:

We’ll use Amazon EC2 instances with the following roles:

- Demo EC2 instance to test access to VPC Endpoints from us-west-2 to us-east-1 and us-east-2 AWS Regions

- Public EC2 instance will serve the role of bastion host and allow SSH access to the demo EC2 instance from the Internet

You can find all source code in our GitHub repository:

- vpc-endpoints-multi-region-access – Terraform modules to deploy all infrastructure components

Note: all Terraform modules rely on the remote state stored in the S3 bucket and use DynamoDB for execution lock. You can deploy this infrastructure using the 0_remote_state module.
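For reference, a remote state configuration of the kind this note describes could look like the sketch below. The bucket name, state key, Region, and DynamoDB table name are placeholders rather than values from the repository; substitute the ones created by the 0_remote_state module.

```hcl
# Hypothetical backend configuration: every name below is a placeholder
terraform {
  backend "s3" {
    bucket         = "my-terraform-remote-state"                           # assumed S3 bucket name
    key            = "vpc-endpoints-multi-region-access/terraform.tfstate" # assumed state key
    region         = "us-east-1"                                           # assumed bucket Region
    dynamodb_table = "my-terraform-state-lock"                             # assumed lock table
    encrypt        = true
  }
}
```

The DynamoDB table used for locking needs a string partition key named LockID.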

We strongly suggest reviewing additional information on using AWS PrivateLink for Amazon S3 before automating this solution using Terraform.

AWS Regions

We’ll use the following AWS Regions:

- us-east-1 (N. Virginia)

- us-east-2 (Ohio)

- us-west-2 (Oregon)

Let’s declare Terraform providers (provider.tf):

provider "aws" {

region = "us-east-1"

}

provider "aws" {

alias = "us-east-2"

region = "us-east-2"

}

provider "aws" {

alias = "us-west-2"

region = "us-west-2"

}

Deploying VPCs

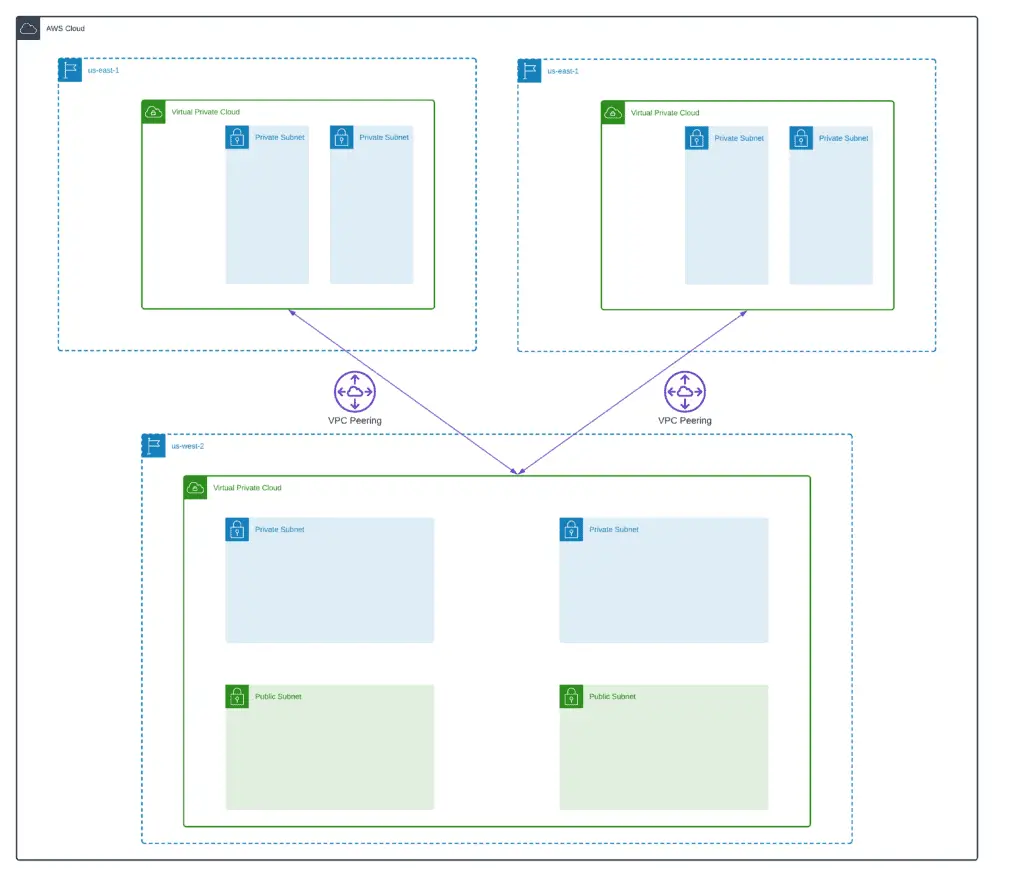

In this section, we’ll deploy 3 VPCs in 3 AWS regions and peer them together as shown on the architecture diagram.

This time, we won’t build a VPC from scratch. Instead, we’ll use an existing Terraform module – terraform-aws-modules/vpc/aws.

If you’re interested in more in-depth information on creating a VPC infrastructure using Terraform, please check out our previous posts:

- Terraform – Managing AWS VPC – Creating public subnet

- Terraform – Managing AWS VPC – Creating private subnets

Let’s define VPC parameters and some other common local variables (main.tf):

data "aws_caller_identity" "current" {}

locals {

prefix = "vpc-endpoints-multi-region-access"

aws_account = data.aws_caller_identity.current.account_id

common_tags = {

Project = local.prefix

ManagedBy = "Terraform"

}

vpcs = {

us-east-1 = {

cidr = "10.0.0.0/16"

region = "us-east-1"

name = "${local.prefix}-us-east-1"

azs = ["us-east-1a", "us-east-1b"]

private_subnets = ["10.0.0.0/24", "10.0.1.0/24"]

}

us-east-2 = {

cidr = "10.1.0.0/16"

region = "us-east-2"

name = "${local.prefix}-us-east-2"

azs = ["us-east-2a", "us-east-2b"]

private_subnets = ["10.1.0.0/24", "10.1.1.0/24"]

}

us-west-2 = {

cidr = "10.2.0.0/16"

region = "us-west-2"

name = "${local.prefix}-us-west-2"

azs = ["us-west-2a", "us-west-2b"]

public_subnets = ["10.2.10.0/24", "10.2.11.0/24"]

private_subnets = ["10.2.0.0/24", "10.2.1.0/24"]

}

}

}

Now, we can declare our VPCs (main.tf):

module "vpc_us_east_1" {

source = "terraform-aws-modules/vpc/aws"

version = "3.10.0"

name = local.vpcs.us-east-1.name

cidr = local.vpcs.us-east-1.cidr

enable_dns_hostnames = true

enable_dns_support = true

azs = local.vpcs.us-east-1.azs

private_subnets = local.vpcs.us-east-1.private_subnets

tags = local.common_tags

}

module "vpc_us_east_2" {

source = "terraform-aws-modules/vpc/aws"

version = "3.10.0"

name = local.vpcs.us-east-2.name

cidr = local.vpcs.us-east-2.cidr

enable_dns_hostnames = true

enable_dns_support = true

azs = local.vpcs.us-east-2.azs

private_subnets = local.vpcs.us-east-2.private_subnets

tags = local.common_tags

providers = {

aws = aws.us-east-2

}

}

module "vpc_us_west_2" {

source = "terraform-aws-modules/vpc/aws"

version = "3.10.0"

name = local.vpcs.us-west-2.name

cidr = local.vpcs.us-west-2.cidr

enable_dns_hostnames = true

enable_dns_support = true

azs = local.vpcs.us-west-2.azs

public_subnets = local.vpcs.us-west-2.public_subnets

private_subnets = local.vpcs.us-west-2.private_subnets

tags = local.common_tags

providers = {

aws = aws.us-west-2

}

}

The most important part here is enabling DNS support for your VPCs. Otherwise, you won’t be able to resolve the DNS names of the interface VPC endpoints.

VPCs Peering

Once the VPCs are declared, we need to peer them accordingly (main.tf).

# Peering connection: us_west-2 <-> us_east_1

resource "aws_vpc_peering_connection" "us_west-2-us_east_1" {

vpc_id = module.vpc_us_west_2.vpc_id

peer_vpc_id = module.vpc_us_east_1.vpc_id

peer_owner_id = local.aws_account

peer_region = "us-east-1"

auto_accept = false

tags = local.common_tags

provider = aws.us-west-2

}

resource "aws_vpc_peering_connection_accepter" "us_east_1-us_west-2" {

provider = aws

vpc_peering_connection_id = aws_vpc_peering_connection.us_west-2-us_east_1.id

auto_accept = true

tags = local.common_tags

}

resource "aws_route" "us_west-2-us_east_1" {

count = length(module.vpc_us_west_2.private_route_table_ids)

route_table_id = module.vpc_us_west_2.private_route_table_ids[count.index]

destination_cidr_block = module.vpc_us_east_1.vpc_cidr_block

vpc_peering_connection_id = aws_vpc_peering_connection.us_west-2-us_east_1.id

provider = aws.us-west-2

}

resource "aws_route" "us_east_1-us_west-2" {

count = length(module.vpc_us_east_1.private_route_table_ids)

route_table_id = module.vpc_us_east_1.private_route_table_ids[count.index]

destination_cidr_block = module.vpc_us_west_2.vpc_cidr_block

vpc_peering_connection_id = aws_vpc_peering_connection_accepter.us_east_1-us_west-2.id

}

# Peering connection: us_west-2 <-> us_east_2

resource "aws_vpc_peering_connection" "us_west-2-us_east_2" {

vpc_id = module.vpc_us_west_2.vpc_id

peer_vpc_id = module.vpc_us_east_2.vpc_id

peer_owner_id = local.aws_account

peer_region = "us-east-2"

auto_accept = false

tags = local.common_tags

provider = aws.us-west-2

}

resource "aws_vpc_peering_connection_accepter" "us_east_2-us_west-2" {

provider = aws.us-east-2

vpc_peering_connection_id = aws_vpc_peering_connection.us_west-2-us_east_2.id

auto_accept = true

tags = local.common_tags

}

resource "aws_route" "us_west-2-us_east_2" {

count = length(module.vpc_us_west_2.private_route_table_ids)

route_table_id = module.vpc_us_west_2.private_route_table_ids[count.index]

destination_cidr_block = module.vpc_us_east_2.vpc_cidr_block

vpc_peering_connection_id = aws_vpc_peering_connection.us_west-2-us_east_2.id

provider = aws.us-west-2

}

resource "aws_route" "us_east_2-us_west-2" {

count = length(module.vpc_us_east_2.private_route_table_ids)

route_table_id = module.vpc_us_east_2.private_route_table_ids[count.index]

destination_cidr_block = module.vpc_us_west_2.vpc_cidr_block

vpc_peering_connection_id = aws_vpc_peering_connection_accepter.us_east_2-us_west-2.id

provider = aws.us-east-2

}

For every peering connection, we also define routes in the private route tables of both VPCs so that network traffic can flow through the peering connection.

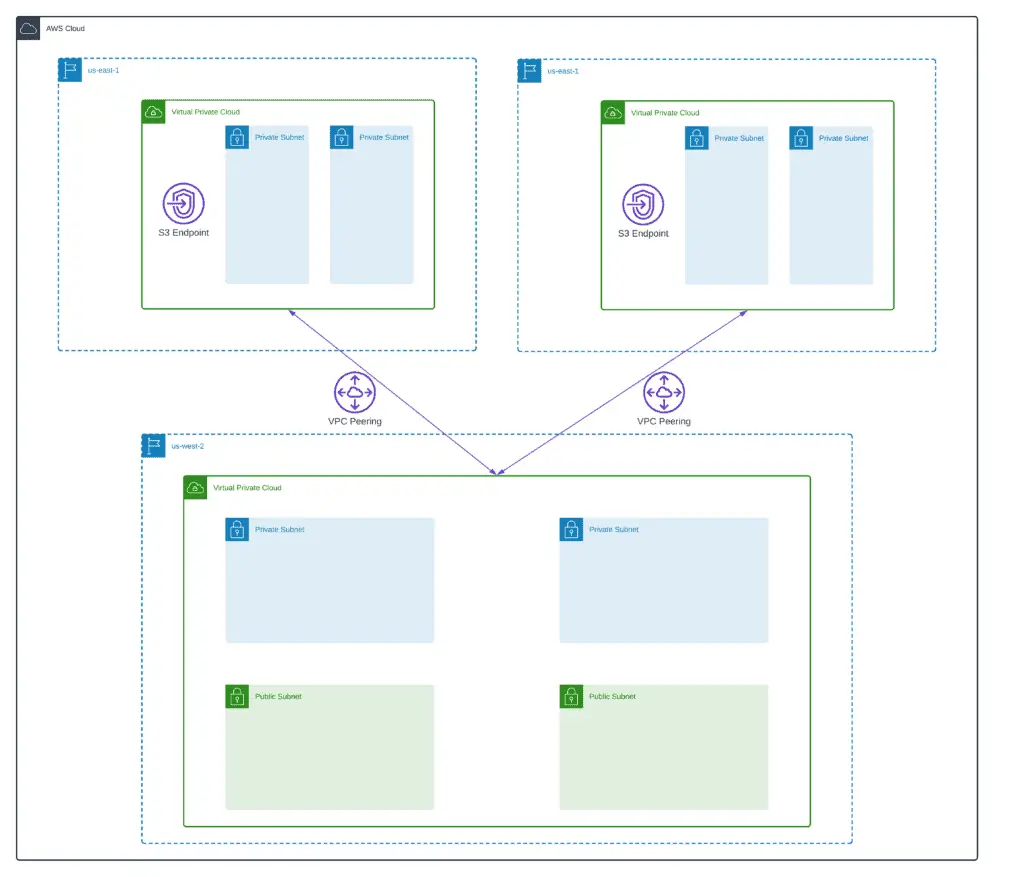

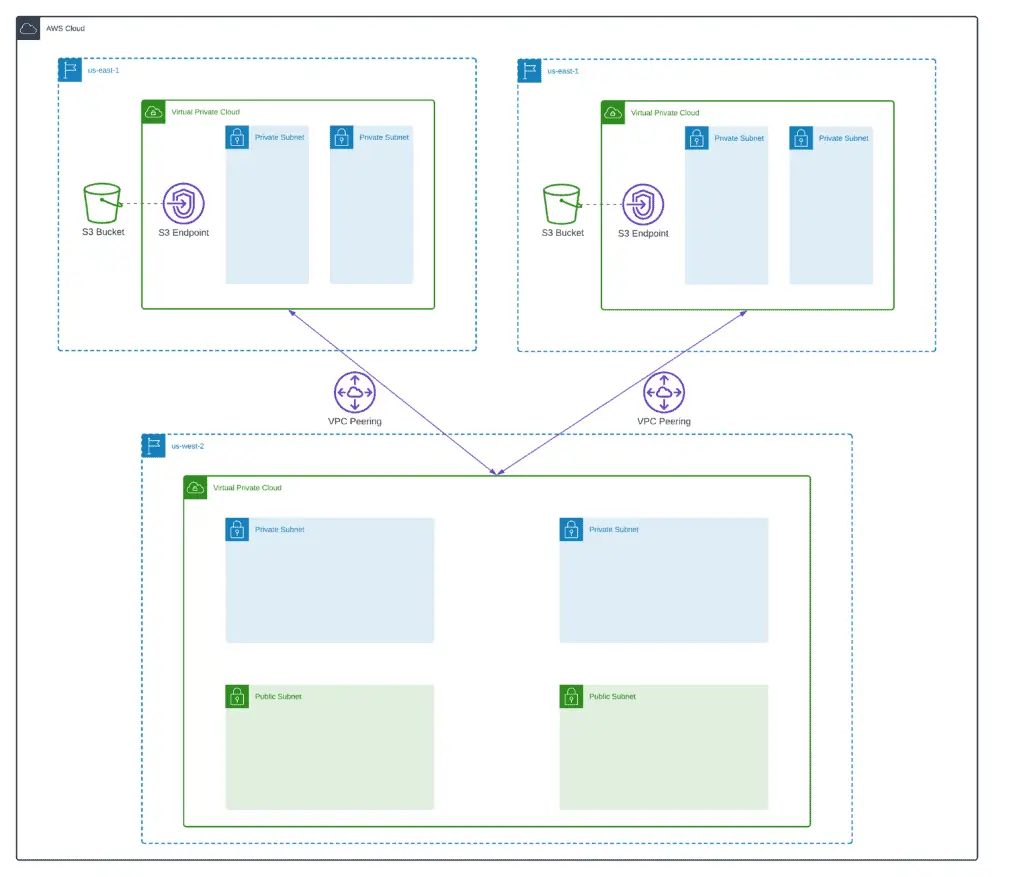

Setting up VPC Endpoints

The last part of the VPC configuration is to create the required VPC endpoints. We’ll use the terraform-aws-modules/vpc/aws//modules/vpc-endpoints Terraform module to simplify endpoint management (vpc_endpoints.tf).

# us-east-1

resource "aws_security_group" "endpoints_us_east_1" {

name = "${local.prefix}-endpoints"

description = "Allow all HTTPS traffic"

vpc_id = module.vpc_us_east_1.vpc_id

ingress = [

{

description = "HTTPS Traffic"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = local.common_tags

}

module "endpoints_us_east_1" {

source = "terraform-aws-modules/vpc/aws//modules/vpc-endpoints"

version = "3.10.0"

vpc_id = module.vpc_us_east_1.vpc_id

security_group_ids = [aws_security_group.endpoints_us_east_1.id]

endpoints = {

s3 = {

service = "s3"

subnet_ids = module.vpc_us_east_1.private_subnets

tags = { Name = "s3-vpc-endpoint" }

},

}

tags = local.common_tags

}

# us-east-2

resource "aws_security_group" "endpoints_us_east_2" {

name = "${local.prefix}-endpoints"

description = "Allow all HTTPS traffic"

vpc_id = module.vpc_us_east_2.vpc_id

ingress = [

{

description = "HTTPS Traffic"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = local.common_tags

provider = aws.us-east-2

}

module "endpoints_us_east_2" {

source = "terraform-aws-modules/vpc/aws//modules/vpc-endpoints"

version = "3.10.0"

vpc_id = module.vpc_us_east_2.vpc_id

security_group_ids = [aws_security_group.endpoints_us_east_2.id]

endpoints = {

s3 = {

service = "s3"

subnet_ids = module.vpc_us_east_2.private_subnets

tags = { Name = "s3-vpc-endpoint" }

},

}

tags = local.common_tags

providers = {

aws = aws.us-east-2

}

}

# us-west-2

resource "aws_security_group" "endpoints_us_west_2" {

name = "${local.prefix}-endpoints"

description = "Allow all HTTPS traffic"

vpc_id = module.vpc_us_west_2.vpc_id

ingress = [

{

description = "HTTPS Traffic"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = local.common_tags

provider = aws.us-west-2

}

module "endpoints_us_west_2" {

source = "terraform-aws-modules/vpc/aws//modules/vpc-endpoints"

version = "3.10.0"

vpc_id = module.vpc_us_west_2.vpc_id

security_group_ids = [aws_security_group.endpoints_us_west_2.id]

endpoints = {

s3 = {

service = "s3"

subnet_ids = module.vpc_us_west_2.private_subnets

tags = { Name = "s3-vpc-endpoint" }

},

ssm = {

service = "ssm"

subnet_ids = module.vpc_us_west_2.private_subnets

tags = { Name = "ssm-vpc-endpoint" }

},

ssmmessages = {

service = "ssmmessages"

subnet_ids = module.vpc_us_west_2.private_subnets

tags = { Name = "ssmmessages-vpc-endpoint" }

},

ec2messages = {

service = "ec2messages"

subnet_ids = module.vpc_us_west_2.private_subnets

tags = { Name = "ec2messages-vpc-endpoint" }

},

}

tags = local.common_tags

providers = {

aws = aws.us-west-2

}

}

Deploying S3 Buckets

Since Michael Haken chose the S3 service as an example, we’ll stick to his original demo and deploy S3 buckets in the us-east-1 and us-east-2 Regions (s3.tf).

# Demo S3 bucket us-east-1

resource "aws_s3_bucket" "s3_us_east_1" {

bucket = "${local.prefix}-s3-us-east-1"

acl = "private"

force_destroy = true

versioning {

enabled = false

}

server_side_encryption_configuration {

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

lifecycle {

prevent_destroy = false

}

tags = local.common_tags

}

resource "aws_s3_bucket_public_access_block" "s3_us_east_1" {

bucket = aws_s3_bucket.s3_us_east_1.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

}

# Demo S3 bucket us-east-2

resource "aws_s3_bucket" "s3_us_east_2" {

bucket = "${local.prefix}-s3-us-east-2"

acl = "private"

force_destroy = true

versioning {

enabled = false

}

server_side_encryption_configuration {

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

lifecycle {

prevent_destroy = false

}

tags = local.common_tags

provider = aws.us-east-2

}

resource "aws_s3_bucket_public_access_block" "s3_us_east_2" {

bucket = aws_s3_bucket.s3_us_east_2.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

provider = aws.us-east-2

}

Nothing fancy here: just a couple of encrypted S3 buckets with public access blocked.
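The inline acl, versioning, and server_side_encryption_configuration arguments used in these bucket resources match version 3 of the AWS provider. Starting with version 4 of the provider, they are deprecated in favor of standalone resources. As a sketch, assuming a newer provider, the encryption settings of the us-east-1 bucket might be declared separately like this (the resource label is a hypothetical name):

```hcl
# Sketch for AWS provider v4 or later: standalone replacement for the inline
# server_side_encryption_configuration block of the us-east-1 bucket
resource "aws_s3_bucket_server_side_encryption_configuration" "s3_us_east_1" {
  bucket = aws_s3_bucket.s3_us_east_1.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```

The us-east-2 bucket would need the same resource with the aws.us-east-2 provider alias.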

Deploying EC2 Instances

To test multi-region access to VPC endpoints, we need two EC2 instances (ec2.tf):

- Demo EC2 instance to test access to VPC Endpoints from us-west-2 to us-east-1 and us-east-2 AWS Regions

- Public EC2 instance will serve the role of bastion host and allow SSH access to the demo EC2 instance from the Internet

We’re using Amazon Linux 2 AMI and attaching Systems Manager Session Manager and S3 read-only permissions to our EC2 instances.

locals {

ec2_instance_type = "t3.micro"

ssh_key_name = "Lenovo-T410"

}

# Latest Amazon Linux 2

data "aws_ami" "amazon-linux-2" {

owners = ["amazon"]

most_recent = true

filter {

name = "name"

values = ["amzn2-ami-hvm-*-x86_64-ebs"]

}

provider = aws.us-west-2

}

resource "aws_security_group" "ssh" {

name = "${local.prefix}-ssh"

description = "Allow SSH inbound traffic"

vpc_id = module.vpc_us_west_2.vpc_id

ingress = [

{

description = "SSH"

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = {

Name = "allow_ssh"

}

provider = aws.us-west-2

}

# EC2 demo Instance Profile

resource "aws_iam_instance_profile" "ec2_demo" {

name = "${local.prefix}-ec2-demo-instance-profile"

role = aws_iam_role.ec2_demo.name

}

resource "aws_iam_role" "ec2_demo" {

name = "${local.prefix}-ec2-demo-role"

path = "/"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}

]

}

EOF

}

# Allow Systems Manager to manage EC2 instance

# Note: aws_iam_role_policy_attachment is used here because aws_iam_policy_attachment

# manages a policy's attachments exclusively and can detach it from other roles in the account

resource "aws_iam_role_policy_attachment" "ec2_ssm" {

role = aws_iam_role.ec2_demo.name

policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"

}

resource "aws_iam_role_policy_attachment" "ec2_s3_read_only" {

role = aws_iam_role.ec2_demo.name

policy_arn = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"

}

# EC2 demo instance

resource "aws_network_interface" "ec2_demo" {

subnet_id = module.vpc_us_west_2.private_subnets[0]

private_ips = ["10.2.0.101"]

security_groups = [aws_security_group.ssh.id]

provider = aws.us-west-2

}

resource "aws_instance" "ec2_demo" {

ami = data.aws_ami.amazon-linux-2.id

instance_type = local.ec2_instance_type

availability_zone = "us-west-2a"

iam_instance_profile = aws_iam_instance_profile.ec2_demo.name

network_interface {

network_interface_id = aws_network_interface.ec2_demo.id

device_index = 0

}

key_name = local.ssh_key_name

tags = {

Name = "${local.prefix}-ec2-demo"

}

provider = aws.us-west-2

depends_on = [

module.endpoints_us_west_2

]

}

# EC2 public instance

resource "aws_instance" "public" {

ami = data.aws_ami.amazon-linux-2.id

instance_type = local.ec2_instance_type

availability_zone = "us-west-2a"

subnet_id = module.vpc_us_west_2.public_subnets[0]

iam_instance_profile = aws_iam_instance_profile.ec2_demo.name

vpc_security_group_ids = [aws_security_group.ssh.id]

key_name = local.ssh_key_name

tags = {

Name = "${local.prefix}-ec2-public"

}

provider = aws.us-west-2

}

Check the “Managing AWS IAM using Terraform” article for more information about the topic.

Setting up Route53 Resolver Endpoints

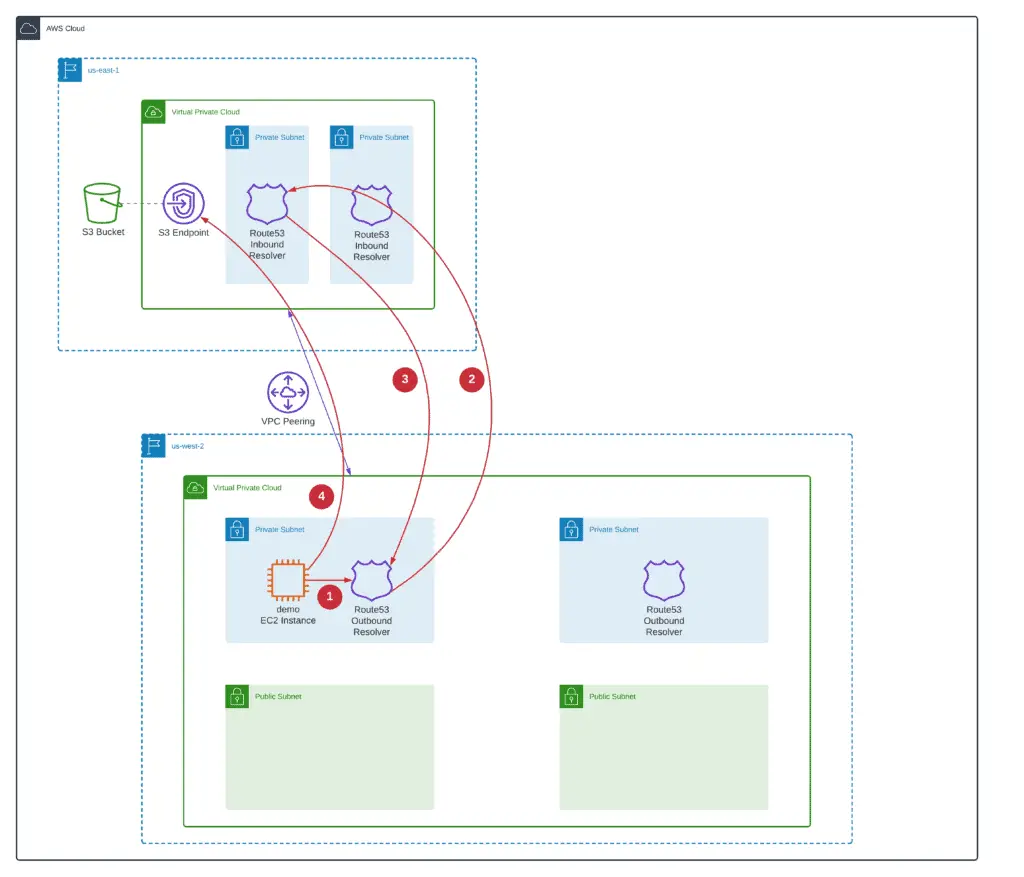

In this section, we’ll deploy the required Route53 Resolver Endpoints and configure Route53 Resolver Endpoint Rules to allow the demo EC2 instance deployed in the private subnet to get access to S3 buckets in different AWS Regions through available VPC Endpoints.

There are two types of Route53 Resolver Endpoints available:

- Inbound Resolver – forwards DNS queries to the DNS service for a VPC from your network (allows external DNS servers or clients to query an internal .2 VPC DNS resolver)

- Outbound Resolver – forwards DNS queries from the DNS service for a VPC to your network (allows an internal .2 VPC DNS resolver to query external DNS servers)

To allow the demo EC2 instance in the us-west-2 VPC to resolve VPC endpoints in us-east-1 and us-east-2, we need to:

- Set up Inbound Resolver endpoints in the us-east-1 and us-east-2 VPCs

- Set up Outbound Resolver endpoint in the us-west-2 VPC

After that, we need to create Outbound Resolver Rules to forward DNS queries from us-west-2 VPC to Inbound Resolver Endpoints in us-east-1 or us-east-2 VPCs.
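Creating the rules is not quite enough on its own: a forwarding rule only applies to DNS queries from VPCs it is associated with. A minimal sketch of that association follows, assuming the four rule resources declared in the Resolver Rules section of this article. The local value and the association's resource label are hypothetical names.

```hcl
locals {
  # Hypothetical helper map: forwarding rules that the us-west-2 VPC should use
  us_west_2_forward_rules = {
    us-east-1      = aws_route53_resolver_rule.us-east-1-rslvr.id
    us-east-1-vpce = aws_route53_resolver_rule.us-east-1-vpce-rslvr.id
    us-east-2      = aws_route53_resolver_rule.us-east-2-rslvr.id
    us-east-2-vpce = aws_route53_resolver_rule.us-east-2-vpce-rslvr.id
  }
}

# Associate every forwarding rule with the VPC that hosts the demo EC2 instance
resource "aws_route53_resolver_rule_association" "us_west_2" {
  for_each = local.us_west_2_forward_rules

  resolver_rule_id = each.value
  vpc_id           = module.vpc_us_west_2.vpc_id

  provider = aws.us-west-2
}
```

Using for_each over a map with static keys keeps one association per rule without repeating the resource four times.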

Here’s how the demo EC2 instance will get access to a specific VPC endpoint:

- The demo EC2 instance queries the .2 VPC DNS resolver for the IP address of a region-specific VPC Endpoint. The query is passed to the Route53 Outbound Resolver

- Based on the Resolver Rules attached to the Outbound Resolver, the query is forwarded to the Route53 Inbound Resolver in the region-specific VPC

- The Route53 Inbound Resolver returns the IP address of the region-specific VPC endpoint to the Route53 Outbound Resolver, and the demo EC2 instance receives it from the .2 DNS resolver

- The demo EC2 instance will start sending API calls to the region-specific VPC endpoint
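When checking the forwarding steps in this flow, it can help to know which IP addresses AWS assigned to the Inbound Resolver endpoints, since those are the targets of the forwarding rules. The output below is a sketch for that purpose. Its name is hypothetical, and it assumes the Inbound Resolver endpoint resources declared in the Resolver Endpoints section.

```hcl
# Hypothetical debugging output: IP addresses assigned to the Inbound Resolver endpoints
output "inbound_resolver_ips" {
  value = {
    us-east-1 = [for addr in aws_route53_resolver_endpoint.inbound_us_east_1.ip_address : addr.ip]
    us-east-2 = [for addr in aws_route53_resolver_endpoint.inbound_us_east_2.ip_address : addr.ip]
  }
  description = "IP addresses of the Inbound Resolver endpoints targeted by the forwarding rules"
}
```

Querying one of these addresses directly from the demo EC2 instance for a VPC endpoint DNS name is a quick way to tell resolver endpoint problems apart from rule problems.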

Resolver Endpoints

Now, let’s set up Route53 Resolver Endpoints and required Security Groups allowing DNS traffic (route53_resolver_rules.tf).

# us-east-1

resource "aws_security_group" "route53_endpoint_us_east_1" {

name = "${local.prefix}-route53-endpoint"

description = "Allow all DNS traffic"

vpc_id = module.vpc_us_east_1.vpc_id

ingress = [

{

description = "DNS Traffic"

from_port = 53

to_port = 53

protocol = "tcp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

},

{

description = "DNS Traffic"

from_port = 53

to_port = 53

protocol = "udp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = local.common_tags

}

resource "aws_route53_resolver_endpoint" "inbound_us_east_1" {

name = "${local.prefix}-inbound-resolver-endpoint"

direction = "INBOUND"

security_group_ids = [

aws_security_group.route53_endpoint_us_east_1.id,

]

ip_address {

subnet_id = module.vpc_us_east_1.private_subnets[0]

}

ip_address {

subnet_id = module.vpc_us_east_1.private_subnets[1]

}

tags = local.common_tags

}

# us-east-2

resource "aws_security_group" "route53_endpoint_us_east_2" {

name = "${local.prefix}-route53-endpoint"

description = "Allow all DNS traffic"

vpc_id = module.vpc_us_east_2.vpc_id

ingress = [

{

description = "DNS Traffic"

from_port = 53

to_port = 53

protocol = "tcp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

},

{

description = "DNS Traffic"

from_port = 53

to_port = 53

protocol = "udp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = local.common_tags

provider = aws.us-east-2

}

resource "aws_route53_resolver_endpoint" "inbound_us_east_2" {

name = "${local.prefix}-inbound-resolver-endpoint"

direction = "INBOUND"

security_group_ids = [

aws_security_group.route53_endpoint_us_east_2.id,

]

ip_address {

subnet_id = module.vpc_us_east_2.private_subnets[0]

}

ip_address {

subnet_id = module.vpc_us_east_2.private_subnets[1]

}

tags = local.common_tags

provider = aws.us-east-2

}

# us-west-2

resource "aws_security_group" "route53_endpoint_us_west_2" {

name = "${local.prefix}-route53-endpoint"

description = "Allow all DNS traffic"

vpc_id = module.vpc_us_west_2.vpc_id

ingress = [

{

description = "DNS Traffic"

from_port = 53

to_port = 53

protocol = "tcp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

},

{

description = "DNS Traffic"

from_port = 53

to_port = 53

protocol = "udp"

cidr_blocks = ["10.0.0.0/8"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

egress = [

{

description = "ALL Traffic"

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = []

prefix_list_ids = []

security_groups = []

self = false

}

]

tags = local.common_tags

provider = aws.us-west-2

}

resource "aws_route53_resolver_endpoint" "outbound_us_west_2" {

name = "${local.prefix}-outbound-resolver-endpoint"

direction = "OUTBOUND"

security_group_ids = [

aws_security_group.route53_endpoint_us_west_2.id,

]

ip_address {

subnet_id = module.vpc_us_west_2.private_subnets[0]

}

ip_address {

subnet_id = module.vpc_us_west_2.private_subnets[1]

}

tags = local.common_tags

provider = aws.us-west-2

}

Resolver Rules

Finally, we need to add four Resolver Rules to the Route53 Outbound Resolver, pointing to the region-specific Route53 Inbound Resolvers:

- us-east-1.amazonaws.com

- us-east-1.vpce.amazonaws.com

- us-east-2.amazonaws.com

- us-east-2.vpce.amazonaws.com

# us-east-1

resource "aws_route53_resolver_rule" "us-east-1-rslvr" {

domain_name = "us-east-1.amazonaws.com"

name = "${local.prefix}-us-east-1-amazonaws-com"

rule_type = "FORWARD"

resolver_endpoint_id = aws_route53_resolver_endpoint.outbound_us_west_2.id

dynamic "target_ip" {

for_each = aws_route53_resolver_endpoint.inbound_us_east_1.ip_address

content {

ip = target_ip.value["ip"]

}

}

tags = local.common_tags

provider = aws.us-west-2

}

resource "aws_route53_resolver_rule" "us-east-1-vpce-rslvr" {

domain_name = "us-east-1.vpce.amazonaws.com"

name = "${local.prefix}-us-east-1-vpce-amazonaws-com"

rule_type = "FORWARD"

resolver_endpoint_id = aws_route53_resolver_endpoint.outbound_us_west_2.id

dynamic "target_ip" {

for_each = aws_route53_resolver_endpoint.inbound_us_east_1.ip_address

content {

ip = target_ip.value["ip"]

}

}

tags = local.common_tags

provider = aws.us-west-2

}

# us-east-2

resource "aws_route53_resolver_rule" "us-east-2-rslvr" {

domain_name = "us-east-2.amazonaws.com"

name = "${local.prefix}-us-east-2-amazonaws-com"

rule_type = "FORWARD"

resolver_endpoint_id = aws_route53_resolver_endpoint.outbound_us_west_2.id

dynamic "target_ip" {

for_each = aws_route53_resolver_endpoint.inbound_us_east_2.ip_address

content {

ip = target_ip.value["ip"]

}

}

tags = local.common_tags

provider = aws.us-west-2

}

resource "aws_route53_resolver_rule" "us-east-2-vpce-rslvr" {

domain_name = "us-east-2.vpce.amazonaws.com"

name = "${local.prefix}-us-east-2-vpce-amazonaws-com"

rule_type = "FORWARD"

resolver_endpoint_id = aws_route53_resolver_endpoint.outbound_us_west_2.id

dynamic "target_ip" {

for_each = aws_route53_resolver_endpoint.inbound_us_east_2.ip_address

content {

ip = target_ip.value["ip"]

}

}

tags = local.common_tags

provider = aws.us-west-2

}

Terraform outputs

We thought it would be helpful to provide some useful outputs, including region-specific S3 VPC Endpoint URLs, AWS CLI test commands, and SSH commands to connect to the public and demo EC2 instances (outputs.tf):

locals {

us_east_1_s3_endpoint_domain = replace(module.endpoints_us_east_1.endpoints.s3.dns_entry[0]["dns_name"], "*", "")

us_east_2_s3_endpoint_domain = replace(module.endpoints_us_east_2.endpoints.s3.dns_entry[0]["dns_name"], "*", "")

regions = {

us-east-1 = {

name = "us-east-1"

s3_endpoint_id = module.endpoints_us_east_1.endpoints.s3.id

s3_bucket_endpoint_url = "https://bucket${local.us_east_1_s3_endpoint_domain}"

s3_access_endpoint_url = "https://accesspoint${local.us_east_1_s3_endpoint_domain}"

s3_control_endpoint = "https://control${local.us_east_1_s3_endpoint_domain}"

s3_bucket = aws_s3_bucket.s3_us_east_1.bucket

test_s3_bucket_endpoint_cmd = "aws s3 --region us-east-1 --endpoint-url https://bucket${local.us_east_1_s3_endpoint_domain} ls s3://${aws_s3_bucket.s3_us_east_1.bucket}/"

# Currently raises: Unsupported configuration when using S3 access-points: Client cannot use a custom "endpoint_url" when specifying an access-point ARN.

test_s3_access_endpoint_cmd = "aws s3api list-objects-v2 --bucket arn:aws:s3:us-east-1:${local.aws_account}:accesspoint/${aws_s3_bucket.s3_us_east_1.bucket} --region us-east-1 --endpoint-url https://accesspoint${local.us_east_1_s3_endpoint_domain}"

test_s3_control_endpoint_cmd = "aws s3control --region us-east-1 --endpoint-url https://control${local.us_east_1_s3_endpoint_domain} list-jobs --account-id ${local.aws_account}"

}

us-east-2 = {

name = "us-east-2"

s3_endpoint_id = module.endpoints_us_east_2.endpoints.s3.id

s3_bucket_endpoint_url = "https://bucket${local.us_east_2_s3_endpoint_domain}"

s3_access_endpoint_url = "https://accesspoint${local.us_east_2_s3_endpoint_domain}"

s3_control_endpoint = "https://control${local.us_east_2_s3_endpoint_domain}"

s3_bucket = aws_s3_bucket.s3_us_east_2.bucket

test_s3_bucket_endpoint_cmd = "aws s3 --region us-east-2 --endpoint-url https://bucket${local.us_east_2_s3_endpoint_domain} ls s3://${aws_s3_bucket.s3_us_east_2.bucket}/"

# Currently raises: Unsupported configuration when using S3 access-points: Client cannot use a custom "endpoint_url" when specifying an access-point ARN.

test_s3_access_endpoint_cmd = "aws s3api list-objects-v2 --bucket arn:aws:s3:us-east-2:${local.aws_account}:accesspoint/${aws_s3_bucket.s3_us_east_2.bucket} --region us-east-2 --endpoint-url https://accesspoint${local.us_east_2_s3_endpoint_domain}"

test_s3_control_endpoint_cmd = "aws s3control --region us-east-2 --endpoint-url https://control${local.us_east_2_s3_endpoint_domain} list-jobs --account-id ${local.aws_account}"

}

}

}

output "us-east-1" {

value = local.regions.us-east-1

description = "us-east-1 outputs (including testing commands)"

}

output "us-east-2" {

value = local.regions.us-east-2

description = "us-east-2 outputs (including testing commands)"

}

output "public_ec2_ssh_cmd" {

value = "ssh ec2-user@${aws_instance.public.public_dns}"

description = "SSH commands to connect to public EC2 instance"

}

output "demo_ec2_ssh_cmd" {

value = "ssh ${aws_instance.ec2_demo.private_ip}"

description = "SSH commands to connect to private EC2 instance for testing endpoints access"

}

Testing

Now, we can deploy the entire infrastructure and test that the demo EC2 instance can get access to the S3 buckets through region-specific VPC Endpoints:

terraform init

terraform apply -auto-approve

In a couple of minutes, you can SSH to the public EC2 instance and use it to SSH to the demo EC2 instance:

ssh ec2-user@ec2-34-212-193-255.us-west-2.compute.amazonaws.com # public EC2 instance

ssh 10.2.0.101 # execute from public EC2 instance

Now, from the demo EC2 instance, execute the following commands to test access to the S3 buckets:

aws s3 --region us-east-1 \

--endpoint-url https://bucket.vpce-06aacae06a7ef260f-chhnwb13.s3.us-east-1.vpce.amazonaws.com \

ls s3://vpc-endpoints-multi-region-access-s3-us-east-1/

aws s3 --region us-east-2 \

--endpoint-url https://bucket.vpce-0f0abce7d9610ceb5-ednztv79.s3.us-east-2.vpce.amazonaws.com \

ls s3://vpc-endpoints-multi-region-access-s3-us-east-2/

Summary

This article covered how to use Route53 Resolver Endpoints and Route53 Resolver Rules to provide access to VPC Endpoints in different AWS Regions, and how to automate this solution using Terraform.