AWS Fargate Private VPC Subnets – Terraform Example

AWS Fargate is one of our favorite serverless compute engines: it lets you focus on building applications without managing servers. You can use it to deploy applications, APIs, and microservices as containers. AWS Fargate also supports machine learning workloads, letting you train, test, and deploy ML models at scale. Launching Fargate tasks in public subnets is straightforward, but launching them in private subnets can be challenging, especially for newcomers to AWS, because the tasks still need a network path to AWS APIs such as Amazon ECR and CloudWatch Logs.

This article shows how to use Terraform to deploy AWS Fargate tasks in private VPC subnets.

Prerequisites

Private VPC subnets usually have no direct route to the Internet, so tasks running in them can’t reach the public AWS API endpoints. To let AWS Fargate talk to AWS APIs over a private channel, we need VPC endpoints for the following services:

- Amazon S3

- Amazon Elastic Container Registry

- Amazon CloudWatch Logs

- AWS Secrets Manager

- AWS Systems Manager

- AWS Key Management Service

Not all AWS services support VPC endpoints (see the list of AWS services currently integrated with VPC endpoints). If an AWS Fargate task needs to reach a service without VPC endpoint support, you must route that traffic through a NAT gateway or a proxy server. For example, at the time of writing, AWS Cloud Map (the service discovery service) did not support VPC endpoints, so we’ll use NAT gateways to let Fargate tasks reach its public API endpoint.
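Before creating endpoints, you can let Terraform confirm that each service offers an interface endpoint in your region. The sketch below uses the AWS provider’s `aws_vpc_endpoint_service` data source; the data source name `required` and the output name are our own (hypothetical) choices. The data source returns an error when no matching endpoint service exists, so `terraform plan` stops before anything is created.

```hcl
# Look up the interface endpoint services this stack relies on.
# If a service has no endpoint in the current region, the lookup fails
# and `terraform plan` stops early.
data "aws_vpc_endpoint_service" "required" {
  for_each = toset(["ecr.dkr", "ecr.api", "logs", "secretsmanager", "ssm", "kms"])

  service      = each.value
  service_type = "Interface"
}

# Full endpoint service names, e.g. com.amazonaws.us-east-1.ecr.api
output "required_endpoint_services" {
  value = [for svc in data.aws_vpc_endpoint_service.required : svc.service_name]
}
```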

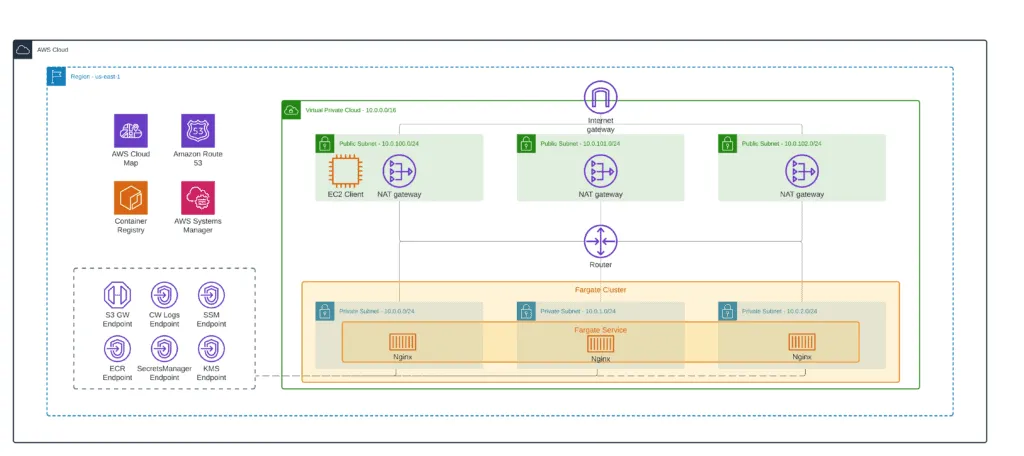

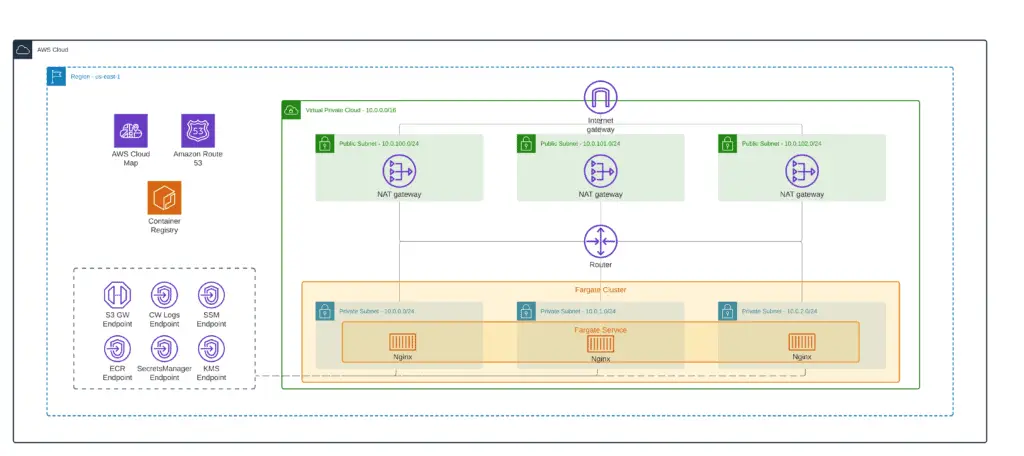

Building Terraform stack

In the following sections, we’ll build a Terraform stack that launches AWS Fargate tasks in private subnets. Here’s an architecture diagram of the entire solution:

Local variables

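First, let’s define the common local variables and data sources for the stack, and pin the AWS provider to the same region (main.tf):

```hcl
locals {
  aws_region = "us-east-1"
  prefix     = "private-fargate-demo"

  common_tags = {
    Project   = local.prefix
    ManagedBy = "Terraform"
  }

  vpc_cidr = "10.0.0.0/16"
}

# Keep the provider region in sync with local.aws_region, which is also used
# to build availability zone names and the ECR image URI
provider "aws" {
  region = local.aws_region
}

data "aws_caller_identity" "current" {}

data "aws_region" "current" {}
```

VPC deployment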

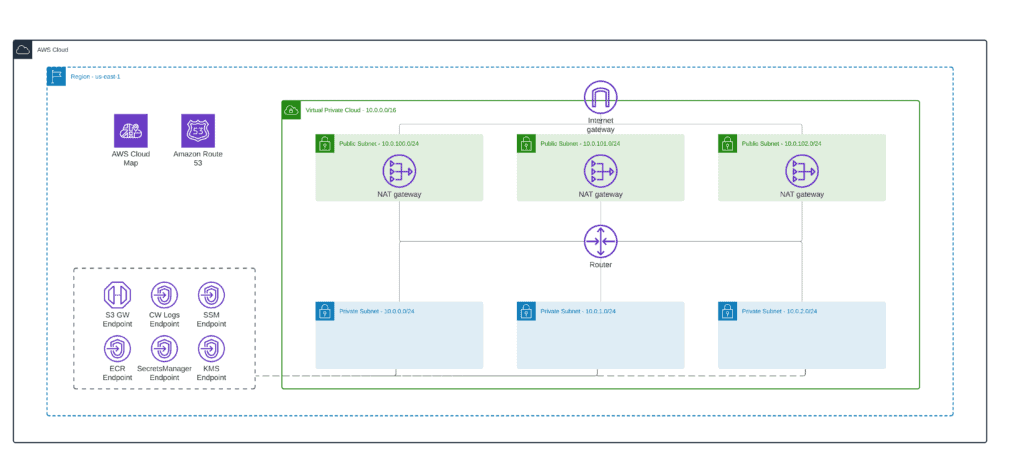

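Now we can build a VPC with three public and three private subnets as a baseline for our environment. To simplify the VPC deployment, we’ll use the popular terraform-aws-modules/vpc/aws module from the Terraform Registry. The same file also defines the private DNS namespace that AWS Cloud Map will use for service discovery (vpc.tf):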

```hcl
module "vpc" {
  source = "terraform-aws-modules/vpc/aws"

  name = "${local.prefix}-vpc"
  cidr = local.vpc_cidr

  azs             = ["${local.aws_region}a", "${local.aws_region}b", "${local.aws_region}c"]
  private_subnets = ["10.0.0.0/24", "10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.100.0/24", "10.0.101.0/24", "10.0.102.0/24"]

  # One NAT gateway per AZ gives the private subnets outbound access
  # to services without VPC endpoints (e.g., AWS Cloud Map)
  enable_nat_gateway     = true
  single_nat_gateway     = false
  one_nat_gateway_per_az = true

  enable_vpn_gateway = false

  # Required for private DNS on interface endpoints and for Cloud Map DNS names
  enable_dns_hostnames = true
  enable_dns_support   = true

  tags = merge(
    local.common_tags,
    {
      Name = "${local.prefix}-vpc"
    }
  )
}

# Private DNS namespace used by AWS Cloud Map for service discovery
resource "aws_service_discovery_private_dns_namespace" "app" {
  name        = "${local.prefix}.hands-on.cloud.local"
  description = "${local.prefix}.hands-on.cloud.local zone"
  vpc         = module.vpc.vpc_id
}
```

An architecture diagram of the current state of your implementation:

For more in-depth information about building an Amazon VPC from scratch, check out the Terraform AWS VPC – Complete Tutorial article.

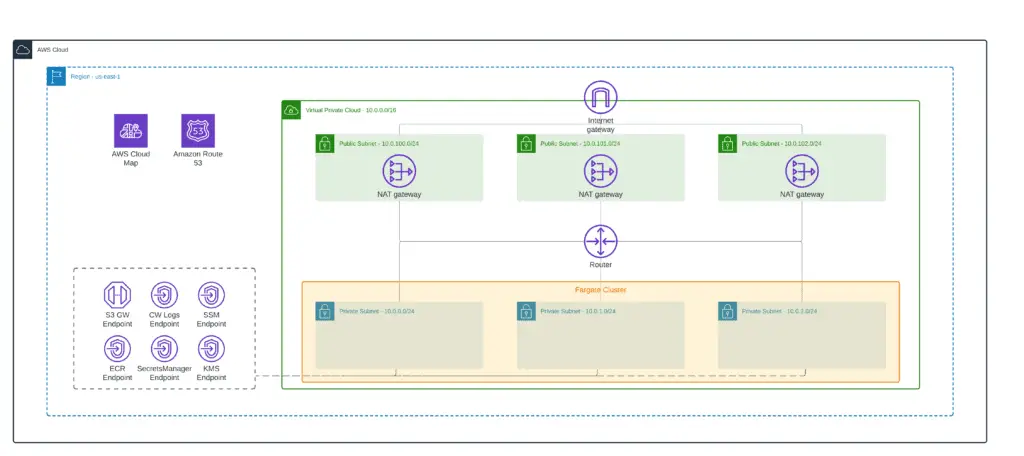
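To check what the module actually created, for example that there is one NAT gateway (and Elastic IP) per availability zone, you can expose a few of the module’s outputs. A minimal sketch: the output names are our own choice, while `private_subnets`, `private_route_table_ids`, and `nat_public_ips` are outputs of the terraform-aws-modules/vpc/aws module.

```hcl
# IDs of the three private subnets where the Fargate tasks will run
output "private_subnet_ids" {
  value = module.vpc.private_subnets
}

# Private route tables (the S3 gateway endpoint is attached to these)
output "private_route_table_ids" {
  value = module.vpc.private_route_table_ids
}

# One Elastic IP per NAT gateway; with one_nat_gateway_per_az = true
# and three AZs, expect three addresses
output "nat_public_ips" {
  value = module.vpc.nat_public_ips
}
```

After `terraform apply`, `terraform output nat_public_ips` lists the NAT gateway addresses that private-subnet traffic to AWS Cloud Map leaves through.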

VPC Endpoints

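Next, we need to define the VPC endpoints that let Fargate tasks call AWS APIs without leaving the VPC (vpc_endpoints.tf). Interface endpoints share a security group that accepts HTTPS (port 443) from the VPC CIDR, while Amazon S3 uses a gateway endpoint attached to the private route tables. Amazon ECR stores image layers in Amazon S3, so pulling images needs both the ECR and S3 endpoints: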

```hcl
# VPC endpoint security group: allow HTTPS from inside the VPC
resource "aws_security_group" "vpc_endpoint" {
  name   = "${local.prefix}-vpce-sg"
  vpc_id = module.vpc.vpc_id

  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = [module.vpc.vpc_cidr_block]
  }

  tags = local.common_tags
}

# Gateway endpoint for Amazon S3, attached to the private route tables
resource "aws_vpc_endpoint" "s3" {
  vpc_id            = module.vpc.vpc_id
  service_name      = "com.amazonaws.${data.aws_region.current.name}.s3"
  vpc_endpoint_type = "Gateway"
  route_table_ids   = module.vpc.private_route_table_ids

  tags = {
    Name        = "s3-endpoint"
    Environment = "dev"
  }
}

# Interface endpoints live in the private subnets. With private DNS enabled,
# the default AWS API hostnames resolve to the endpoints' private IPs.

# Amazon ECR Docker registry (image pulls)
resource "aws_vpc_endpoint" "dkr" {
  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.ecr.dkr"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "dkr-endpoint"
    Environment = "dev"
  }
}

# Amazon ECR API (e.g., ecr:GetAuthorizationToken)
resource "aws_vpc_endpoint" "dkr_api" {
  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.ecr.api"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "dkr-api-endpoint"
    Environment = "dev"
  }
}

# Amazon CloudWatch Logs (awslogs log driver)
resource "aws_vpc_endpoint" "logs" {
  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.logs"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "logs-endpoint"
    Environment = "dev"
  }
}

# AWS Secrets Manager
resource "aws_vpc_endpoint" "secretsmanager" {
  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.secretsmanager"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "secretsmanager-endpoint"
    Environment = "dev"
  }
}

# AWS Systems Manager
resource "aws_vpc_endpoint" "ssm" {
  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.ssm"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "ssm-endpoint"
    Environment = "dev"
  }
}

# AWS Key Management Service
resource "aws_vpc_endpoint" "kms" {
  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.kms"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "kms-endpoint"
    Environment = "dev"
  }
}
```

An architecture diagram of the current state of your implementation:
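The six interface endpoints above differ only in their service name and tags. If you prefer less repetition, a `for_each` sketch like the one below could replace them; the local `interface_endpoints` and the resource name `interface` are hypothetical. Use it instead of the individual resources, not alongside them: two interface endpoints for the same service with private DNS enabled can’t coexist in one VPC.

```hcl
locals {
  # Hypothetical list of interface endpoint service suffixes
  interface_endpoints = ["ecr.dkr", "ecr.api", "logs", "secretsmanager", "ssm", "kms"]
}

# One interface endpoint per service, all in the private subnets
resource "aws_vpc_endpoint" "interface" {
  for_each = toset(local.interface_endpoints)

  vpc_id              = module.vpc.vpc_id
  private_dns_enabled = true
  service_name        = "com.amazonaws.${data.aws_region.current.name}.${each.value}"
  vpc_endpoint_type   = "Interface"
  security_group_ids  = [aws_security_group.vpc_endpoint.id]
  subnet_ids          = module.vpc.private_subnets

  tags = {
    Name        = "${replace(each.value, ".", "-")}-endpoint"
    Environment = "dev"
  }
}

# After deleting the individual resource blocks, a moved block (Terraform 1.1+)
# keeps an already deployed endpoint instead of recreating it.
# Repeat for each service.
moved {
  from = aws_vpc_endpoint.dkr
  to   = aws_vpc_endpoint.interface["ecr.dkr"]
}
```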

Fargate cluster

Let’s define the Amazon ECS cluster that will run our Fargate tasks (fargate.tf):

```hcl
resource "aws_ecs_cluster" "main" {
  name = "${local.prefix}-fargate-cluster"

  tags = merge(
    local.common_tags,
    {
      Name = "${local.prefix}-fargate-cluster"
    }
  )
}
```

An architecture diagram of the current state of your implementation:

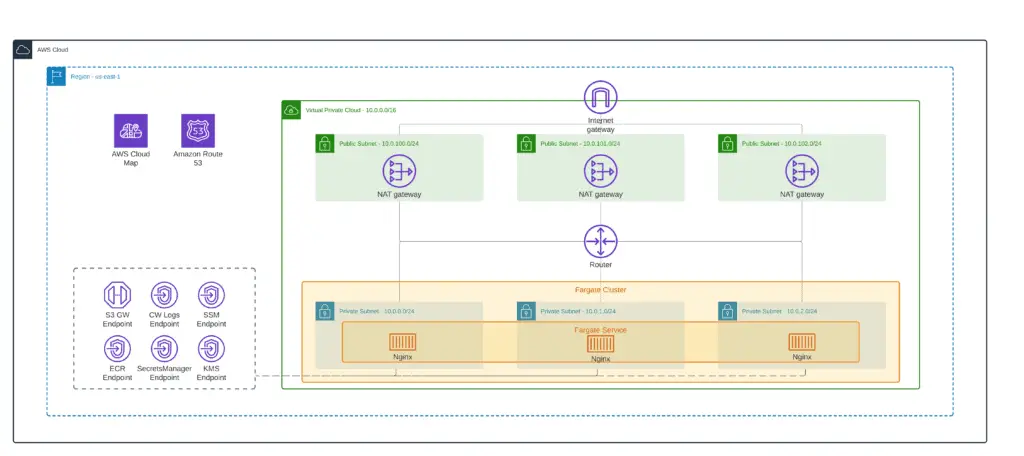
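The cluster resource itself isn’t tied to Fargate: our tasks run on Fargate because the service sets `launch_type = "FARGATE"`. If you’d rather make Fargate the cluster’s default for services that don’t set a launch type, here is a sketch using the AWS provider’s `aws_ecs_cluster_capacity_providers` resource (the resource name `main` is our own choice):

```hcl
# Register the FARGATE capacity provider with the cluster and use it
# by default for services that don't specify a launch type
resource "aws_ecs_cluster_capacity_providers" "main" {
  cluster_name       = aws_ecs_cluster.main.name
  capacity_providers = ["FARGATE"]

  default_capacity_provider_strategy {
    capacity_provider = "FARGATE"
    weight            = 1
  }
}
```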

AWS Fargate Private VPC: service and task

At this step, we can define a demo Fargate service to check that everything works as expected. We’ll use the official Nginx Docker image for the Fargate task definition (we’ll push it to a private Amazon ECR repository later). Here’s the Terraform code (fargate_service.tf):

```hcl
locals {
  aws_account_id       = data.aws_caller_identity.current.account_id
  service_name         = "nginx"
  task_image           = "${local.aws_account_id}.dkr.ecr.${local.aws_region}.amazonaws.com/${local.prefix}-${local.service_name}:latest"
  service_port         = 80
  service_namespace_id = aws_service_discovery_private_dns_namespace.app.id
  cw_log_group         = "/ecs/${local.service_name}"

  # Container definition in the ECS JSON format (camelCase keys).
  # The network mode is a task-level setting (see aws_ecs_task_definition).
  container_definition = [{
    cpu    = 512
    image  = local.task_image
    memory = 1024
    name   = local.service_name

    environment = [
      {
        name  = "SERVICE_DISCOVERY_NAMESPACE_ID"
        value = local.service_namespace_id
      }
    ]

    portMappings = [
      {
        protocol      = "tcp"
        containerPort = local.service_port
        hostPort      = local.service_port # must equal containerPort in awsvpc mode
      }
    ]

    # Send container stdout/stderr to CloudWatch Logs
    logConfiguration = {
      logDriver = "awslogs"
      options = {
        "awslogs-group"         = local.cw_log_group
        "awslogs-region"        = data.aws_region.current.name
        "awslogs-stream-prefix" = "stdout"
      }
    }
  }]
}

# Fargate service

# AWS Fargate security group: allow the service port from inside the VPC
resource "aws_security_group" "fargate_task" {
  name   = "${local.service_name}-fargate-task"
  vpc_id = module.vpc.vpc_id

  ingress {
    from_port   = local.service_port
    to_port     = local.service_port
    protocol    = "tcp"
    cidr_blocks = [module.vpc.vpc_cidr_block]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = merge(
    local.common_tags,
    {
      Name = local.service_name
    }
  )
}

# Trust policy that lets ECS tasks assume the execution and task roles
data "aws_iam_policy_document" "fargate-role-policy" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ecs.amazonaws.com", "ecs-tasks.amazonaws.com"]
    }
  }
}

# Execution role policy: used by ECS to pull the image, write logs,
# and read secrets/parameters on behalf of the task
resource "aws_iam_policy" "fargate_execution" {
  name   = "fargate_execution_policy"
  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecr:GetDownloadUrlForLayer",
        "ecr:BatchGetImage",
        "ecr:BatchCheckLayerAvailability",
        "ecr:GetAuthorizationToken",
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ssm:GetParameters",
        "secretsmanager:GetSecretValue",
        "kms:Decrypt"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}
EOF
}

# Task role policy: permissions available to the application in the container
resource "aws_iam_policy" "fargate_task" {
  name   = "fargate_task_policy"
  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "servicediscovery:ListServices",
        "servicediscovery:ListInstances"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}

resource "aws_iam_role" "fargate_execution" {
  name               = "${local.service_name}-fargate-execution-role"
  assume_role_policy = data.aws_iam_policy_document.fargate-role-policy.json
}

resource "aws_iam_role" "fargate_task" {
  name               = "${local.service_name}-fargate-task-role"
  assume_role_policy = data.aws_iam_policy_document.fargate-role-policy.json
}

resource "aws_iam_role_policy_attachment" "fargate-execution" {
  role       = aws_iam_role.fargate_execution.name
  policy_arn = aws_iam_policy.fargate_execution.arn
}

resource "aws_iam_role_policy_attachment" "fargate-task" {
  role       = aws_iam_role.fargate_task.name
  policy_arn = aws_iam_policy.fargate_task.arn
}

# Fargate container

resource "aws_cloudwatch_log_group" "app" {
  name = local.cw_log_group

  tags = merge(
    local.common_tags,
    {
      Name = local.service_name
    }
  )
}

resource "aws_ecs_task_definition" "app" {
  family                   = local.service_name
  network_mode             = "awsvpc" # the only network mode Fargate supports
  cpu                      = local.container_definition[0].cpu
  memory                   = local.container_definition[0].memory
  requires_compatibilities = ["FARGATE"]
  container_definitions    = jsonencode(local.container_definition)
  execution_role_arn       = aws_iam_role.fargate_execution.arn
  task_role_arn            = aws_iam_role.fargate_task.arn

  tags = merge(
    local.common_tags,
    {
      Name = local.service_name
    }
  )
}

resource "aws_ecs_service" "app" {
  name            = local.service_name
  cluster         = aws_ecs_cluster.main.name
  task_definition = aws_ecs_task_definition.app.arn
  desired_count   = 1
  launch_type     = "FARGATE"

  # Register every task in AWS Cloud Map
  service_registries {
    registry_arn   = aws_service_discovery_service.app_service.arn
    container_name = local.service_name
    container_port = local.service_port
  }

  # Run tasks in the private subnets (no public IP is assigned by default)
  network_configuration {
    security_groups = [aws_security_group.fargate_task.id]
    subnets         = module.vpc.private_subnets
  }
}

# Cloud Map service: creates A and SRV records for each running task
resource "aws_service_discovery_service" "app_service" {
  name = local.service_name

  dns_config {
    namespace_id = local.service_namespace_id

    dns_records {
      ttl  = 10
      type = "A"
    }

    dns_records {
      ttl  = 10
      type = "SRV"
    }

    routing_policy = "MULTIVALUE"
  }

  health_check_custom_config {
    failure_threshold = 1
  }
}
```

An architecture diagram of the current state of your implementation:
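The execution and task policies above are written as heredoc JSON. As an alternative, the `aws_iam_policy_document` data source (already used for the trust policy) lets Terraform build the JSON and catch structural mistakes at plan time. Here is a sketch of the task policy rewritten that way; the data source name `fargate_task` is our own choice and doesn’t clash with the `aws_iam_policy.fargate_task` resource, because data sources and resources use separate namespaces.

```hcl
# Same permissions as the heredoc in aws_iam_policy.fargate_task
data "aws_iam_policy_document" "fargate_task" {
  # Write application logs to CloudWatch Logs
  statement {
    effect = "Allow"
    actions = [
      "logs:CreateLogGroup",
      "logs:CreateLogStream",
      "logs:PutLogEvents",
    ]
    resources = ["*"]
  }

  # Discover other services registered in AWS Cloud Map
  statement {
    effect = "Allow"
    actions = [
      "servicediscovery:ListServices",
      "servicediscovery:ListInstances",
    ]
    resources = ["*"]
  }
}
```

To use it, replace the heredoc in `aws_iam_policy.fargate_task` with `policy = data.aws_iam_policy_document.fargate_task.json`.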

Amazon ECR

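Now we need to define an Amazon Elastic Container Registry (ECR) repository to store our Nginx container image. Its name must match the repository in `local.task_image` (private-fargate-demo-nginx) (ecr.tf):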

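```hcl
resource "aws_ecr_repository" "nginx" {
  # Must match the repository name used in local.task_image
  name                 = "${local.prefix}-nginx"
  image_tag_mutability = "MUTABLE"

  # Scan every pushed image for known vulnerabilities
  image_scanning_configuration {
    scan_on_push = true
  }
}
```

An architecture diagram of the current state of your implementation: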
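The full image URI that the task definition pulls is already computed in `local.task_image`. To avoid retyping the registry address in the Docker commands later, you could expose it as an output; the output name `nginx_image_uri` is hypothetical.

```hcl
# Image URI the task definition pulls, i.e.
# <account-id>.dkr.ecr.us-east-1.amazonaws.com/private-fargate-demo-nginx:latest
output "nginx_image_uri" {
  value = local.task_image
}
```

With that output in place, `docker tag nginx:latest "$(terraform output -raw nginx_image_uri)"` tags the local image with exactly the name the task definition expects.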

Setting up JumpHost

Finally, we’ll deploy an Amazon Linux 2 EC2 instance that plays the role of a JumpHost and lets us test the connection to the Fargate service. We’ll attach an IAM instance profile with the AmazonSSMManagedInstanceCore policy so we can connect to the instance using AWS Systems Manager Session Manager (jumphost.tf):

locals {

demo_ec2_instance_type = "t3.micro"

}

# Latest Amazon Linux 2 AMI

data "aws_ami" "amazon_linux_2" {

most_recent = true

filter {

name = "owner-alias"

values = ["amazon"]

}

filter {

name = "name"

values = ["amzn2-ami-hvm*"]

}

owners = ["amazon"]

}

# EC2 client Instance Profile

resource "aws_iam_instance_profile" "ec2_client" {

name = "${local.prefix}-ec2-client"

role = aws_iam_role.ec2_client.name

}

resource "aws_iam_role" "ec2_client" {

name = "${local.prefix}-ec2-client"

path = "/"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}

]

}

EOF

}

# EC2 client Security Group

resource "aws_security_group" "ec2_client" {

name = "${local.prefix}-ec2-client"

vpc_id = module.vpc.vpc_id

ingress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = [module.vpc.vpc_cidr_block]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = merge(

local.common_tags,

{

Name = "${local.prefix}-ec2-client"

}

)

}

# EC2 client instance

resource "aws_iam_policy_attachment" "ec2_client" {

name = "${local.prefix}-ec2-client-role-attachment"

roles = [aws_iam_role.ec2_client.name]

policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore

}

resource "aws_network_interface" "ec2_client" {

subnet_id = module.vpc.public_subnets[0]

private_ips = ["10.0.100.101"]

security_groups = [aws_security_group.ec2_client.id]

}

resource "aws_instance" "ec2_client" {

ami = data.aws_ami.amazon_linux_2.id

instance_type = local.demo_ec2_instance_type

availability_zone = "${local.aws_region}a"

iam_instance_profile = aws_iam_instance_profile.ec2_client.name

network_interface {

network_interface_id = aws_network_interface.ec2_client.id

device_index = 0

}

tags = merge(

local.common_tags,

{

Name = "${local.prefix}-ec2-client"

}

)

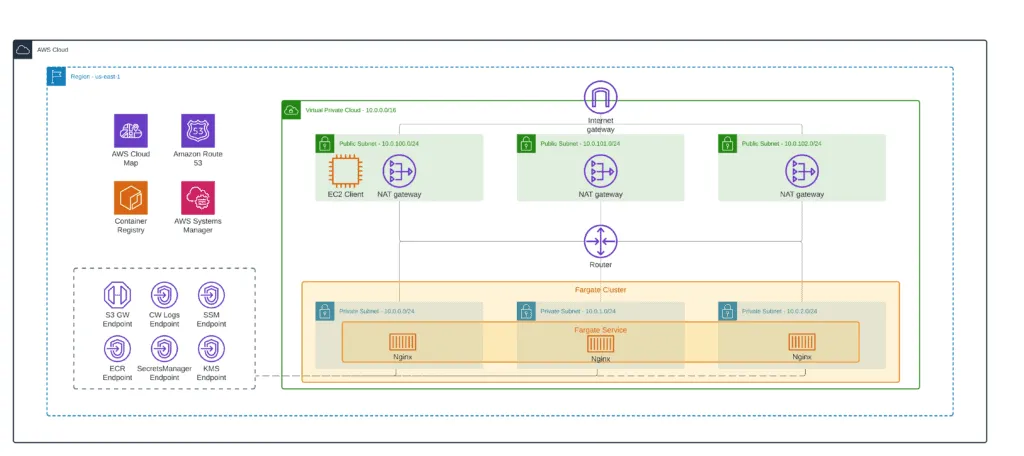

}At this stage, you’ve built the final version of the architecture:
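Because the JumpHost is reached through Session Manager rather than SSH, it can be handy to output the exact command that opens a session. A minimal sketch: the output name is hypothetical, and running the command locally assumes the AWS CLI with the Session Manager plugin installed.

```hcl
# Command that opens a shell on the JumpHost through Session Manager
output "jumphost_session_command" {
  value = "aws ssm start-session --target ${aws_instance.ec2_client.id} --region ${local.aws_region}"
}
```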

Terraform outputs

To simplify testing, we can output a ready-to-run curl command for the Nginx service registered in AWS Cloud Map (outputs.tf):

```hcl
output "nginx_test" {
  value = "curl http://${aws_ecs_service.app.name}.${aws_service_discovery_private_dns_namespace.app.name}"
}
```

Building Docker image

Once the infrastructure is deployed, push the Nginx Docker image to the ECR repository:

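```shell
# Account ID and region of the current AWS CLI profile
# (the region must match the stack's region, us-east-1)
export MY_AWS_ACCOUNT=$(aws sts get-caller-identity --query Account --output text)
export MY_AWS_REGION=$(aws configure get default.region)
export MY_ECR_REGISTRY=$MY_AWS_ACCOUNT.dkr.ecr.$MY_AWS_REGION.amazonaws.com

# Fargate tasks run on x86_64 by default, so pull the linux/amd64 image
docker pull --platform linux/amd64 nginx:latest
docker tag nginx:latest $MY_ECR_REGISTRY/private-fargate-demo-nginx:latest

# Authenticate Docker to the private registry and push the image
aws ecr get-login-password --region $MY_AWS_REGION | docker login --username AWS --password-stdin $MY_ECR_REGISTRY
docker push $MY_ECR_REGISTRY/private-fargate-demo-nginx:latest
```

Stack testing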

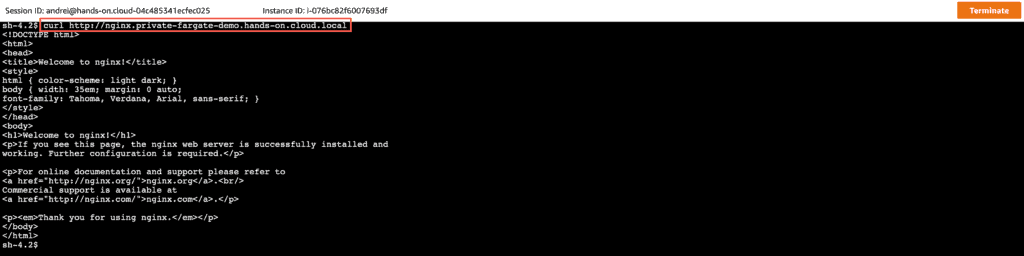

The Fargate service automatically pulls the Nginx Docker image from ECR and starts it as a Fargate task. To test the service, connect to the JumpHost EC2 instance using AWS Systems Manager Session Manager and run the following command:

```shell
curl http://nginx.private-fargate-demo.hands-on.cloud.local
```

FAQ

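Does Fargate run inside a VPC?

Yes. Fargate tasks use the awsvpc network mode, so each task gets its own elastic network interface in the subnets listed in the service’s network configuration.

Does Fargate need a public subnet?

No. As this article shows, Fargate tasks can run in private subnets as long as they can reach the AWS APIs they depend on (Amazon ECR, Amazon S3, CloudWatch Logs, and so on) through VPC endpoints or a NAT gateway.

Does Fargate need a VPC endpoint?

Not necessarily. VPC endpoints are needed when tasks in private subnets must reach AWS services without going through the Internet; tasks with a route through a NAT gateway, or with a public IP in a public subnet, can use the public AWS endpoints instead.

How do you secure AWS Fargate tasks?

The stack in this article runs tasks in private subnets without public IPs, limits inbound traffic with security groups that only accept connections from inside the VPC, keeps AWS API traffic private with VPC endpoints, and separates permissions into an execution role and a task role.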

Summary

This article covered deploying AWS Fargate tasks in private VPC subnets using Terraform. AWS Fargate services are often used as backends behind an Application Load Balancer; for more information on this topic, check out the “Managing AWS Application Load Balancer (ALB) Using Terraform” article.