Amazon Kinesis is a collection of services for collecting, processing, and analyzing streaming data. It enables you to capture and process data as it flows in real time, so you can get timely insights and take action quickly. Kinesis is commonly used in big data, IoT, gaming, and media solutions.

The Kinesis streaming data platform is built to process real-time video and data streams such as application logs and metrics, mobile data, gaming data, website clickstreams, IoT telemetry data, and video streams.

AWS Kinesis consists of four services:

- Kinesis Data Streams – captures streaming data and makes it available to other AWS services for downstream processing.

- Kinesis Data Firehose – processes and delivers streaming data to AWS data stores (S3), analytics services (Redshift, OpenSearch), or third-party services such as New Relic and Splunk.

- Kinesis Data Analytics – lets you analyze real-time streaming data and get actionable insights using SQL or Apache Flink.

- Kinesis Video Streams – captures, processes, and stores video streams for later playback and analytics.

Amazon Kinesis Data Streams

Amazon Kinesis Data Streams acts as a durable, immutable data store that ingests and buffers real-time data streams so that other AWS services and applications can process and analyze the incoming data. To use it, you create a Kinesis data stream.

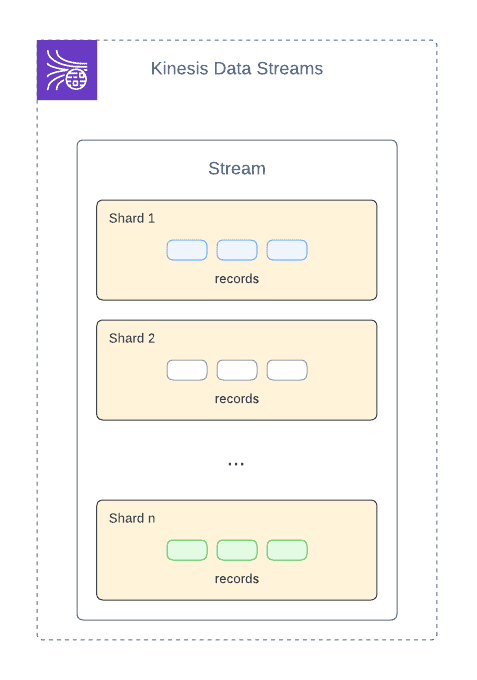

Each Kinesis data stream consists of one or more shards. The number of shards determines the stream’s total throughput capacity.

Each shard gives you 1 MiB/second and 1,000 records/second of write capacity, and 2 MiB/second of read capacity.

If traffic exceeds this capacity, Kinesis throttles the requests; for example, writes fail with a ProvisionedThroughputExceededException.
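To avoid throttling in provisioned mode, size the stream by whichever of the three per-shard limits above is the tightest. The following is a minimal Python sketch that assumes partition keys spread traffic evenly across shards. Skewed keys can still throttle a single "hot" shard even when the total fits. The function name `required_shards` is hypothetical.

```python
import math

# Per-shard limits quoted above.
WRITE_MIB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1_000
READ_MIB_PER_SHARD = 2.0


def required_shards(write_mib_s: float, write_records_s: int, read_mib_s: float) -> int:
    """Smallest shard count whose combined capacity covers all three rates.

    Assumes traffic is spread evenly across shards by the partition keys.
    """
    return max(
        1,
        math.ceil(write_mib_s / WRITE_MIB_PER_SHARD),
        math.ceil(write_records_s / WRITE_RECORDS_PER_SHARD),
        math.ceil(read_mib_s / READ_MIB_PER_SHARD),
    )


# 3.5 MiB/s written as 2,500 records/s, read in full by two consumers (7 MiB/s of reads).
print(required_shards(write_mib_s=3.5, write_records_s=2_500, read_mib_s=7.0))  # → 4
```

In this example, the write volume and the combined reads each call for four shards, while the record rate alone would need only three.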

You can launch a data stream in one of two capacity modes:

- On-demand mode automatically scales the stream’s capacity to accommodate write traffic of up to 200 MiB per second and 200,000 records per second. You pay per stream per hour, plus per GB of data written to and read from the stream.

- Provisioned mode uses a fixed number of shards that you specify. By default, an account can use up to 500 shards in some Regions, such as US East (N. Virginia), and up to 200 shards in other Regions. AWS can raise this limit if you submit a quota increase request. You pay per shard per hour.

Data producers for Kinesis Data Streams

A Kinesis stream producer can be any device or application that writes records using the AWS SDK, the Kinesis Producer Library (KPL), or the AWS CLI. You can also install the Kinesis Agent on your servers to stream files, such as application logs, to Kinesis Data Streams.
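A producer that uses the SDK directly usually groups records into batched PutRecords calls rather than sending them one at a time. The following is a minimal sketch of that batching logic. It assumes the PutRecords limits of 500 records and 5 MiB per request (partition keys included) and a 1 MiB per-record limit counting data plus partition key. The helper `batch_records` is hypothetical. You would pass each resulting batch as the `Records` argument of an SDK call such as boto3's `put_records(StreamName=..., Records=batch)`.

```python
MAX_RECORDS_PER_REQUEST = 500
MAX_BYTES_PER_REQUEST = 5 * 1024 * 1024  # 5 MiB per request, partition keys included
MAX_BYTES_PER_RECORD = 1024 * 1024       # 1 MiB per record, data + partition key


def record_size(record: dict) -> int:
    return len(record["Data"]) + len(record["PartitionKey"].encode("utf-8"))


def batch_records(records: list[dict]) -> list[list[dict]]:
    """Split {"Data": bytes, "PartitionKey": str} dicts into PutRecords-sized batches."""
    batches: list[list[dict]] = []
    current: list[dict] = []
    current_bytes = 0
    for record in records:
        size = record_size(record)
        if size > MAX_BYTES_PER_RECORD:
            raise ValueError(f"record of {size} bytes exceeds the 1 MiB limit")
        # Close the current batch if this record would break either request limit.
        if current and (len(current) == MAX_RECORDS_PER_REQUEST
                        or current_bytes + size > MAX_BYTES_PER_REQUEST):
            batches.append(current)
            current, current_bytes = [], 0
        current.append(record)
        current_bytes += size
    if current:
        batches.append(current)
    return batches


events = [{"Data": f"click {i}".encode(), "PartitionKey": f"user-{i % 10}"}
          for i in range(1_200)]
print([len(batch) for batch in batch_records(events)])  # → [500, 500, 200]
```

PutRecords can partially fail. Check FailedRecordCount in each response and retry only the entries that report an ErrorCode.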

Kinesis record

Producers send data as records (the maximum record size is 1 MB). Each record consists of a “Partition key” and a “Data” field:

- The “Partition key” determines which shard the record goes to.

- The “Data” field contains the actual transmitted data.
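Kinesis maps a partition key to a shard using an MD5 hash. The hash is treated as a 128-bit integer, and the record goes to the shard whose hash key range contains that value. The following Python sketch reproduces the idea under one assumption: the shards split the hash key space evenly. In a real stream, the ranges come from ListShards and change after resharding. The helpers `even_shard_ranges` and `shard_for` are hypothetical.

```python
import bisect
import hashlib

HASH_KEY_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def hash_key(partition_key: str) -> int:
    """MD5 of the UTF-8 partition key, read as an unsigned 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def even_shard_ranges(shard_count: int) -> list[tuple[int, int]]:
    """Hypothetical evenly split (starting, ending) hash key range for each shard."""
    starts = [i * HASH_KEY_SPACE // shard_count for i in range(shard_count)]
    ends = [s - 1 for s in starts[1:]] + [HASH_KEY_SPACE - 1]
    return list(zip(starts, ends))


def shard_for(partition_key: str, ranges: list[tuple[int, int]]) -> int:
    """Index of the shard whose hash key range contains the key's hash."""
    starts = [start for start, _ in ranges]
    return bisect.bisect_right(starts, hash_key(partition_key)) - 1


ranges = even_shard_ranges(4)
for key in ["user-1", "user-2", "user-1"]:
    print(key, "-> shard", shard_for(key, ranges))
```

Because the hash is deterministic, both "user-1" lines print the same shard. This is what keeps records with the same partition key together and in order.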

When Kinesis stores a record, it assigns the record a “Sequence number” that represents its order within the shard. Consumers receive this sequence number along with the partition key and data.

Data Stream consumers

Kinesis Data Streams can be consumed and processed by any application that uses the AWS SDK or the Kinesis Client Library (KCL). Consumers can also be AWS Lambda functions, Kinesis Data Firehose, or Kinesis Data Analytics.
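When Lambda consumes a stream, each invocation receives a batch of records whose `data` field is base64-encoded. The following minimal handler sketch assumes the standard Kinesis event shape (`Records[].kinesis.data`, `partitionKey`, `sequenceNumber`) and producers that send UTF-8 JSON. The helper `decode_kinesis_event` is hypothetical, and the print call stands in for your own processing.

```python
import base64
import json


def decode_kinesis_event(event: dict) -> list[dict]:
    """Extract partition key, sequence number, and decoded JSON payload from each record."""
    decoded = []
    for record in event["Records"]:
        kinesis = record["kinesis"]
        raw = base64.b64decode(kinesis["data"])  # Lambda delivers the data base64-encoded
        decoded.append({
            "partition_key": kinesis["partitionKey"],
            "sequence_number": kinesis["sequenceNumber"],
            "payload": json.loads(raw.decode("utf-8")),  # assumes UTF-8 JSON producers
        })
    return decoded


def handler(event, context):
    """Lambda entry point; replace print with your own processing."""
    for item in decode_kinesis_event(event):
        print(item["partition_key"], item["sequence_number"], item["payload"])


# Local check with a hand-built event holding only the fields used above (values made up).
sample_event = {"Records": [{"kinesis": {
    "partitionKey": "user-1",
    "sequenceNumber": "1001",
    "data": base64.b64encode(json.dumps({"action": "click"}).encode("utf-8")).decode("ascii"),
}}]}
handler(sample_event, None)
```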

Kinesis consumers can read records from each shard in two modes:

- Shared throughput – all consumers reading from a shard share its 2 MB/sec of read throughput.

- Enhanced fan-out – each registered consumer gets its own dedicated 2 MB/sec of read throughput per shard.
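The difference between the two modes grows as you add consumers. The following small Python calculation uses the per-shard figures above. It assumes every consumer reads continuously and that the shared budget splits evenly; in practice, shared consumers compete for it.

```python
SHARD_READ_MB_S = 2.0  # per-shard read throughput from the list above


def per_consumer_read_mb_s(consumers: int, enhanced_fan_out: bool) -> float:
    """Read throughput each consumer can expect from one shard."""
    if consumers < 1:
        raise ValueError("need at least one consumer")
    if enhanced_fan_out:
        return SHARD_READ_MB_S            # each consumer has its own 2 MB/s per shard
    return SHARD_READ_MB_S / consumers    # all consumers split a single 2 MB/s budget


for n in (1, 2, 5):
    print(n, per_consumer_read_mb_s(n, enhanced_fan_out=False),
          per_consumer_read_mb_s(n, enhanced_fan_out=True))
```

With five shared consumers, each one gets about 0.4 MB/s per shard. With enhanced fan-out, each consumer keeps the full 2 MB/s.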

Amazon Kinesis Data Streams Capabilities

- The retention period is configurable from 24 hours (the default) up to 365 days

- You can reprocess any data from Kinesis Data Streams at any time during the retention period

- Data in a Kinesis data stream is immutable: records can’t be modified or deleted individually and are removed only when the retention period expires

- Data with the same Partition key will go to the same shard in the order it was received
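Reprocessing starts from a shard iterator. The following sketch builds the keyword arguments for boto3's `get_shard_iterator` to replay one shard from a point in time. It assumes you know the stream's retention period in hours. The function `replay_iterator_args` and the stream name "clickstream" are hypothetical. If the requested start is older than the retention window, the sketch falls back to TRIM_HORIZON (the oldest retained record).

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MIN_RETENTION_HOURS = 24
MAX_RETENTION_HOURS = 365 * 24  # 8,760 hours


def replay_iterator_args(stream_name: str, shard_id: str, start: datetime,
                         retention_hours: int, now: Optional[datetime] = None) -> dict:
    """Build get_shard_iterator keyword arguments that replay one shard from `start`."""
    if not MIN_RETENTION_HOURS <= retention_hours <= MAX_RETENTION_HOURS:
        raise ValueError("retention must be between 24 hours and 365 days")
    now = now or datetime.now(timezone.utc)
    oldest_retained = now - timedelta(hours=retention_hours)
    args = {"StreamName": stream_name, "ShardId": shard_id}
    if start <= oldest_retained:
        # Anything older has already expired, so read from the oldest retained record.
        args["ShardIteratorType"] = "TRIM_HORIZON"
    else:
        # Replay the shard from the requested point in time onward.
        args["ShardIteratorType"] = "AT_TIMESTAMP"
        args["Timestamp"] = start
    return args


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
args = replay_iterator_args("clickstream", "shardId-000000000000",
                            start=datetime(2024, 1, 9, 12, tzinfo=timezone.utc),
                            retention_hours=24, now=now)
print(args["ShardIteratorType"])  # → AT_TIMESTAMP
```

With boto3, you would then call `client.get_shard_iterator(**args)` and page through `get_records` using the returned iterator.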

Additional features

- IAM access controls

- Encryption in transit (HTTPS) and at rest (AWS KMS)

- VPC endpoints for private connectivity and AWS CloudTrail logging of API calls for additional security
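With IAM access controls, a producer typically needs only write actions on a single stream. The following sketch renders such a least-privilege policy document. The Region, account ID, and stream name are placeholders. A real producer may need additional permissions, for example `kms:GenerateDataKey` when the stream is encrypted with a customer managed KMS key.

```python
import json


def producer_policy(region: str, account_id: str, stream_name: str) -> str:
    """IAM policy JSON that allows writes to a single Kinesis data stream."""
    stream_arn = f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["kinesis:PutRecord", "kinesis:PutRecords"],
            "Resource": stream_arn,
        }],
    }
    return json.dumps(policy, indent=2)


# Placeholder values for illustration only.
print(producer_policy("us-east-1", "123456789012", "clickstream"))
```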

Amazon Kinesis Data Firehose

Amazon Kinesis Data Firehose is a service for delivering streaming data to AWS services or AWS partner services in near real time. It can optionally invoke a Lambda function to transform the streamed data before loading it into Amazon S3, Amazon Redshift, Amazon OpenSearch Service, or a third-party service.
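A Firehose transformation function receives a batch of base64-encoded records. It must return each `recordId` with a `result` (`Ok`, `Dropped`, or `ProcessingFailed`) and base64-encoded `data`. The following minimal sketch assumes JSON input records. The debug-log filter and the `processed` field are hypothetical transformations chosen only for illustration.

```python
import base64
import json


def transform_record(record: dict) -> dict:
    """Transform one Firehose record into an Ok, Dropped, or ProcessingFailed result."""
    record_id = record["recordId"]
    try:
        item = json.loads(base64.b64decode(record["data"]))
    except ValueError:  # bad base64, bad UTF-8, or invalid JSON
        item = None
    if not isinstance(item, dict):
        return {"recordId": record_id, "result": "ProcessingFailed", "data": record["data"]}
    if item.get("level") == "debug":  # hypothetical filter: drop debug logs
        return {"recordId": record_id, "result": "Dropped", "data": record["data"]}
    item["processed"] = True  # hypothetical enrichment
    # Newline-delimited JSON keeps records separable after Firehose concatenates them.
    out = (json.dumps(item) + "\n").encode("utf-8")
    return {"recordId": record_id, "result": "Ok", "data": base64.b64encode(out).decode("ascii")}


def handler(event, context):
    # Firehose expects one result per input record, carrying the same recordId.
    return {"records": [transform_record(r) for r in event["records"]]}
```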

You can also configure Kinesis Data Firehose to convert incoming JSON data into columnar formats such as Apache Parquet and Apache ORC, as required by some destination data stores, without building your own data processing pipelines.

Data producers for Amazon Kinesis Data Firehose

Kinesis Data Firehose accepts data from the same kinds of producers as Kinesis Data Streams, such as any device or application that uses the AWS SDK, the AWS CLI, or the Kinesis Agent. It can also read directly from a Kinesis data stream.

Kinesis Data Firehose destinations

Amazon Kinesis Data Firehose delivers streaming data in batches to three types of destinations:

- AWS services – Amazon S3, Amazon Redshift (loaded with the COPY command from an intermediate S3 bucket), and Amazon OpenSearch Service

- Third-party platforms – New Relic, Dynatrace, Datadog, Splunk, and others

- Custom HTTP endpoint
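Firehose forms batches by buffering: it delivers when either a size threshold or a time interval is reached, whichever comes first. The following Python model is a simplified, hypothetical illustration of that rule, not Firehose's implementation. The thresholds are example values passed in by the caller, and the interval is checked only when the next record arrives, whereas a real service uses a timer.

```python
def buffer_batches(events, max_bytes, max_seconds):
    """Group (arrival_time_s, size_bytes) events into delivery batches.

    A batch is delivered once it reaches max_bytes, or once max_seconds have
    passed since its first record (checked here when the next record arrives).
    """
    batches, current, current_bytes, opened_at = [], [], 0, None
    for arrival, size in events:
        if current and arrival - opened_at >= max_seconds:
            batches.append(current)  # interval expired: deliver what is buffered
            current, current_bytes = [], 0
        if not current:
            opened_at = arrival      # first record starts the interval clock
        current.append((arrival, size))
        current_bytes += size
        if current_bytes >= max_bytes:
            batches.append(current)  # size threshold reached: deliver immediately
            current, current_bytes = [], 0
    if current:
        batches.append(current)      # leftover records go out when the interval ends
    return batches


arrivals = [(0, 2), (1, 2), (2, 2), (3, 1), (100, 1)]
print([len(b) for b in buffer_batches(arrivals, max_bytes=5, max_seconds=60)])  # → [3, 1, 1]
```

In this example, the first three records fill the size threshold. The record at t=3 then waits until the 60-second interval expires, and the last record is delivered on its own.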

For destinations other than S3, Kinesis Data Firehose also backs up either all data or only the data that failed delivery to an S3 bucket.

If a destination is unavailable, Kinesis Data Firehose retains records for up to 24 hours. If delivery keeps failing beyond that window, the data is lost.

Amazon Kinesis Data Analytics

Amazon Kinesis Data Analytics is a service that allows you to build applications to process and analyze streaming data. It can be used to detect patterns and insights and to take action in real time based on the data that is streaming through your data pipeline.

Kinesis Data Analytics reads streaming data from sources such as Amazon Kinesis Data Streams and Amazon Managed Streaming for Apache Kafka (MSK), allowing you to analyze it in near real time.
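A typical streaming query counts events per key over fixed, non-overlapping (tumbling) windows. The following plain-Python sketch shows the computation such a SQL or Flink query performs. It is not the Kinesis Data Analytics API. It assumes events arrive as (timestamp_seconds, key) pairs and processes a finite list, whereas a real streaming application emits each window's result as the window closes.

```python
from collections import Counter, defaultdict


def tumbling_counts(events, window_seconds):
    """Count events per key in fixed windows aligned to multiples of window_seconds."""
    windows = defaultdict(Counter)
    for ts, key in events:
        window_start = int(ts // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}


clicks = [(0, "home"), (12, "cart"), (59, "home"), (61, "home"), (75, "checkout")]
print(tumbling_counts(clicks, 60))
# → {0: {'home': 2, 'cart': 1}, 60: {'home': 1, 'checkout': 1}}
```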

Kinesis Data Analytics Studio lets you develop applications interactively in Studio notebooks. These notebooks use Apache Zeppelin and Apache Flink in a browser-based IDE for managing code, computation inputs and outputs, visualizations, and labels.

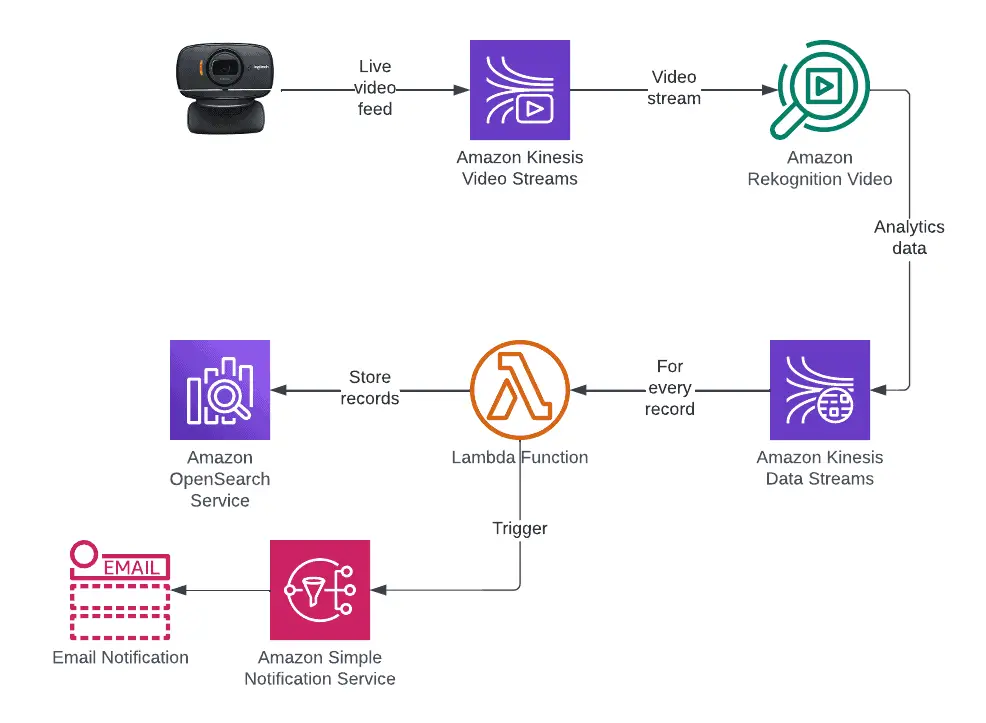

Amazon Kinesis Video Streams

Amazon Kinesis Video Streams is a service that captures, processes, and stores video stream data for real-time analysis and playback. It can power various applications, such as security, marketing, customer service, and IoT.

Kinesis Video Streams provisions and elastically scales all required infrastructure to automatically ingest streaming video data from multiple devices. Kinesis Video Streams makes it easy to securely stream video from various devices to AWS for real-time processing, analytics, and machine learning (ML). Existing business intelligence tools can also consume the extracted video metadata.

Free hands-on AWS workshops

AWS currently offers two free hands-on Kinesis workshops:

- Real-Time Streaming with Kinesis – you build a real-time data streaming and processing application end to end, from capturing data and acting on real-time insights to persisting the processed data.

- Amazon Kinesis Video Streams Workshop – teaches you how to ingest and store video from camera devices, play it back live and on demand, and download video files using Amazon Kinesis Video Streams. You also learn how to run live face recognition and near real-time video analysis with Amazon Rekognition Video.

Summary

In this article, we’ve covered the key concepts of the Amazon Kinesis services and pointed you to free hands-on AWS workshops. If you have any questions or suggestions, let us know in the comments below.