LocalStack is an open-source emulator that runs a fully functional stack of AWS services locally. It provides a local testing environment that exposes the same APIs as AWS, so you can test code that interacts with services such as S3, API Gateway, and DynamoDB. All of these services run on your laptop in Docker containers, without using AWS cloud infrastructure. This article covers installing, configuring, and testing various AWS serverless services such as S3, Lambda functions, DynamoDB, and KMS using LocalStack and the Python Boto3 library.

Prerequisites

To start testing AWS cloud apps and making API calls using the Boto3 library, you must first install the LocalStack CLI.

In general, here’s what you need to have installed to start developing cloud applications:

Alternatively, you can use a Cloud9 IDE. Cloud9 IDE comes with pre-installed AWS CLI, Python, PIP, and Docker.

What is LocalStack?

LocalStack is an AWS cloud service emulator that runs in a single container on your laptop. Using LocalStack, you can test your Lambda functions and other AWS cloud resources on your local laptop without connecting to an AWS cloud infrastructure!

LocalStack supports the deployment of many AWS cloud resources, such as AWS S3, API Gateway, SQS, SNS, Lambda, and many more. For the comprehensive list of services supported by LocalStack, refer here.

LocalStack also offers paid Pro and Enterprise versions. The paid versions support additional service APIs and advanced features such as Lambda Layers, a graphical user interface, and support from the LocalStack team. We will be using the LocalStack Community Edition.

| Benefits of using LocalStack |
|---|
| A fully functional cloud stack. |
| Fast development and testing workflows for cloud and serverless apps offline. |
| Substantial cost savings for testing apps as no cloud infrastructure is required. |

Installation

LocalStack supports several installation methods:

To install LocalStack, we will use Python’s Package Manager, pip:

python3 -m pip install localstack

Note: install LocalStack as a local non-root user (the root user is not required for the installation).

Once installation is complete, you can type the below command to verify the installation of LocalStack.

localstack --help

Usage: localstack [OPTIONS] COMMAND [ARGS]...

The LocalStack Command Line Interface (CLI)

Options:

--version Show the version and exit.

--debug Enable CLI debugging mode

--help Show this message and exit.

...

Connecting to LocalStack

By default, LocalStack provides a cloud developer a Docker container to spin up the AWS emulation environment on your machine. To start the LocalStack, run the below command in Terminal / PowerShell.

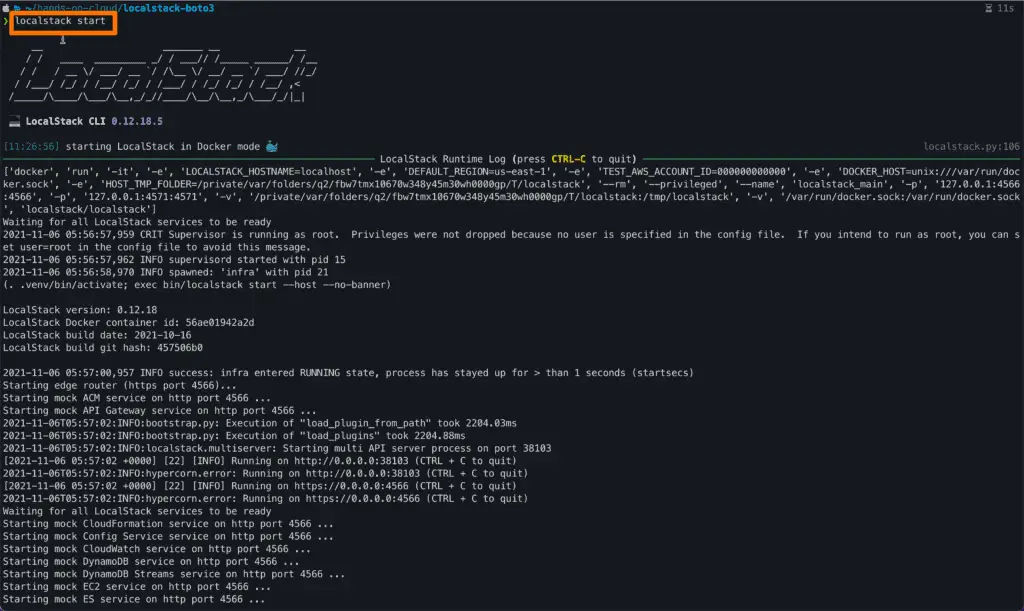

localstack startYou should see similar output as below.

The command starts the container that hosts the LocalStack runtime. As soon as the service is started, you’re ready to start testing your cloud or serverless apps offline without making a real connection to a remote cloud provider.
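Before running any tests, it can help to confirm that the container is actually accepting connections. The following is a minimal sketch, assuming LocalStack listens on localhost at its default port 4566; the helper name is_port_open is hypothetical and not part of LocalStack.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 4566, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("LocalStack reachable:", is_port_open())
```

A successful TCP connection only shows that something is listening on the port; it does not prove that every emulated service has finished starting.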

To stop the running LocalStack container, run the following command:

localstack stop

Configuring Environment

LocalStack comes with integration with several tools and CLIs. To get the complete list of supported integrations, refer here.

This article will use the AWS Command Line Interface (CLI) and the AWS SDK for Python (Boto3) integrations.

Configure Environment Variables

When you start the LocalStack service on your system, it uses a Docker container to run the different services locally. And LocalStack exposes the APIs for these various services on port 4566 by default.

We will store this default endpoint in an environment variable for ease of use in our configurations and use it to connect to LocalStack from the AWS CLI and Boto3.

If you are a Mac / Linux user, open the Terminal, execute the below command, and restart the Terminal. The export keyword makes the variable visible to child processes such as Python scripts. If your shell is zsh (the default on recent macOS versions), append the line to $HOME/.zshrc instead.

echo 'export LOCALSTACK_ENDPOINT_URL="http://localhost:4566"' >> $HOME/.bash_profile

If you are using Windows, open PowerShell, run the below command, and restart PowerShell. The 'User' target persists the variable across sessions.

[System.Environment]::SetEnvironmentVariable('LOCALSTACK_ENDPOINT_URL', 'http://localhost:4566', 'User')

The above commands configure the environment variable LOCALSTACK_ENDPOINT_URL, and you can refer to this variable from now on without repeating its value.
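On the Python side, the variable can be read with a fallback so that scripts still work when it is not set. This is a small sketch; the function name get_localstack_endpoint and the fallback constant are assumptions introduced for illustration.

```python
import os

# Assumed fallback: the default LocalStack endpoint used throughout this article.
DEFAULT_ENDPOINT_URL = "http://localhost:4566"


def get_localstack_endpoint(env=None) -> str:
    """Return LOCALSTACK_ENDPOINT_URL from the environment, or the default."""
    env = os.environ if env is None else env
    # "or" also covers the case where the variable is set but empty
    return env.get("LOCALSTACK_ENDPOINT_URL") or DEFAULT_ENDPOINT_URL


if __name__ == "__main__":
    print(get_localstack_endpoint())
```

Passing a plain dictionary as env makes the lookup easy to exercise in tests without touching the real environment.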

Configure AWS CLI

If you are on Mac/ Linux, Open Terminal. If you are using Windows, open PowerShell and execute the below commands.

aws configure --profile localstack

AWS Access Key ID [None]: test

AWS Secret Access Key [None]: test

Default region name [None]: us-east-1

Default output format [None]:

The above command creates an AWS CLI profile named localstack with the access key and secret key both set to test.

The test value for AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY is required to make pre-signed URLs for S3 buckets work.

To verify LocalStack configuration, execute the below command, which gets a list of the S3 buckets.

aws --profile localstack --endpoint-url=$LOCALSTACK_ENDPOINT_URL s3 ls

--endpoint-url=$LOCALSTACK_ENDPOINT_URL is required to point the AWS CLI API calls to the local LocalStack deployment instead of the AWS cloud infrastructure, and --profile localstack selects the credentials configured above.

For ease of use, we will create an alias for the above command.

- If you are on Mac / Linux, execute the below command to create an alias awsls.

alias awsls="aws --profile localstack --endpoint-url=$LOCALSTACK_ENDPOINT_URL"

- If you are using Windows, execute the below function code in PowerShell, which creates an equivalent awsls function.

function awsls {
    aws --profile localstack --endpoint-url=$Env:LOCALSTACK_ENDPOINT_URL $args
}

Now, you can interact with the various LocalStack services running locally. To test it, let’s create an S3 bucket using the AWS CLI in LocalStack. We will use the awsls alias created above.

awsls s3api create-bucket --bucket hands-on-cloud-localstack-bucket

{
    "Location": "/hands-on-cloud-localstack-bucket"
}

To get a list of the S3 buckets:

awsls s3 ls

2021-10-17 23:52:44 hands-on-cloud-localstack-bucket

Configure Boto3 (AWS SDK for Python)

We will use the Python Boto3 library to interact with LocalStack’s emulated AWS APIs.

The Boto3 library provides two interfaces for working with AWS APIs:

- The client gives you low-level access to the service API. For example, you can access API response data as Python dictionaries that mirror the JSON responses.
- The resource allows you to interact with AWS APIs in a higher-level, object-oriented way.

For more information on the topic, look at AWS CLI vs. botocore vs. Boto3.

Here’s how you can instantiate the Boto3 client to start working with LocalStack services APIs:

import boto3
from botocore.exceptions import ClientError
import os

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

boto3.setup_default_session(profile_name=AWS_PROFILE)

localstack_client = boto3.client("s3", region_name=AWS_REGION,
                                 endpoint_url=ENDPOINT_URL)

Similarly, you can instantiate the Boto3 resource to interact with LocalStack APIs:

import boto3
from botocore.exceptions import ClientError
import os

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

boto3.setup_default_session(profile_name=AWS_PROFILE)

localstack_resource = boto3.resource("s3", region_name=AWS_REGION,
                                     endpoint_url=ENDPOINT_URL)

In the above examples, we have used the setup_default_session() method to configure the profile named localstack that we created earlier.

Also, we have passed region_name and endpoint_url arguments while configuring the Boto3 client and resource, ensuring the API calls made from the Boto3 client and resource objects will refer to the LocalStack services on the local machine, not the AWS infrastructure.
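Because every example repeats the same region and endpoint arguments, they can be collected in one place. The sketch below is one possible arrangement, not the article's method: localstack_kwargs and make_client are hypothetical helper names, and make_client uses a dedicated boto3.Session instead of changing the default session.

```python
import os


def localstack_kwargs(region="us-east-1", endpoint_url=None):
    """Build keyword arguments shared by client and resource constructors."""
    return {
        "region_name": region,
        # Explicit argument first, then the environment, then the assumed default.
        "endpoint_url": endpoint_url
        or os.environ.get("LOCALSTACK_ENDPOINT_URL", "http://localhost:4566"),
    }


def make_client(service, profile="localstack", **overrides):
    """Create a Boto3 client for LocalStack from a dedicated session."""
    import boto3  # imported lazily so localstack_kwargs() works without Boto3

    session = boto3.Session(profile_name=profile)
    return session.client(service, **localstack_kwargs(**overrides))
```

With LocalStack running and the localstack profile configured, make_client("s3") would return a client equivalent to the one shown above.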

Configure AWS SAM

You can use AWS SAM with LocalStack. Check out the official LocalStack AWS SAM documentation page for more information on this topic.

Working with Amazon S3

To work with Amazon S3 services, you need to use the Boto3 library S3 client, and AWS CLI configured to interact with the LocalStack on the local machine. The community version of the LocalStack helps speed up and simplify your testing and development workflow.

Create an S3 bucket

To create the S3 bucket in LocalStack using the Boto3 library, you need to use the client create_bucket() or resource create_bucket() method from the Boto3 library.

import logging
import boto3
from botocore.exceptions import ClientError
import json
import os

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)

s3_client = boto3.client("s3", region_name=AWS_REGION,
                         endpoint_url=ENDPOINT_URL)


def create_bucket(bucket_name):
    """
    Creates an S3 bucket.
    """
    try:
        response = s3_client.create_bucket(
            Bucket=bucket_name)
    except ClientError:
        logger.exception('Could not create S3 bucket locally.')
        raise
    else:
        return response


def main():
    """
    Main invocation function.
    """
    bucket_name = "hands-on-cloud-localstack-bucket"
    logger.info('Creating S3 bucket locally using LocalStack...')
    s3 = create_bucket(bucket_name)
    logger.info('S3 bucket created.')
    logger.info(json.dumps(s3, indent=4) + '\n')


if __name__ == '__main__':
    main()

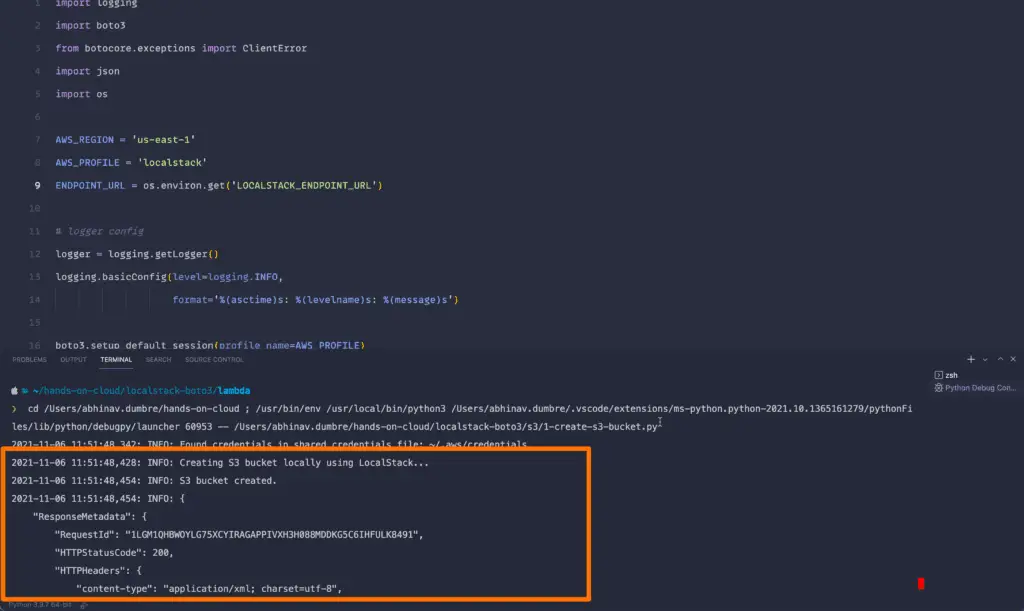

The above example uses the create_bucket() method from the Boto3 client that takes the Bucket name as an argument.
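The example passes only the bucket name because it targets us-east-1. On real AWS, creating a bucket in any other region also requires a CreateBucketConfiguration with a LocationConstraint. A minimal sketch of building the arguments follows; the helper name create_bucket_kwargs is hypothetical, and whether LocalStack enforces the same rule may depend on its version.

```python
def create_bucket_kwargs(bucket_name, region="us-east-1"):
    """Build the keyword arguments for the S3 create_bucket() call."""
    kwargs = {"Bucket": bucket_name}
    if region != "us-east-1":
        # Regions other than us-east-1 must be named explicitly.
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs
```

The result can be unpacked into the call, for example s3_client.create_bucket(**create_bucket_kwargs(bucket_name, AWS_REGION)).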

Here’s the code execution output:

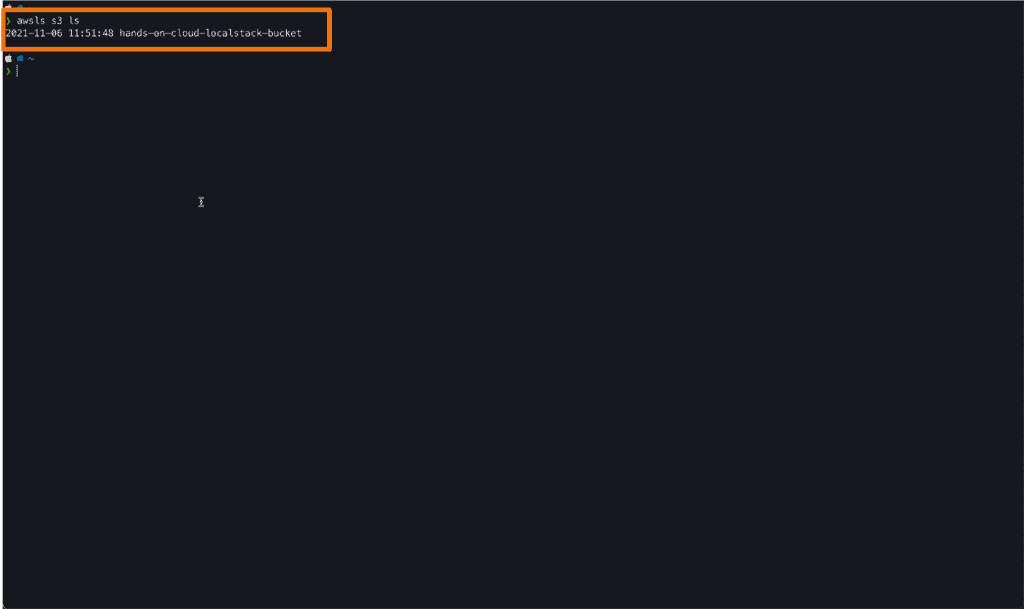

We will use the LocalStack and configured AWS CLI to verify the bucket creation.

If you are on Mac / Linux, open the Terminal; on Windows, open PowerShell. And execute the below command.

awsls s3 ls

Note: awsls is the alias that we created earlier while configuring the AWS CLI for LocalStack. We will continue to use this alias throughout the remaining examples.

Expected output:

List S3 Buckets

To list the S3 buckets, you need to use one of the below two methods.

- list_buckets() method of the client

- all() method of the resource.

import logging
import boto3
from botocore.exceptions import ClientError
import json
import os

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)

s3_resource = boto3.resource("s3", region_name=AWS_REGION,
                             endpoint_url=ENDPOINT_URL)


def list_buckets():
    """
    List S3 buckets.
    """
    try:
        # The collection is lazy; list() triggers the API call here,
        # so a ClientError is caught by this handler.
        response = list(s3_resource.buckets.all())
    except ClientError:
        logger.exception('Could not list S3 bucket from LocalStack.')
        raise
    else:
        return response


def main():
    """
    Main invocation function.
    """
    logger.info('Listing S3 buckets from LocalStack...')
    s3 = list_buckets()
    logger.info('S3 bucket names: ')
    for bucket in s3:
        logger.info(bucket.name)


if __name__ == '__main__':
    main()

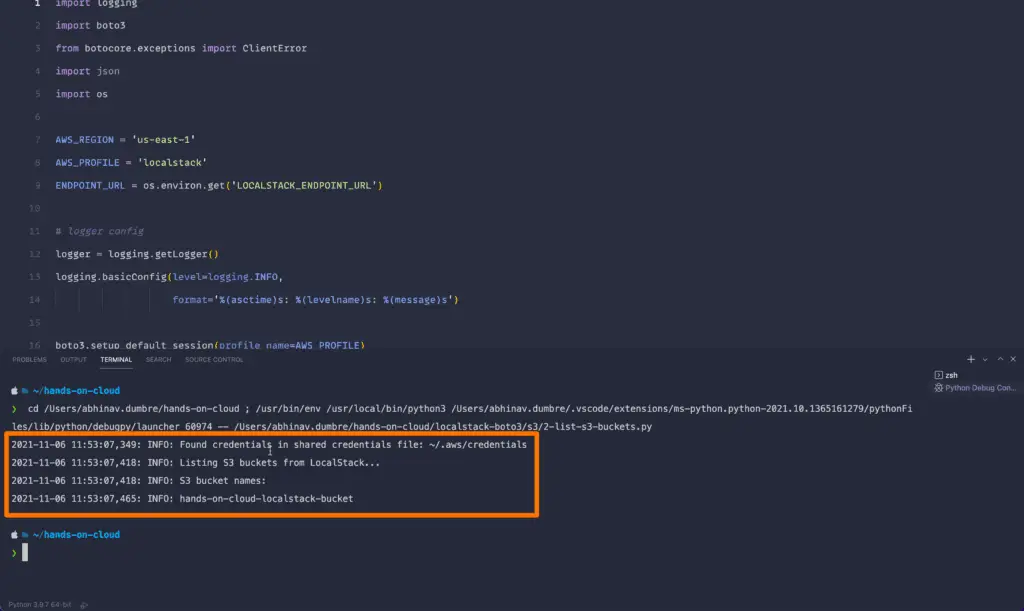

The above example uses the all() method of the Boto3 resource’s buckets collection, which returns an iterable of Bucket objects; the example logs the name of each bucket.

Here’s the code execution output:

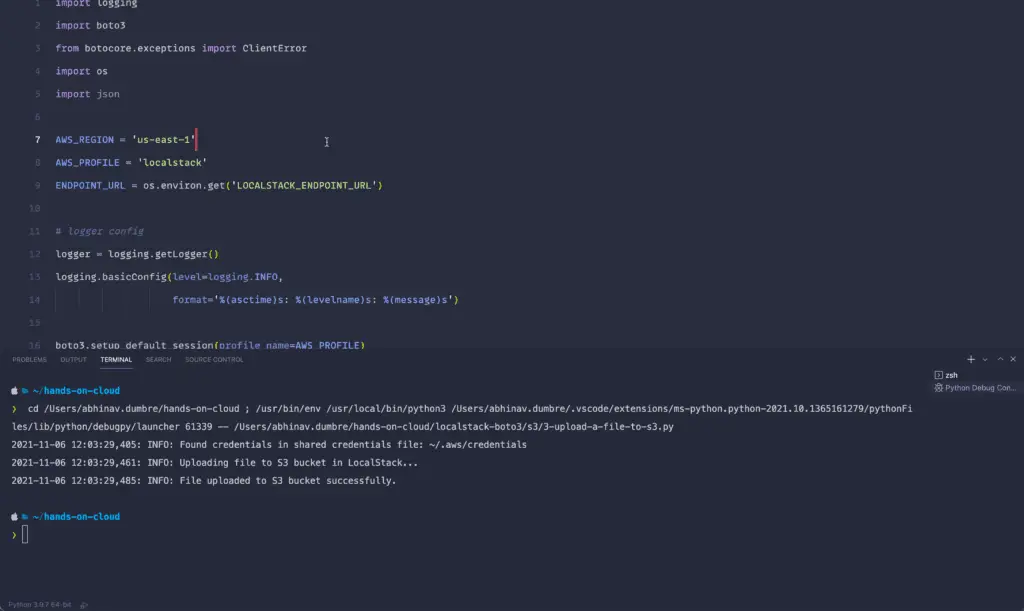

Upload file to S3 Bucket

To upload a file to the S3 bucket, you need to use the upload_file() method from the Boto3 library. The upload_file() method allows you to upload a file from the file system to the S3 bucket.

In addition, the Boto3 library provides the upload_fileobj() method to upload a binary file object to the bucket. (For more details, refer to Working with Files in Python)

import logging
import boto3
from botocore.exceptions import ClientError
import os
import json

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)

s3_client = boto3.client("s3", region_name=AWS_REGION,
                         endpoint_url=ENDPOINT_URL)


def upload_file(file_name, bucket, object_name=None):
    """
    Upload a file to an S3 bucket.
    """
    try:
        if object_name is None:
            object_name = os.path.basename(file_name)
        response = s3_client.upload_file(
            file_name, bucket, object_name)
    except ClientError:
        logger.exception('Could not upload file to S3 bucket.')
        raise
    else:
        return response


def main():
    """
    Main invocation function.
    """
    file_name = '/Users/abhinav.dumbre/hands-on-cloud/localstack-boto3/s3/files/hands-on-cloud.txt'
    object_name = 'hands-on-cloud.txt'
    bucket = 'hands-on-cloud-localstack-bucket'
    logger.info('Uploading file to S3 bucket in LocalStack...')
    s3 = upload_file(file_name, bucket, object_name)
    logger.info('File uploaded to S3 bucket successfully.')


if __name__ == '__main__':
    main()

Required arguments:

- file_name: Specifies the path of the local file to upload.
- bucket: Specifies the name of the S3 bucket.

Optional argument:

- object_name: Specifies the key (name) of the uploaded object; if omitted, it defaults to the base name of file_name.

We’re using the os module to get the file basename from the specified file path. Then, our upload_file() function calls the S3 client to upload the file.
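The object-name fallback described above can be isolated into a few lines. This sketch uses a hypothetical helper name, resolve_object_name, and hypothetical example paths.

```python
import os


def resolve_object_name(file_name, object_name=None):
    """Return the S3 object key: the explicit name, or the file's base name."""
    if object_name is not None:
        return object_name
    return os.path.basename(file_name)


if __name__ == "__main__":
    print(resolve_object_name("/tmp/files/hands-on-cloud.txt"))
```

Passing an explicit object_name such as "docs/hands-on-cloud.txt" stores the object under a key prefix, which S3 consoles typically display as a folder.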

Here’s the code execution output:

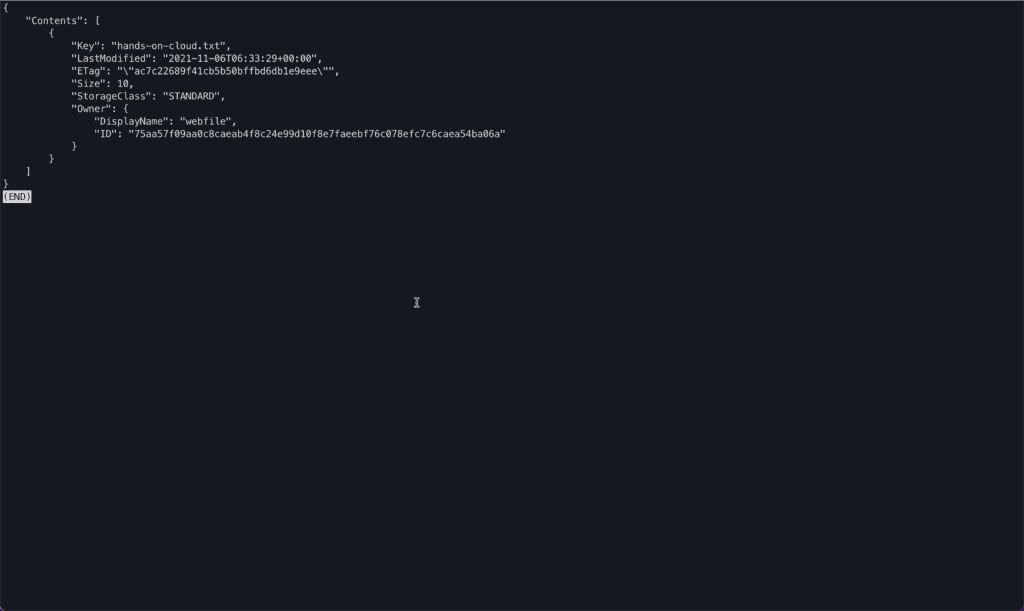

To verify the upload operation, we will use the AWS CLI.

Execute the below command in Terminal/ PowerShell.

awsls s3api list-objects --bucket hands-on-cloud-localstack-bucket

Expected Output:

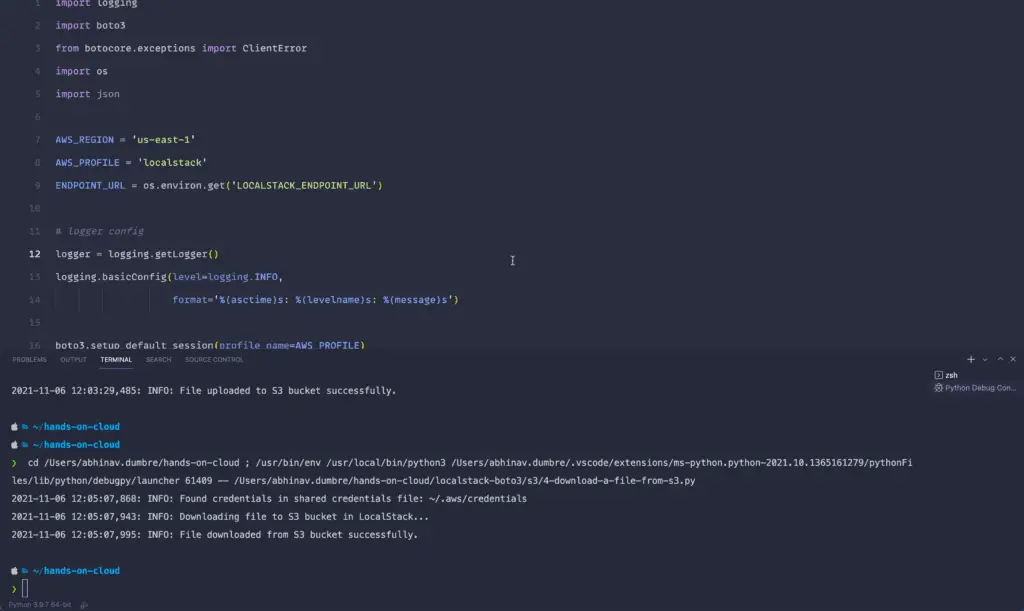

Download file from S3 Bucket

To download a file from the S3 bucket to your local file system, you can use the download_file() method from the Boto3 library.

import logging
import boto3
from botocore.exceptions import ClientError
import os
import json

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)

s3_resource = boto3.resource("s3", region_name=AWS_REGION,
                             endpoint_url=ENDPOINT_URL)


def download_file(file_name, bucket, object_name):
    """
    Download a file from an S3 bucket.
    """
    try:
        response = s3_resource.Bucket(bucket).download_file(object_name, file_name)
    except ClientError:
        logger.exception('Could not download file from S3 bucket.')
        raise
    else:
        return response


def main():
    """
    Main invocation function.
    """
    file_name = '/Users/abhinav.dumbre/hands-on-cloud/localstack-boto3/s3/files/hands-on-cloud-download.txt'
    object_name = 'hands-on-cloud.txt'
    bucket = 'hands-on-cloud-localstack-bucket'
    logger.info('Downloading file from S3 bucket in LocalStack...')
    s3 = download_file(file_name, bucket, object_name)
    logger.info('File downloaded from S3 bucket successfully.')


if __name__ == '__main__':
    main()

Required arguments:

- file_name: Specifies the local path where the downloaded file is saved.
- bucket: Specifies the name of the S3 bucket.
- object_name: Specifies the key (name) of the object to download.

Here’s the code execution output:

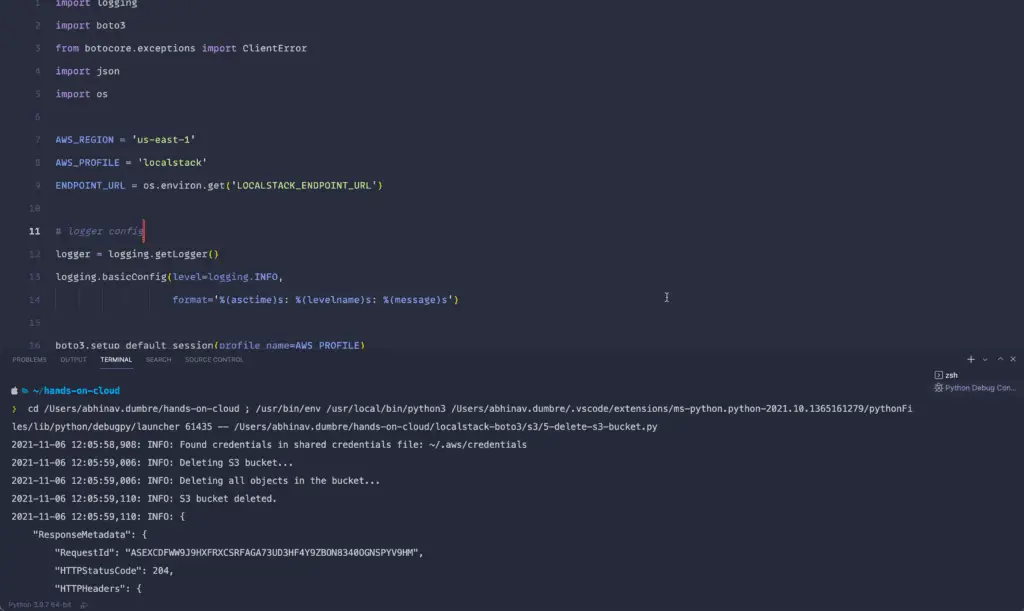

Delete S3 Bucket

To delete an S3 bucket, you can use the delete_bucket() method from the Boto3 library.

Before deleting an S3 bucket, we have to ensure it does not contain any existing objects. To remove all the existing objects from the S3 bucket, you need to use the delete() method from the Boto3 Resource library.

import logging
import boto3
from botocore.exceptions import ClientError
import json
import os

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)

s3_client = boto3.client("s3", region_name=AWS_REGION,
                         endpoint_url=ENDPOINT_URL)
s3_resource = boto3.resource("s3", region_name=AWS_REGION,
                             endpoint_url=ENDPOINT_URL)


def empty_bucket(bucket_name):
    """
    Deletes all objects in the bucket.
    """
    try:
        logger.info('Deleting all objects in the bucket...')
        bucket = s3_resource.Bucket(bucket_name)
        response = bucket.objects.all().delete()
    except ClientError:
        logger.exception('Could not delete all S3 bucket objects.')
        raise
    else:
        return response


def delete_bucket(bucket_name):
    """
    Deletes an S3 bucket.
    """
    try:
        # remove all objects from the bucket before deleting the bucket
        empty_bucket(bucket_name)
        # delete bucket
        response = s3_client.delete_bucket(
            Bucket=bucket_name)
    except ClientError:
        logger.exception('Could not delete S3 bucket locally.')
        raise
    else:
        return response


def main():
    """
    Main invocation function.
    """
    bucket_name = "hands-on-cloud-localstack-bucket"
    logger.info('Deleting S3 bucket...')
    s3 = delete_bucket(bucket_name)
    logger.info('S3 bucket deleted.')
    logger.info(json.dumps(s3, indent=4) + '\n')


if __name__ == '__main__':
    main()

Required argument:

- bucket_name: Specifies the name of the S3 bucket.
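If you prefer to empty the bucket with the low-level client instead of the resource collection, the keys have to be sent in batches, because the S3 DeleteObjects API accepts at most 1000 keys per request. The sketch below only builds the request payloads; build_delete_batches is a hypothetical helper name.

```python
def build_delete_batches(keys, batch_size=1000):
    """Split object keys into payloads for the client's delete_objects() call."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    keys = list(keys)
    return [
        {
            "Objects": [{"Key": key} for key in keys[start:start + batch_size]],
            "Quiet": True,  # ask S3 to report only failed deletions
        }
        for start in range(0, len(keys), batch_size)
    ]
```

Each payload would then be passed as the Delete argument, for example s3_client.delete_objects(Bucket=bucket_name, Delete=batch).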

Here’s the code execution output:

Working with AWS Lambda

AWS Lambda is a service for developers who want to host and run their code without setting up and managing servers. Lambda provides a simple API with which you can upload your code and create functions that respond to events and requests.

In the following sections, we will explore the Lambda service and learn how to upload and test your serverless application using the LocalStack on your local machine.

We will use the Python pytest module to invoke and test the Lambda functions. You need to install pytest before running the tests against Lambdas. Use the below command to install it using the Python package manager:

pip install pytest

To begin with, let’s take a look at the basic Lambda function code, saved as lambda.py. Upon invocation, this function returns a simple JSON-formatted response.

import logging

LOGGER = logging.getLogger()
LOGGER.setLevel(logging.INFO)


def handler(event, context):
    # log through the configured LOGGER instance
    LOGGER.info('Hands-on-cloud')
    return {
        "message": "Hello User!"
    }

The main functions for creating the .zip file to upload to LocalStack, creating Lambda, invoking Lambda, and deleting the Lambda function are defined in main_utils.py

import os
import logging
import json
from zipfile import ZipFile

import boto3

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')
LAMBDA_ZIP = './function.zip'

boto3.setup_default_session(profile_name=AWS_PROFILE)

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')


def get_boto3_client(service):
    """
    Initialize Boto3 client for the given service.
    """
    try:
        lambda_client = boto3.client(
            service,
            region_name=AWS_REGION,
            endpoint_url=ENDPOINT_URL
        )
    except Exception as e:
        logger.exception('Error while connecting to LocalStack.')
        raise e
    else:
        return lambda_client


def create_lambda_zip(function_name):
    """
    Generate ZIP file for lambda function.
    """
    try:
        with ZipFile(LAMBDA_ZIP, 'w') as zip:
            zip.write(function_name + '.py')
    except Exception as e:
        logger.exception('Error while creating ZIP file.')
        raise e


def create_lambda(function_name):
    """
    Creates a Lambda function in LocalStack.
    """
    try:
        lambda_client = get_boto3_client('lambda')
        # create zip file for lambda function.
        _ = create_lambda_zip(function_name)
        with open(LAMBDA_ZIP, 'rb') as f:
            zipped_code = f.read()
        lambda_client.create_function(
            FunctionName=function_name,
            Runtime='python3.8',
            Role='role',
            Handler=function_name + '.handler',
            Code=dict(ZipFile=zipped_code)
        )
    except Exception as e:
        logger.exception('Error while creating function.')
        raise e


def delete_lambda(function_name):
    """
    Deletes the specified lambda function.
    """
    try:
        lambda_client = get_boto3_client('lambda')
        lambda_client.delete_function(
            FunctionName=function_name
        )
        # remove the lambda function zip file
        os.remove(LAMBDA_ZIP)
    except Exception as e:
        logger.exception('Error while deleting lambda function')
        raise e


def invoke_function(function_name):
    """
    Invokes the specified function and returns the result.
    """
    try:
        lambda_client = get_boto3_client('lambda')
        response = lambda_client.invoke(
            FunctionName=function_name)
        return json.loads(
            response['Payload']
            .read()
            .decode('utf-8')
        )
    except Exception as e:
        logger.exception('Error while invoking function')
        raise e
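The packaging step in create_lambda_zip() writes function.zip to disk and reads it back. As an alternative, the archive can be built entirely in memory. This is a minimal sketch using only the standard library; zip_source_in_memory is a hypothetical helper name, and the explicit 0o644 permission bits are a precaution rather than a documented LocalStack requirement.

```python
import io
import zipfile


def zip_source_in_memory(file_name, source_code):
    """Return the bytes of a ZIP archive containing a single source file."""
    buffer = io.BytesIO()
    info = zipfile.ZipInfo(file_name)
    # Mark the entry as readable by all users (rw-r--r--).
    info.external_attr = 0o644 << 16
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr(info, source_code, compress_type=zipfile.ZIP_DEFLATED)
    return buffer.getvalue()
```

The returned bytes can be passed to create_function() in the same way as zipped_code above, that is, as Code=dict(ZipFile=...).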

Now, let’s define the test cases for pytest in test_lambda.py.

import main_utils
import unittest

unittest.TestLoader.sortTestMethodsUsing = None


class Test(unittest.TestCase):

    def test_a_setup_class(self):
        print('\r\nCreating the lambda function...')
        main_utils.create_lambda('lambda')

    def test_b_invoke_function_and_response(self):
        print('\r\nInvoking the lambda function...')
        payload = main_utils.invoke_function('lambda')
        self.assertEqual(payload['message'], 'Hello User!')

    def test_c_teardown_class(self):
        print('\r\nDeleting the lambda function...')
        main_utils.delete_lambda('lambda')

The above test file contains lambda creation, lambda invocation, and lambda delete test cases.
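The test file relies on the alphabetical order of the method names (test_a, test_b, test_c) to create the function before invoking it. An alternative that does not depend on ordering is to move creation and deletion into setUpClass() and tearDownClass(). The sketch below shows the pattern with a hypothetical in-memory stand-in, FakeLambdaBackend, so that it runs without LocalStack; in the real test the three calls would go to main_utils.

```python
import unittest


class FakeLambdaBackend:
    """Hypothetical in-memory stand-in for the main_utils helpers."""

    def __init__(self):
        self.functions = {}

    def create_lambda(self, name):
        self.functions[name] = lambda: {"message": "Hello User!"}

    def invoke_function(self, name):
        return self.functions[name]()

    def delete_lambda(self, name):
        del self.functions[name]


class LambdaLifecycleTest(unittest.TestCase):
    backend = FakeLambdaBackend()

    @classmethod
    def setUpClass(cls):
        # Runs once before any test method in the class.
        cls.backend.create_lambda("lambda")

    @classmethod
    def tearDownClass(cls):
        # Runs once after all test methods, even if some of them failed.
        cls.backend.delete_lambda("lambda")

    def test_invoke_returns_greeting(self):
        payload = self.backend.invoke_function("lambda")
        self.assertEqual(payload["message"], "Hello User!")
```

pytest collects unittest.TestCase classes, so a file written this way can still be run with the same pytest command.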

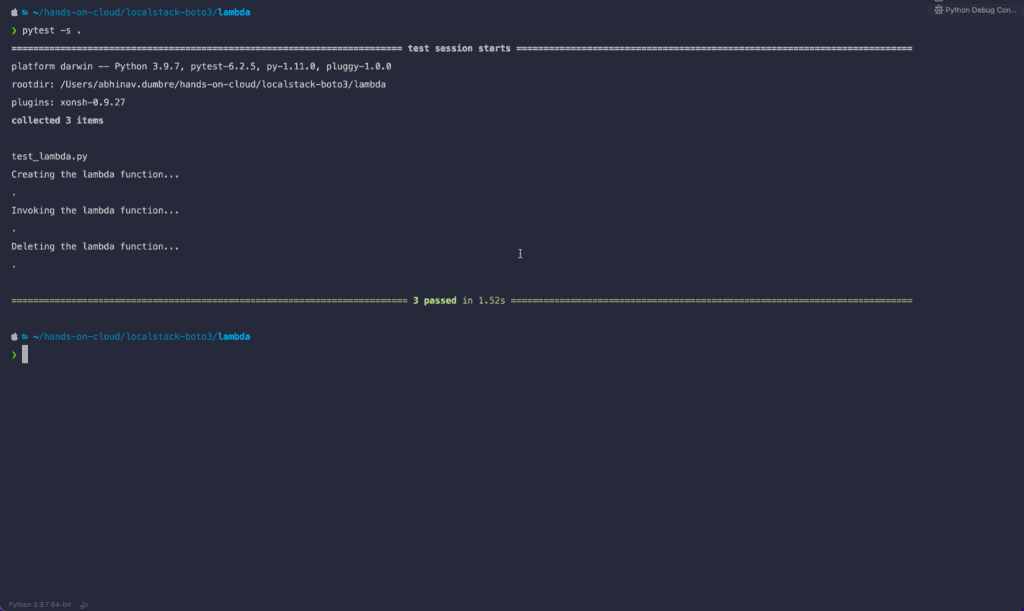

Let’s run the test cases using pytest. Open your Terminal/ PowerShell, and execute the below command.

pytest -s .You should see similar output as below.

You’ve successfully run your first test against LocalStack, and executed it successfully!

Testing AWS Lambda function interacting with S3

To interact with S3 buckets from a Lambda function running in LocalStack, let’s update lambda.py and extend the previous main_utils.py with functions that:

- Create an S3 bucket.

- Upload an object to the bucket.

- List all the objects from the bucket.

- Delete all the objects from the bucket.

- Delete the bucket.

First, let’s make the following changes to the lambda.py

import logging
import os

import boto3

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

AWS_REGION = os.environ.get('AWS_REGION', 'us-east-1')
LOCALSTACK = os.environ.get('LOCALSTACK', None)
LOCALSTACK_ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL', 'http://host.docker.internal:4566')


def configure_aws_env():
    # use dummy credentials only when running against LocalStack
    if LOCALSTACK:
        os.environ['AWS_ACCESS_KEY_ID'] = 'test'
        os.environ['AWS_SECRET_ACCESS_KEY'] = 'test'
        os.environ['AWS_DEFAULT_REGION'] = AWS_REGION
    boto3.setup_default_session()


def get_boto3_client(service):
    """
    Initialize Boto3 client.
    """
    try:
        configure_aws_env()
        boto3_client = boto3.client(
            service,
            region_name=AWS_REGION,
            endpoint_url=LOCALSTACK_ENDPOINT_URL
        )
    except Exception as e:
        logger.exception('Error while connecting to LocalStack.')
        raise e
    else:
        return boto3_client


def handler(event, context):
    s3_client = get_boto3_client('s3')
    logger.info('Uploading an object to the localstack s3 bucket...')
    bucket_name = 'hands-on-cloud-bucket'
    object_key = 'hands-on-cloud.txt'
    s3_client.put_object(
        Bucket=bucket_name,
        Key=object_key,
        Body='localstack-boto3-python'
    )
    return {
        "message": "Object uploaded to S3."
    }

The above function uploads the test object to the bucket and returns a success response upon upload completion.

We’re using the LOCALSTACK environment variable in the Lambda function code to seamlessly run in the real and AWS-emulated environment.

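One noticeable change in the above code is the default value of LOCALSTACK_ENDPOINT_URL: instead of http://localhost:4566, the Lambda function uses http://host.docker.internal:4566.

This change enables the Lambda function to reach LocalStack. When the function runs inside a Docker container, localhost refers to that container itself, while host.docker.internal resolves to the host machine where the LocalStack port is published.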
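To make the same handler usable both against LocalStack and against real AWS, the endpoint can be chosen from the LOCALSTACK flag. The following is a sketch of that idea, not the article's code: resolve_endpoint_url is a hypothetical helper that returns None outside LocalStack, and Boto3 falls back to the regular AWS endpoint when endpoint_url is None.

```python
import os

# Assumed default for code running inside a Docker container.
DOCKER_HOST_ENDPOINT = "http://host.docker.internal:4566"


def resolve_endpoint_url(env=None):
    """Return the LocalStack endpoint when LOCALSTACK is set, else None."""
    env = os.environ if env is None else env
    if not env.get("LOCALSTACK"):
        return None  # let Boto3 use the real AWS endpoint
    return env.get("LOCALSTACK_ENDPOINT_URL", DOCKER_HOST_ENDPOINT)
```

The result can be passed directly as endpoint_url when creating the client, for example boto3.client('s3', endpoint_url=resolve_endpoint_url()).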

Let’s append the following functions to the main_utils.py, which creates and deletes the S3 bucket.

import glob
import os
import logging
import json
from zipfile import ZipFile

import boto3

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
LAMBDA_ZIP = './function.zip'
LOCALSTACK_ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL', 'http://localhost:4566')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)


def get_boto3_client(service):
    """
    Initialize Boto3 client.
    """
    try:
        return boto3.client(
            service,
            region_name=AWS_REGION,
            endpoint_url=LOCALSTACK_ENDPOINT_URL
        )
    except Exception as e:
        logger.exception('Error while connecting to LocalStack.')
        raise e


def get_boto3_resource(service):
    """
    Initialize Boto3 resource.
    """
    try:
        return boto3.resource(
            service,
            region_name=AWS_REGION,
            endpoint_url=LOCALSTACK_ENDPOINT_URL
        )
    except Exception as e:
        logger.exception('Error while connecting to LocalStack.')
        raise e

def create_lambda_zip(function_name):
    """
    Generate ZIP file for lambda function.
    """
    try:
        with ZipFile(LAMBDA_ZIP, 'w') as zip:
            py_files = glob.glob('*.py')
            for f in py_files:
                zip.write(f)
    except Exception as e:
        logger.exception('Error while creating ZIP file.')
        raise e


def create_lambda(function_name, **kw_args):
    """
    Creates a Lambda function in LocalStack.
    """
    try:
        lambda_client = get_boto3_client('lambda')
        # create zip file for lambda function.
        _ = create_lambda_zip(function_name)
        with open(LAMBDA_ZIP, 'rb') as f:
            zipped_code = f.read()
        lambda_client.create_function(
            FunctionName=function_name,
            Runtime='python3.8',
            Role='role',
            Handler=function_name + '.handler',
            Code=dict(ZipFile=zipped_code),
            **kw_args
        )
    except Exception as e:
        logger.exception('Error while creating function.')
        raise e


def delete_lambda(function_name):
    """
    Deletes the specified lambda function.
    """
    try:
        lambda_client = get_boto3_client('lambda')
        lambda_client.delete_function(
            FunctionName=function_name
        )
        # remove the lambda function zip file
        os.remove(LAMBDA_ZIP)
    except Exception as e:
        logger.exception('Error while deleting lambda function')
        raise e


def invoke_function(function_name):
    """
    Invokes the specified function and returns the result.
    """
    try:
        lambda_client = get_boto3_client('lambda')
        response = lambda_client.invoke(
            FunctionName=function_name)
        return (response['Payload']
                .read()
                .decode('utf-8')
                )
    except Exception as e:
        logger.exception('Error while invoking function')
        raise e

def create_bucket(bucket_name):
    """
    Create an S3 bucket.
    """
    try:
        s3_client = get_boto3_client('s3')
        s3_client.create_bucket(
            Bucket=bucket_name
        )
    except Exception as e:
        logger.exception('Error while creating s3 bucket')
        raise e


def s3_upload_file(bucket_name, object_name, content):
    """
    Uploads a file object with specific content to S3
    """
    try:
        s3_client = get_boto3_client('s3')
        s3_client.put_object(
            Bucket=bucket_name,
            Key=object_name,
            Body=content
        )
    except Exception as e:
        logger.exception('Error while uploading s3 object')
        raise e


def list_s3_bucket_objects(bucket_name):
    """
    List the keys of all objects in an S3 bucket.
    """
    try:
        s3_resource = get_boto3_resource('s3')
        return [
            obj.key for obj in s3_resource.Bucket(bucket_name).objects.all()
        ]
    except Exception as e:
        logger.exception('Error while listing s3 bucket objects')
        raise e


def delete_bucket(bucket_name):
    """
    Delete an S3 bucket.
    """
    try:
        s3_resource = get_boto3_resource('s3')
        # empty the bucket before deleting
        s3_resource.Bucket(bucket_name).objects.all().delete()
        s3_resource.Bucket(bucket_name).delete()
    except Exception as e:
        logger.exception('Error while deleting s3 bucket')
        raise e

And finally, let’s add the test cases to the test_lambda.py.

import main_utils

import unittest

unittest.TestLoader.sortTestMethodsUsing = None

class Test(unittest.TestCase):

def test_a_setup_class(self):

print('\r\nCreate test case...')

args = {

'Environment': {

'Variables': {

'LOCALSTACK': 'True'

}

}

}

main_utils.create_lambda('lambda', **args)

main_utils.create_bucket('hands-on-cloud-bucket')

def test_b_invoke_function_and_response(self):

print('\r\nInvoke test case...')

payload = main_utils.invoke_function('lambda')

bucket_objects = main_utils.list_s3_bucket_objects('hands-on-cloud-bucket')

self.assertEqual(bucket_objects, ['hands-on-cloud.txt'])

def test_c_teardown_class(self):

print('\r\nDelete test case...')

main_utils.delete_lambda('lambda')

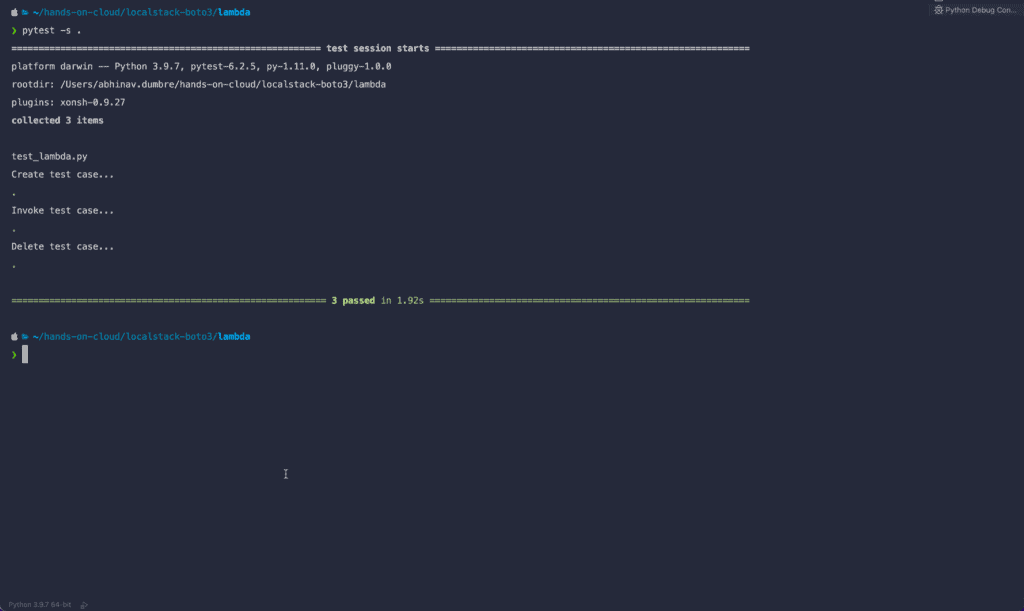

main_utils.delete_bucket('hands-on-cloud-bucket')Lastly, execute the following command to test the Lambda code interacting with the S3 bucket.

pytest -s .Expected output:

Working with DynamoDB

Amazon DynamoDB is a fully managed NoSQL database that provides predictable performance and scales automatically on demand. It supports key-value and document data models. Reads are eventually consistent by default, and strongly consistent reads are available as an option. Amazon DynamoDB handles the heavy lifting so that developers can focus on their applications, not on managing databases.

Create a DynamoDB Table

To create a DynamoDB table, you need to use the create_table() method from the Boto3 library.

import json
import logging
from datetime import date, datetime
import os

import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)

dynamodb_client = boto3.client(
    "dynamodb", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)


def json_datetime_serializer(obj):
    """
    Helper method to serialize datetime fields
    """
    if isinstance(obj, (datetime, date)):
        return obj.isoformat()
    raise TypeError("Type %s not serializable" % type(obj))


def create_dynamodb_table(table_name):
    """
    Creates a DynamoDB table.
    """
    try:
        response = dynamodb_client.create_table(
            TableName=table_name,
            KeySchema=[
                {
                    'AttributeName': 'Name',
                    'KeyType': 'HASH'
                },
                {
                    'AttributeName': 'Email',
                    'KeyType': 'RANGE'
                }
            ],
            AttributeDefinitions=[
                {
                    'AttributeName': 'Name',
                    'AttributeType': 'S'
                },
                {
                    'AttributeName': 'Email',
                    'AttributeType': 'S'
                }
            ],
            ProvisionedThroughput={
                'ReadCapacityUnits': 1,
                'WriteCapacityUnits': 1
            },
            Tags=[
                {
                    'Key': 'Name',
                    'Value': 'hands-on-cloud-dynamodb-table'
                }
            ])
    except ClientError:
        logger.exception('Could not create the table.')
        raise
    else:
        return response


def main():
    """
    Main invocation function.
    """
    table_name = 'hands-on-cloud-dynamodb-table'
    logger.info('Creating a DynamoDB table...')
    dynamodb = create_dynamodb_table(table_name)
    logger.info(
        f'DynamoDB table created: {json.dumps(dynamodb, indent=4, default=json_datetime_serializer)}')


if __name__ == '__main__':
    main()

Required arguments are:

- AttributeDefinitions: Specifies a list of attributes that describe the key schema for the table and indexes.
- TableName: Specifies the name of the table to create.
- KeySchema: Specifies the attributes that make up the primary key for a table or an index.
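KeySchema and AttributeDefinitions have to agree: every key attribute needs a matching attribute definition, and a table has exactly one HASH key and at most one RANGE key. The sketch below checks this locally before calling create_table(); validate_key_schema is a hypothetical helper and only covers the table's primary key, not secondary indexes.

```python
def validate_key_schema(key_schema, attribute_definitions):
    """Return a list of problems found in a table's primary key definition."""
    defined = {attr["AttributeName"] for attr in attribute_definitions}
    key_types = [key["KeyType"] for key in key_schema]
    errors = []
    if key_types.count("HASH") != 1:
        errors.append("KeySchema must contain exactly one HASH key")
    if key_types.count("RANGE") > 1:
        errors.append("KeySchema may contain at most one RANGE key")
    for key in key_schema:
        if key["AttributeName"] not in defined:
            errors.append(
                f"Key attribute {key['AttributeName']!r} is missing from AttributeDefinitions"
            )
    return errors
```

An empty list means the two arguments are consistent with each other; DynamoDB itself remains the final authority on whether the request is valid.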

The optional arguments we have used in the above example:

- Tags: Specifies the resource tags.

Also, we’re using an additional json_datetime_serializer() helper function to serialize (convert to string) the datetime.datetime fields returned by the create_table() method.
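The effect of the serializer is easiest to see on a small dictionary. In this sketch the sample dictionary is hypothetical and only imitates the shape of a response that contains a datetime field.

```python
import json
from datetime import date, datetime


def json_datetime_serializer(obj):
    """Convert date and datetime objects to ISO 8601 strings for json.dumps()."""
    if isinstance(obj, (datetime, date)):
        return obj.isoformat()
    raise TypeError("Type %s not serializable" % type(obj))


# Hypothetical sample imitating a response with a datetime field.
sample = {"TableName": "demo", "CreationDateTime": datetime(2021, 10, 17, 23, 52, 44)}

print(json.dumps(sample, default=json_datetime_serializer))
# prints {"TableName": "demo", "CreationDateTime": "2021-10-17T23:52:44"}
```

Without the default argument, json.dumps() raises a TypeError as soon as it reaches the datetime value.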

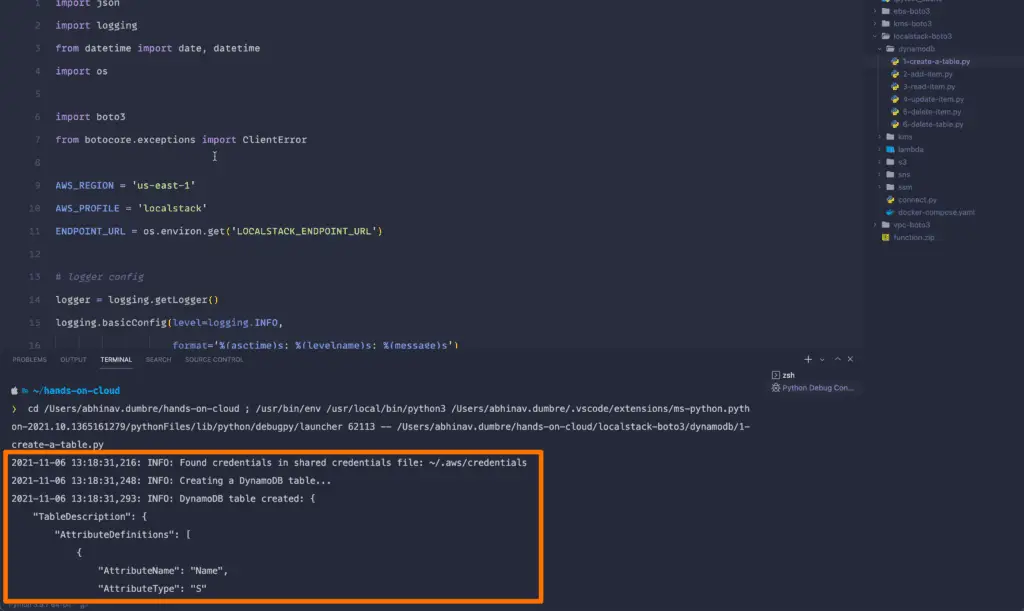

Here is the code execution output:

To verify the DynamoDB table status from the Command line, execute the below command from Terminal/ PowerShell.

awsls dynamodb describe-table --table-name hands-on-cloud-dynamodb-table

Expected output:

Performing CRUD Operations on DynamoDB Table

The following sections explain how to perform the four basic Create, Read, Update, and Delete (CRUD) operations on items in DynamoDB tables.

Create Items

To add a new item to a table, you need to use the put_item() method from the Boto3 library.

The below example adds an item with Name and Email keys.

import json
import logging
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
dynamodb_resource = boto3.resource(
    "dynamodb", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def add_dynamodb_table_item(table_name, name, email):
    """
    Adds an item to a DynamoDB table.
    """
    try:
        table = dynamodb_resource.Table(table_name)
        response = table.put_item(
            Item={
                'Name': name,
                'Email': email
            }
        )
    except ClientError:
        logger.exception('Could not add the item to table.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    table_name = 'hands-on-cloud-dynamodb-table'
    name = 'hands-on-cloud'
    email = 'example@cloud.com'
    logger.info('Adding item...')
    dynamodb = add_dynamodb_table_item(table_name, name, email)
    logger.info(
        f'DynamoDB table item created: {json.dumps(dynamodb, indent=4)}')

if __name__ == '__main__':
    main()

Required arguments are:

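- TableName: Specifies the name of the table to contain the item. With the resource API used here, the name is passed to dynamodb_resource.Table() rather than to put_item().

- Item: Specifies a dictionary of attribute name/value pairs, one for each attribute.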
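By default, put_item() replaces any existing item that has the same primary key. If that is not what you want, a condition expression can guard the write. The sketch below is one possible way to do it; the function name add_item_if_absent is our own, and the table argument is assumed to be a Boto3 Table resource such as dynamodb_resource.Table('hands-on-cloud-dynamodb-table').

```python
def add_item_if_absent(table, name, email):
    """
    Put an item only when no item with the same primary key exists.

    `table` is assumed to be a boto3 DynamoDB Table resource.
    """
    return table.put_item(
        Item={"Name": name, "Email": email},
        # "Name" is a DynamoDB reserved word, so the expression refers to it
        # through the placeholder #n instead of writing it directly.
        ConditionExpression="attribute_not_exists(#n)",
        ExpressionAttributeNames={"#n": "Name"},
    )
```

When the condition fails, Boto3 raises a ClientError whose error code is ConditionalCheckFailedException, so the existing except ClientError handler pattern from the example above still applies.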

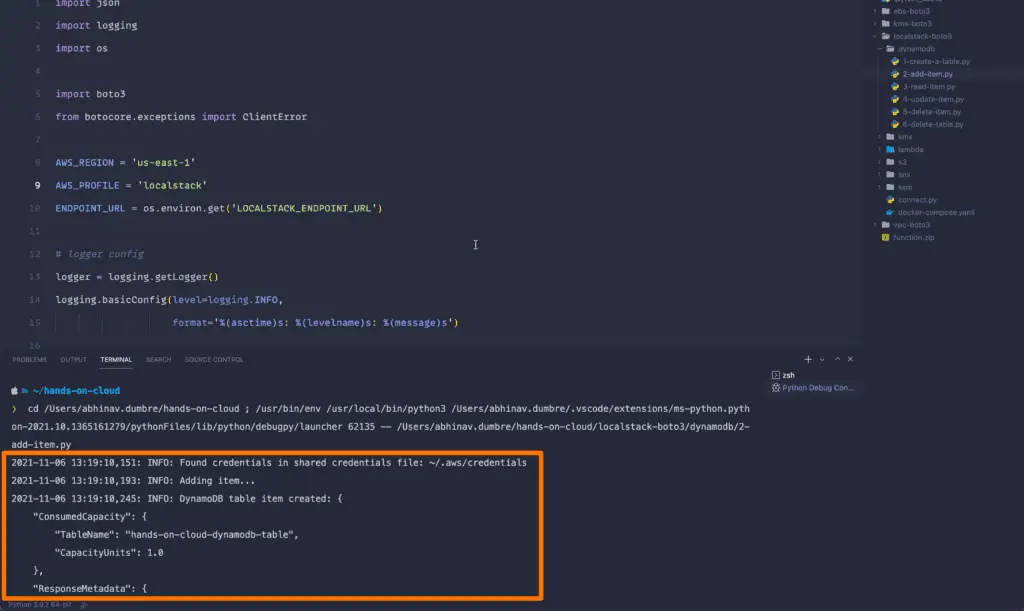

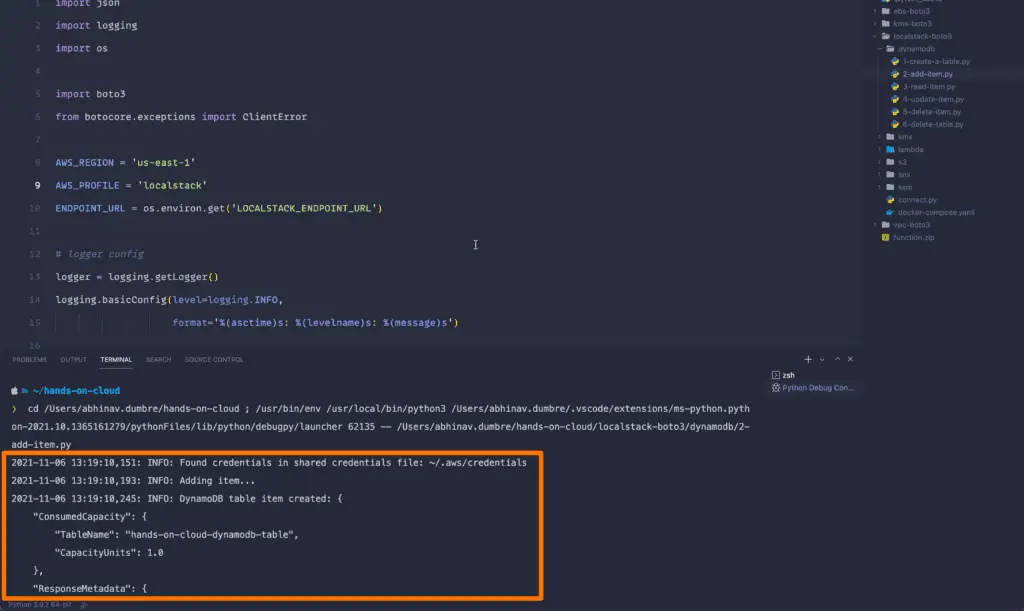

Here is the Execution output:

Read Items

To read an item from the hands-on-cloud-dynamodb-table table, use the get_item() method. You must specify the full primary key of the item; for this table, that means both the Name (partition key) and Email (sort key) values.

You have previously added the following item to the table.

{
    'Name': 'hands-on-cloud',
    'Email': 'example@cloud.com'
}

The example below reads this item back by its primary key:

import json
import logging
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
dynamodb_resource = boto3.resource(
    "dynamodb", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def read_dynamodb_table_item(table_name, name, email):
    """
    Reads an item from a DynamoDB table.
    """
    try:
        table = dynamodb_resource.Table(table_name)
        response = table.get_item(
            Key={
                'Name': name,
                'Email': email
            }
        )
    except ClientError:
        logger.exception('Could not read the item from table.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    table_name = 'hands-on-cloud-dynamodb-table'
    name = 'hands-on-cloud'
    email = 'example@cloud.com'
    logger.info('Reading item...')
    dynamodb = read_dynamodb_table_item(table_name, name, email)
    logger.info(
        f'Item details: {json.dumps(dynamodb, indent=4)}')

if __name__ == '__main__':
    main()

Required arguments are:

- TableName: Specifies the name of the table containing the requested item.

- Key: A dictionary of attribute names to AttributeValue objects, specifying the primary key of the item to retrieve.
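Note that get_item() does not raise an error when no item matches the key; the response simply has no Item entry. A small wrapper can make that case explicit. This is a sketch only: get_item_or_none is a hypothetical helper name, and table is assumed to be a Boto3 Table resource.

```python
def get_item_or_none(table, name, email):
    """
    Return the stored item as a dict, or None when the key does not exist.

    `table` is assumed to be a boto3 DynamoDB Table resource.
    """
    response = table.get_item(Key={"Name": name, "Email": email})
    # get_item() omits the "Item" key entirely when nothing matches.
    return response.get("Item")
```

Returning None keeps the calling code simple: it can test the result directly instead of inspecting the raw response.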

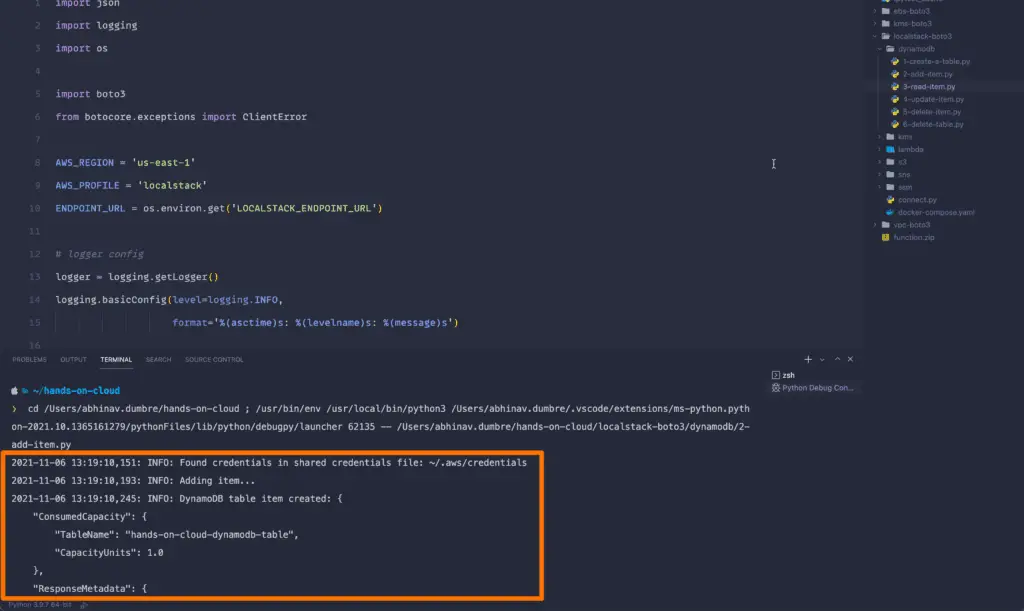

Here is the Execution output:

Update Items

When you need to change an existing item, use the update_item() method.

In this example,

- You identify the item by its primary key (Name and Email). Key attributes cannot be changed by update_item().

- You add a new attribute to the item: phone_number.

import json
import logging
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
dynamodb_resource = boto3.resource(
    "dynamodb", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def update_dynamodb_table_item(table_name, name, email, phone_number):
    """
    Updates the DynamoDB table item.
    """
    try:
        table = dynamodb_resource.Table(table_name)
        response = table.update_item(
            Key={
                'Name': name,
                'Email': email
            },
            # set (or overwrite) the phone_number attribute
            UpdateExpression="set phone_number=:ph",
            ExpressionAttributeValues={
                ':ph': phone_number
            }
        )
    except ClientError:
        logger.exception('Could not update the item.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    table_name = 'hands-on-cloud-dynamodb-table'
    name = 'hands-on-cloud'
    email = 'example@cloud.com'
    phone_number = '123-456-1234'
    logger.info('Updating item...')
    dynamodb = update_dynamodb_table_item(
        table_name, name, email, phone_number)
    logger.info(
        f'Item details: {json.dumps(dynamodb, indent=4)}')

if __name__ == '__main__':
    main()

Required arguments are:

- TableName: Specifies the name of the table containing the item to update.

- Key: A dictionary of attribute names to AttributeValue objects, specifying the primary key of the item to update.

The optional arguments used in the above example are:

- UpdateExpression: Specifies an expression that describes one or more attributes to be updated, the action to be performed on them, and updated values for them.

- ExpressionAttributeValues: Specifies one or more values that you can substitute in an expression.
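When several attributes need to change at once, writing the expression by hand gets error-prone. The sketch below shows one way to generate the update_item() arguments from a plain dictionary. The helper name build_set_expression and the placeholder scheme (#a0, :v0, and so on) are our own choices, not part of Boto3.

```python
def build_set_expression(attributes):
    """
    Build update_item() keyword arguments that SET the given attributes.

    `attributes` maps attribute names to their new values. Name and value
    placeholders keep the expression safe from DynamoDB reserved words.
    """
    if not attributes:
        raise ValueError("At least one attribute is required.")

    clauses = []
    names = {}
    values = {}
    for index, (attr_name, attr_value) in enumerate(attributes.items()):
        name_placeholder = f"#a{index}"
        value_placeholder = f":v{index}"
        clauses.append(f"{name_placeholder} = {value_placeholder}")
        names[name_placeholder] = attr_name
        values[value_placeholder] = attr_value

    return {
        "UpdateExpression": "SET " + ", ".join(clauses),
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
        # Ask DynamoDB to return only the attributes that were changed.
        "ReturnValues": "UPDATED_NEW",
    }


update_kwargs = build_set_expression({"phone_number": "123-456-1234"})
print(update_kwargs["UpdateExpression"])
```

The result can be passed straight to the resource API, for example table.update_item(Key={'Name': name, 'Email': email}, **update_kwargs). With ReturnValues set to UPDATED_NEW, the response contains an Attributes entry with the new values, which makes the logged output more informative.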

Here is the Execution output:

Delete Items

To delete an item from the table, use the delete_item() method and provide the item’s primary key.

import json
import logging
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
dynamodb_resource = boto3.resource(
    "dynamodb", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def delete_dynamodb_table_item(table_name, name, email):
    """
    Deletes the DynamoDB table item.
    """
    try:
        table = dynamodb_resource.Table(table_name)
        response = table.delete_item(
            Key={
                'Name': name,
                'Email': email
            }
        )
    except ClientError:
        logger.exception('Could not delete the item.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    table_name = 'hands-on-cloud-dynamodb-table'
    name = 'hands-on-cloud'
    email = 'example@cloud.com'
    logger.info('Deleting item...')
    dynamodb = delete_dynamodb_table_item(
        table_name, name, email)
    logger.info(
        f'Details: {json.dumps(dynamodb, indent=4)}')

if __name__ == '__main__':
    main()

Required arguments are:

- TableName: Specifies the name of the table from which to delete the item.

- Key: A dictionary of attribute names to AttributeValue objects, specifying the primary key of the item to delete.
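delete_item() succeeds even when no item matches the key, so the plain response does not tell you whether anything was removed. One way to find out is to request the old item back with ReturnValues. The following is a sketch; delete_item_and_report is a hypothetical helper name, and table is assumed to be a Boto3 Table resource.

```python
def delete_item_and_report(table, name, email):
    """
    Delete an item and return its previous content, or None if it was absent.

    `table` is assumed to be a boto3 DynamoDB Table resource.
    """
    response = table.delete_item(
        Key={"Name": name, "Email": email},
        # ALL_OLD asks DynamoDB to return the item as it was before deletion.
        ReturnValues="ALL_OLD",
    )
    # "Attributes" is present only when an item was actually deleted.
    return response.get("Attributes")
```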

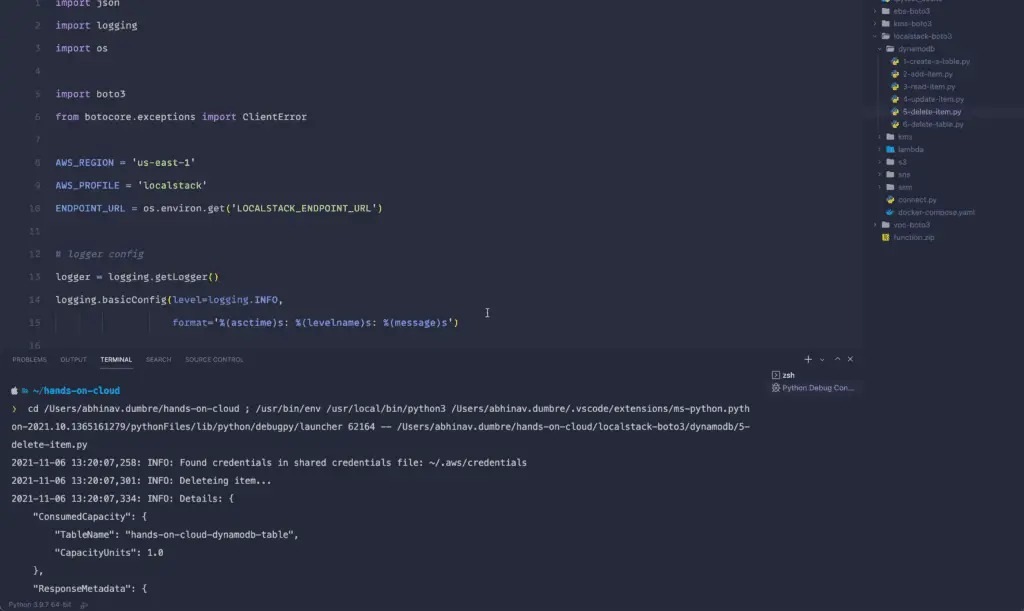

Here’s the Execution output:

Delete a DynamoDB Table

To delete a DynamoDB table, you need to use the delete_table() method.

import json
import logging
from datetime import date, datetime
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
dynamodb_client = boto3.client(
    "dynamodb", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def json_datetime_serializer(obj):
    """
    Helper method to serialize datetime fields
    """
    if isinstance(obj, (datetime, date)):
        return obj.isoformat()
    raise TypeError("Type %s not serializable" % type(obj))

def delete_dynamodb_table(table_name):
    """
    Deletes the DynamoDB table.
    """
    try:
        response = dynamodb_client.delete_table(
            TableName=table_name
        )
    except ClientError:
        logger.exception('Could not delete the table.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    table_name = 'hands-on-cloud-dynamodb-table'
    logger.info('Deleting DynamoDB table...')
    dynamodb = delete_dynamodb_table(table_name)
    logger.info(
        f'Details: {json.dumps(dynamodb, indent=4, default=json_datetime_serializer)}')

if __name__ == '__main__':
    main()

The required argument is:

TableName: Specifies the name of the table to delete.
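Table deletion is asynchronous on AWS: delete_table() returns while the table is still in the DELETING state. If a test needs to recreate the table right away, it can block until the table is gone by using the table_not_exists waiter of the DynamoDB client. The sketch below assumes client is a Boto3 DynamoDB client such as dynamodb_client from the example above; delete_table_and_wait is our own helper name, and how quickly LocalStack completes the deletion may differ from AWS.

```python
def delete_table_and_wait(client, table_name):
    """
    Delete a DynamoDB table and block until it no longer exists.

    `client` is assumed to be a boto3 DynamoDB client.
    """
    response = client.delete_table(TableName=table_name)
    # The waiter polls describe_table() until the table is not found.
    waiter = client.get_waiter("table_not_exists")
    waiter.wait(TableName=table_name)
    return response
```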

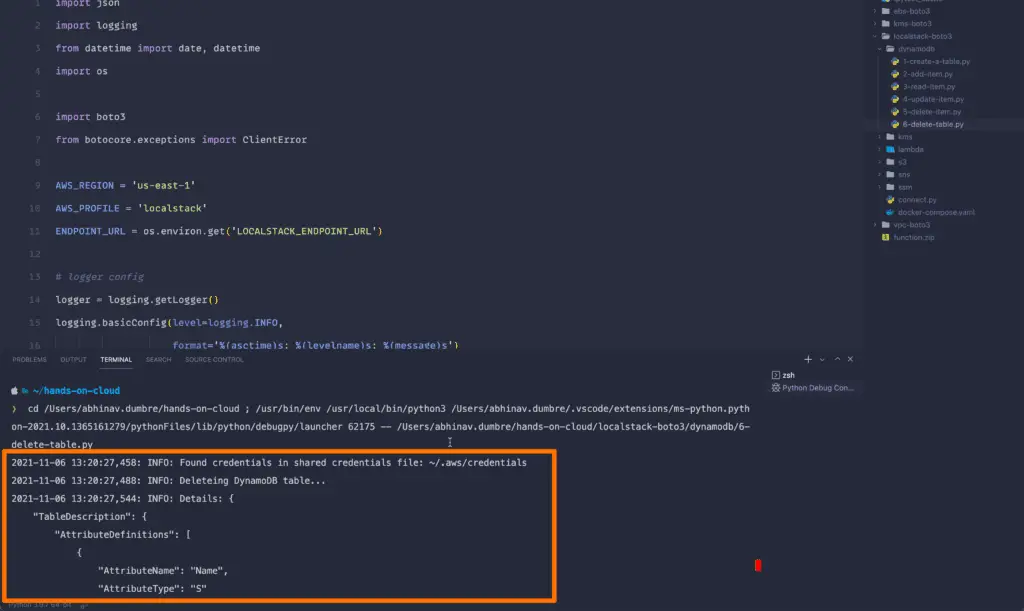

Here is the Execution output:

Working with KMS

AWS KMS (Amazon Web Services Key Management Service) is an AWS-managed service that makes it easy to create and control the encryption keys used to protect data stored in AWS. AWS KMS handles key generation, key rotation, and key deactivation tasks for you. These features simplify keeping your data safe while using AWS services such as Amazon S3 and Amazon DynamoDB.

Creating KMS Key

To create a unique KMS key, you need to use the create_key() method from the Boto3 library.

The create_key() method can create symmetric or asymmetric encryption keys.

import json
import logging
from datetime import date, datetime
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
kms_client = boto3.client(
    "kms", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def json_datetime_serializer(obj):
    """
    Helper method to serialize datetime fields
    """
    if isinstance(obj, (datetime, date)):
        return obj.isoformat()
    raise TypeError("Type %s not serializable" % type(obj))

def create_kms_key():
    """
    Creates a unique customer managed KMS key.
    """
    try:
        response = kms_client.create_key(
            Description='hands-on-cloud-cmk',
            Tags=[
                {
                    'TagKey': 'Name',
                    'TagValue': 'hands-on-cloud-cmk'
                }
            ])
    except ClientError:
        logger.exception('Could not create a CMK key.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    logger.info('Creating a symmetric CMK...')
    kms = create_kms_key()
    logger.info(
        f'Symmetric CMK is created with details: {json.dumps(kms, indent=4, default=json_datetime_serializer)}')

if __name__ == '__main__':
    main()

The optional arguments used in the above example are:

- Description: Specifies a description of the KMS key.

- Tags: Assigns one or more AWS resource tags to the KMS key.

The above example creates a symmetric encryption key, which is the default. To create an asymmetric key, specify the KeySpec argument of the create_key() method. To get more details, refer here.

We also use the json_datetime_serializer() helper function to serialize (convert to string) the datetime.datetime fields returned by the create_key() method.
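The examples in the following sections need the ID of an existing key. Instead of copying it from the log output, you can read it from the create_key() response. The sketch below is one way to do this; create_kms_key_id is a hypothetical helper, kms_client is assumed to be a Boto3 KMS client, and the RSA_2048 value mentioned in the comments is just one of the key specs documented for AWS KMS (whether your LocalStack version supports it is an assumption to verify).

```python
def create_kms_key_id(kms_client, description,
                      key_spec="SYMMETRIC_DEFAULT",
                      key_usage="ENCRYPT_DECRYPT"):
    """
    Create a KMS key and return only its key ID.

    `kms_client` is assumed to be a boto3 KMS client. Pass, for example,
    key_spec="RSA_2048" to request an asymmetric key instead.
    """
    response = kms_client.create_key(
        Description=description,
        KeySpec=key_spec,
        KeyUsage=key_usage,
    )
    # The key details are nested under the "KeyMetadata" entry.
    return response["KeyMetadata"]["KeyId"]
```

The returned value can then be passed as the KeyId argument of enable_key(), disable_key(), and schedule_key_deletion().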

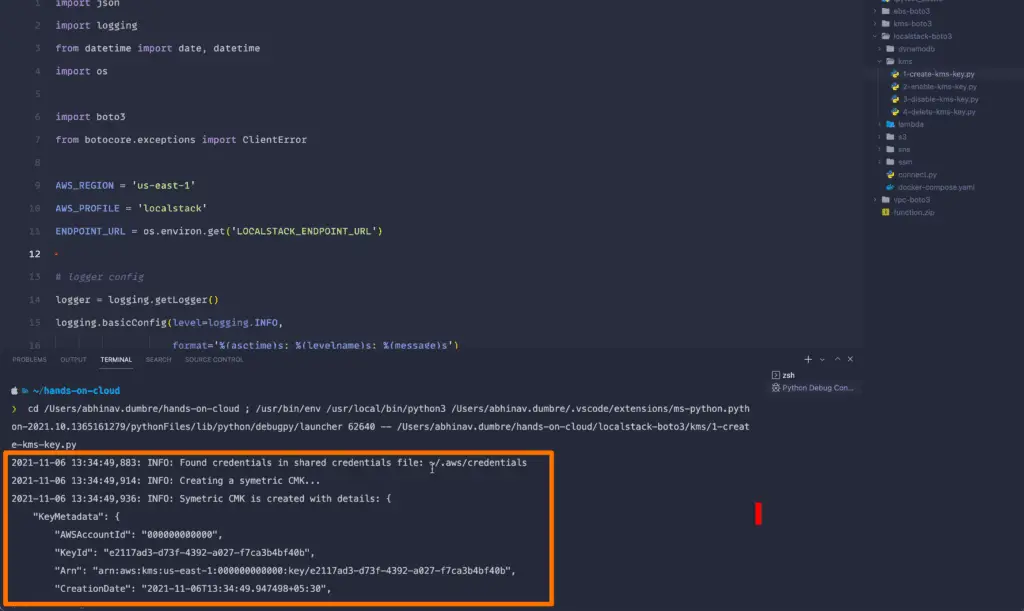

Here is the code execution output:

To verify the KMS key creation, we will use the LocalStack configured AWS CLI.

On macOS or Linux, open the Terminal; on Windows, open PowerShell. Then execute the command below.

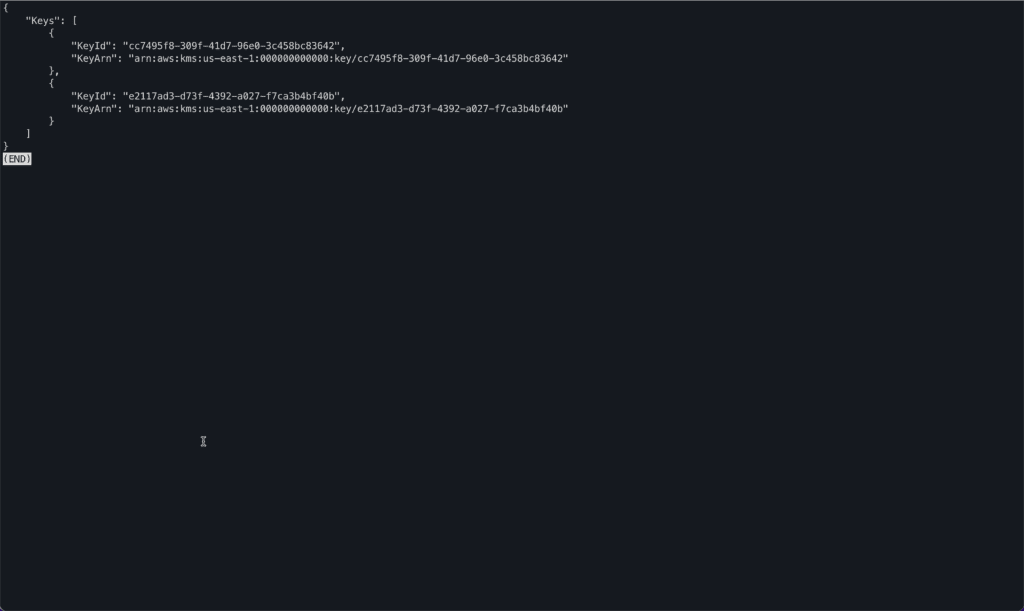

awsls kms list-keys

Expected output:

Enabling KMS Key

To enable a KMS key, you need to use the enable_key() method from the Boto3 library.

This method changes the state of the KMS key from disabled to enabled, allowing you to use that key in encryption operations.

import logging
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
kms_client = boto3.client(
    "kms", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def enable_kms_key(key_id):
    """
    Sets the key state of a KMS key to enabled.
    """
    try:
        response = kms_client.enable_key(
            KeyId=key_id
        )
    except ClientError:
        logger.exception('Could not enable a KMS key.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    # replace with the ID of a key that exists in your LocalStack instance
    KEY_ID = 'd9a916ea-a7de-4d6f-a9bd-2bb9eee10227'
    logger.info('Enabling a KMS key...')
    enable_kms_key(KEY_ID)
    logger.info(f'KMS key ID {KEY_ID} enabled for use.')

if __name__ == '__main__':
    main()

The required argument is:

KeyId: Specify the key ID or ARN of the KMS key to change the state to enabled.
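Once a key is enabled, it can be used in encryption operations. As a rough illustration, the sketch below encrypts a small byte string with the key and decrypts it again using the encrypt() and decrypt() methods of the KMS client. encrypt_then_decrypt is our own helper name, and kms_client is assumed to be a Boto3 KMS client pointing at LocalStack.

```python
def encrypt_then_decrypt(kms_client, key_id, plaintext):
    """
    Encrypt `plaintext` (bytes) with the given KMS key and decrypt it again.

    `kms_client` is assumed to be a boto3 KMS client. Returns the decrypted
    bytes, which should equal the original plaintext.
    """
    encrypted = kms_client.encrypt(KeyId=key_id, Plaintext=plaintext)
    decrypted = kms_client.decrypt(
        KeyId=key_id,
        CiphertextBlob=encrypted["CiphertextBlob"],
    )
    return decrypted["Plaintext"]
```

Running the same round trip against a disabled key is a simple way to confirm that the key state is enforced, since the call is then expected to fail with a ClientError.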

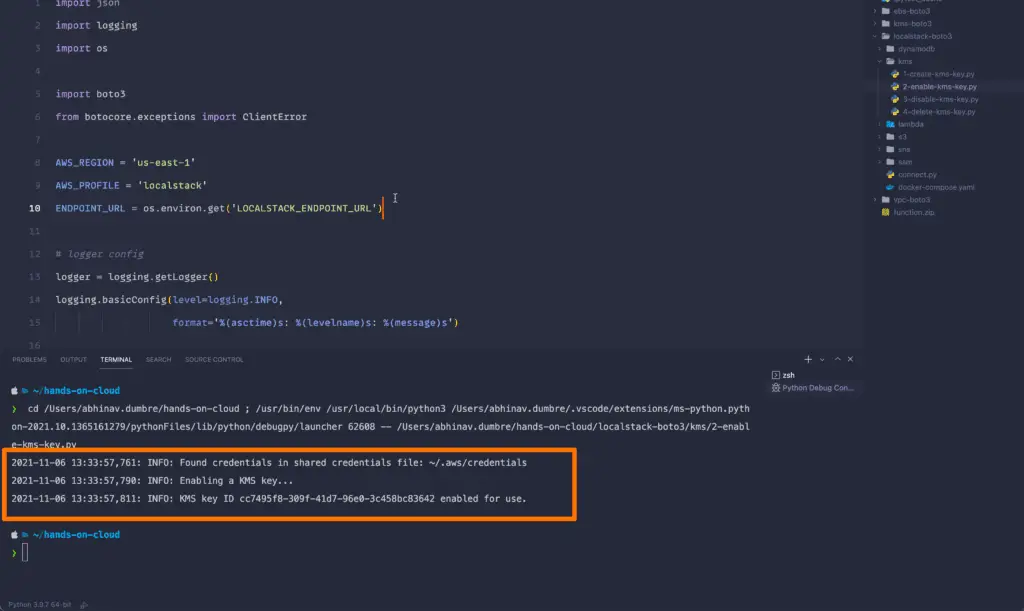

Here is the code execution output:

Disabling KMS Key

To disable a KMS key, you need to use the disable_key() method from the Boto3 library.

As the name suggests, this method changes the state of the KMS key from enabled to disabled, stopping you from using that key in encryption operations.

import logging
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
# LocalStack endpoint URL, read from the environment
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
kms_client = boto3.client(
    "kms", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def disable_kms_key(key_id):
    """
    Sets the key state of a KMS key to disabled.
    """
    try:
        response = kms_client.disable_key(
            KeyId=key_id
        )
    except ClientError:
        logger.exception('Could not disable a KMS key.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    # replace with the ID of a key that exists in your LocalStack instance
    KEY_ID = 'd9a916ea-a7de-4d6f-a9bd-2bb9eee10227'
    logger.info('Disabling a KMS key...')
    disable_kms_key(KEY_ID)
    logger.info(f'KMS key ID {KEY_ID} disabled for use.')

if __name__ == '__main__':
    main()

The required argument is:

KeyId: Specify the key ID or ARN of the KMS key to change the state to disabled.
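Neither enable_key() nor disable_key() returns the resulting key state. To confirm the change, you can read the state back with describe_key(). The following sketch combines both steps; set_key_enabled is a hypothetical helper name, and kms_client is assumed to be a Boto3 KMS client.

```python
def set_key_enabled(kms_client, key_id, enabled):
    """
    Enable or disable a KMS key and return its resulting key state.

    `kms_client` is assumed to be a boto3 KMS client.
    """
    if enabled:
        kms_client.enable_key(KeyId=key_id)
    else:
        kms_client.disable_key(KeyId=key_id)
    # describe_key() reports the state, for example "Enabled" or "Disabled".
    metadata = kms_client.describe_key(KeyId=key_id)["KeyMetadata"]
    return metadata["KeyState"]
```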

Here is the code execution output:

Deleting KMS Key

To delete a KMS key, you need to use the schedule_key_deletion() method from the Boto3 library.

By default, KMS applies a waiting period of 30 days when a key is deleted, but you can specify a waiting period of 7-30 days.

import json
import logging
from datetime import date, datetime
import os
import boto3
from botocore.exceptions import ClientError

AWS_REGION = 'us-east-1'
AWS_PROFILE = 'localstack'
ENDPOINT_URL = os.environ.get('LOCALSTACK_ENDPOINT_URL')

# logger config
logger = logging.getLogger()
logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s: %(levelname)s: %(message)s')

boto3.setup_default_session(profile_name=AWS_PROFILE)
kms_client = boto3.client(
    "kms", region_name=AWS_REGION, endpoint_url=ENDPOINT_URL)

def json_datetime_serializer(obj):
    """
    Helper method to serialize datetime fields
    """
    if isinstance(obj, (datetime, date)):
        return obj.isoformat()
    raise TypeError("Type %s not serializable" % type(obj))

def delete_kms_key(key_id, pending_window_in_days):
    """
    Schedules the deletion of a KMS key.
    """
    try:
        response = kms_client.schedule_key_deletion(
            KeyId=key_id,
            PendingWindowInDays=pending_window_in_days
        )
    except ClientError:
        logger.exception('Could not delete a KMS key.')
        raise
    else:
        return response

def main():
    """
    Main invocation function.
    """
    KEY_ID = 'b5988e5d-3c19-488b-a40b-817594cbe6b0'
    PENDING_WINDOW_IN_DAYS = 7
    logger.info('Scheduling deletion of KMS key...')
    kms = delete_kms_key(KEY_ID, PENDING_WINDOW_IN_DAYS)
    logger.info(
        f'Deletion Details: {json.dumps(kms, indent=4, default=json_datetime_serializer)}')

if __name__ == '__main__':
    main()

The required argument is:

KeyId: Specifies key ID or key ARN of the KMS key to be deleted.

The optional argument used in the above example is:

PendingWindowInDays: Specifies the waiting period in days.

Also, we’re using an additional json_datetime_serializer() method to serialize (convert to string) datetime.datetime fields returned by the schedule_key_deletion() method.
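Because the waiting period must be between 7 and 30 days, it can help to validate the value before calling the API. A scheduled deletion can also be reverted during the waiting period with cancel_key_deletion(). The sketch below illustrates both ideas; the two helper functions and the constant names are our own, and kms_client is assumed to be a Boto3 KMS client.

```python
MIN_PENDING_WINDOW_DAYS = 7
MAX_PENDING_WINDOW_DAYS = 30


def schedule_key_deletion_checked(kms_client, key_id, pending_window_in_days=30):
    """
    Schedule a KMS key for deletion after validating the waiting period.

    `kms_client` is assumed to be a boto3 KMS client.
    """
    if not MIN_PENDING_WINDOW_DAYS <= pending_window_in_days <= MAX_PENDING_WINDOW_DAYS:
        raise ValueError(
            "pending_window_in_days must be between "
            f"{MIN_PENDING_WINDOW_DAYS} and {MAX_PENDING_WINDOW_DAYS}")
    return kms_client.schedule_key_deletion(
        KeyId=key_id,
        PendingWindowInDays=pending_window_in_days,
    )


def cancel_deletion_and_enable(kms_client, key_id):
    """
    Cancel a scheduled deletion and make the key usable again.
    """
    kms_client.cancel_key_deletion(KeyId=key_id)
    # After cancellation the key is left disabled, so enable it explicitly.
    kms_client.enable_key(KeyId=key_id)
```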

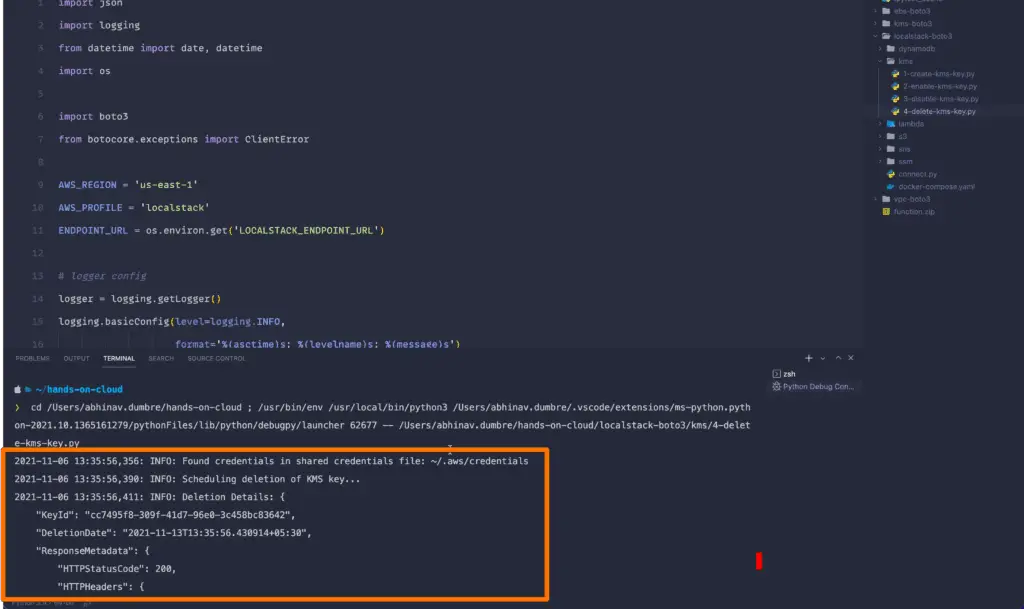

Here is the code execution output:

Summary

LocalStack is an excellent tool for testing AWS services locally. It is useful for developers who want to save time and money when testing their serverless applications, and it can accelerate development against AWS APIs. This post uses the Boto3 and pytest Python libraries to test various AWS services running locally on LocalStack, including S3, Lambda Functions, DynamoDB, and KMS.