With the Boto3 S3 client and resource, you can perform various operations using the Amazon S3 API, such as creating and managing buckets, uploading and downloading objects, setting permissions on buckets and objects, and more. You can also use the Boto3 S3 client to manage metadata associated with your Amazon S3 resources.

This Boto3 S3 tutorial covers examples of using the Boto3 library to manage the Amazon S3 service, including S3 Buckets, S3 Objects, S3 Bucket Policies, etc., from your Python programs or scripts.

Prerequisites

To start automating Amazon S3 operations and making API calls to the Amazon S3 service, you must first configure your Python environment.

In general, here’s what you need to have installed:

- Python 3

- Boto3

- AWS CLI tools

How to connect to S3 using Boto3?

The Boto3 library provides you with two ways to access APIs for managing AWS services:

- The client, which gives you access to the low-level API. For example, you can work with the API response data as Python dictionaries that mirror the service’s JSON responses.

- The resource, which lets you use AWS services in a higher-level, object-oriented way. For more information on the topic, look at AWS CLI vs. botocore vs. Boto3.

Here’s how you can instantiate the Boto3 client to start working with Amazon S3 APIs:

    import boto3

    AWS_REGION = "us-east-1"

    client = boto3.client("s3", region_name=AWS_REGION)

Here’s an example of using the boto3.resource method:

    import boto3

    # boto3.resource also supports region_name
    resource = boto3.resource('s3')

As soon as you instantiate the Boto3 S3 client or resource in your code, you can start managing the Amazon S3 service.

How to create S3 bucket using Boto3?

To create a bucket in Amazon S3 using the Boto3 library, call the create_bucket() method of either the S3 client or the S3 resource.

Note: Every Amazon S3 Bucket must have a unique name. Moreover, this name must be unique across all AWS accounts and customers.

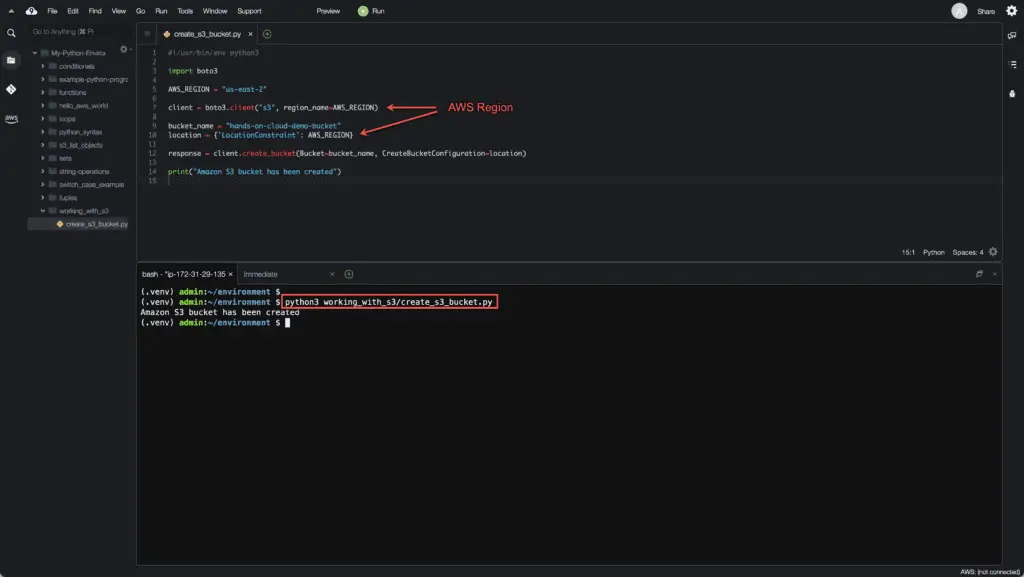

Creating S3 Bucket using Boto3 client

To avoid exceptions while working with the Amazon S3 service, we strongly recommend that you explicitly define the AWS Region, rather than relying on the default one, for both the Boto3 client and the S3 Bucket configuration:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"

    client = boto3.client("s3", region_name=AWS_REGION)

    bucket_name = "hands-on-cloud-demo-bucket"
    location = {'LocationConstraint': AWS_REGION}

    response = client.create_bucket(Bucket=bucket_name, CreateBucketConfiguration=location)

    print("Amazon S3 bucket has been created")

Here’s an example output:

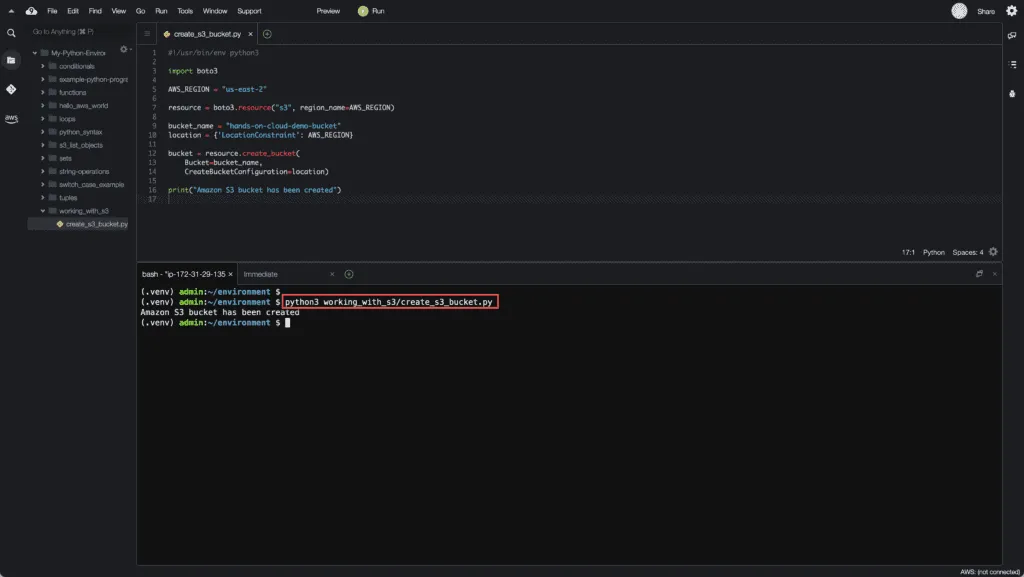
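One detail is worth knowing if you make the Region configurable: for us-east-1, the CreateBucket API expects no LocationConstraint at all, and sending one typically fails with an InvalidLocationConstraint error. Here is a minimal sketch of a helper that builds the create_bucket() arguments accordingly. The function name build_create_bucket_kwargs is hypothetical and not part of Boto3:

```python
def build_create_bucket_kwargs(bucket_name, region):
    """Build keyword arguments for create_bucket() (hypothetical helper)."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location and must not be sent as a constraint
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


print(build_create_bucket_kwargs("hands-on-cloud-demo-bucket", "us-east-2"))
print(build_create_bucket_kwargs("hands-on-cloud-demo-bucket", "us-east-1"))
```

The result can then be unpacked into the call, for example client.create_bucket(**build_create_bucket_kwargs(bucket_name, AWS_REGION)).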

Creating S3 Bucket using Boto3 resource

Similarly, you can use the Boto3 resource to create an Amazon S3 bucket:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"

    resource = boto3.resource("s3", region_name=AWS_REGION)

    bucket_name = "hands-on-cloud-demo-bucket"
    location = {'LocationConstraint': AWS_REGION}

    bucket = resource.create_bucket(
        Bucket=bucket_name,
        CreateBucketConfiguration=location)

    print("Amazon S3 bucket has been created")

How to list Amazon S3 Buckets using Boto3?

There are two ways to list all Amazon S3 Buckets:

- list_buckets() method of the S3 client

- buckets.all() method of the S3 resource

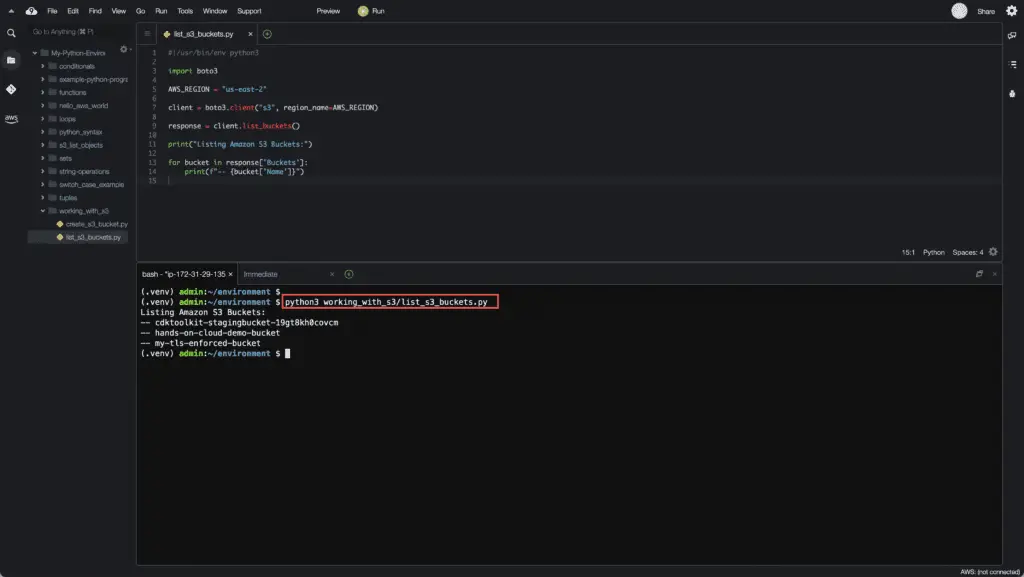

Listing S3 Buckets using Boto3 client

Here’s an example of listing all bucket names of the existing S3 Buckets using the S3 client:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"

    client = boto3.client("s3", region_name=AWS_REGION)

    response = client.list_buckets()

    print("Listing Amazon S3 Buckets:")

    for bucket in response['Buckets']:
        print(f"-- {bucket['Name']}")

Here’s an example output:

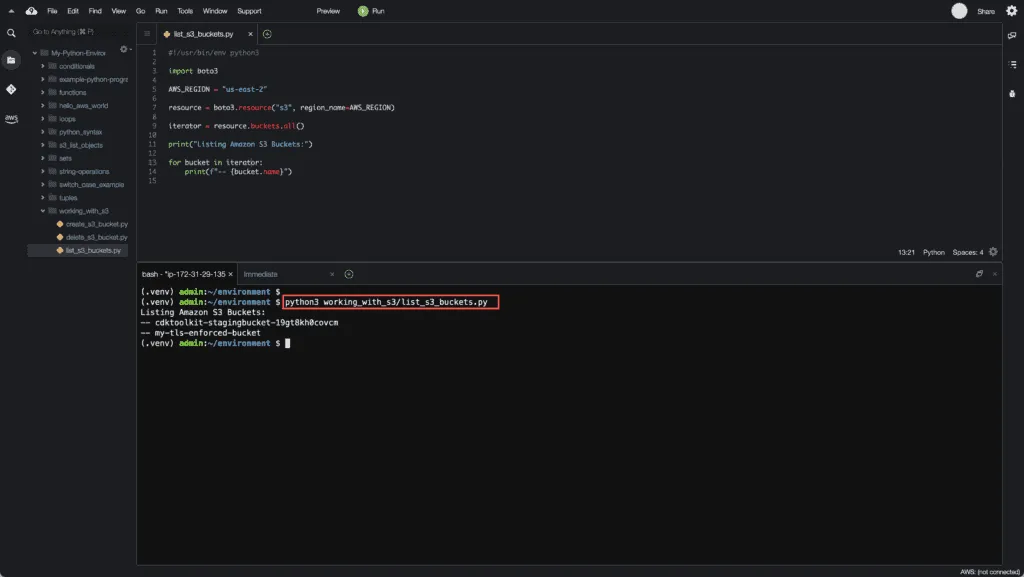
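Each entry in the Buckets list of the list_buckets() response also carries a CreationDate value (a datetime object). Here is a small sketch that formats both fields. The function name format_bucket_lines and the hand-written sample response are assumptions used for illustration only, so the snippet runs without calling AWS:

```python
from datetime import datetime, timezone


def format_bucket_lines(response):
    """Turn a list_buckets() response into printable lines (hypothetical helper)."""
    lines = []
    for bucket in response.get("Buckets", []):
        created = bucket["CreationDate"].strftime("%Y-%m-%d")
        lines.append(f"-- {bucket['Name']} (created {created})")
    return lines


# Hand-written sample that mimics the shape of a real response
sample_response = {
    "Buckets": [
        {
            "Name": "hands-on-cloud-demo-bucket",
            "CreationDate": datetime(2021, 1, 1, tzinfo=timezone.utc),
        }
    ]
}

for line in format_bucket_lines(sample_response):
    print(line)
```

With a real client, you would pass client.list_buckets() instead of sample_response.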

Listing S3 Buckets using Boto3 resource

Here’s an example of listing existing S3 Buckets using the S3 resource:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"

    resource = boto3.resource("s3", region_name=AWS_REGION)

    iterator = resource.buckets.all()

    print("Listing Amazon S3 Buckets:")

    for bucket in iterator:
        print(f"-- {bucket.name}")

How to delete Amazon S3 Bucket using Boto3?

There are two possible ways of deleting an Amazon S3 Bucket using the Boto3 library:

- delete_bucket() method of the S3 client

- delete() method of the S3.Bucket resource

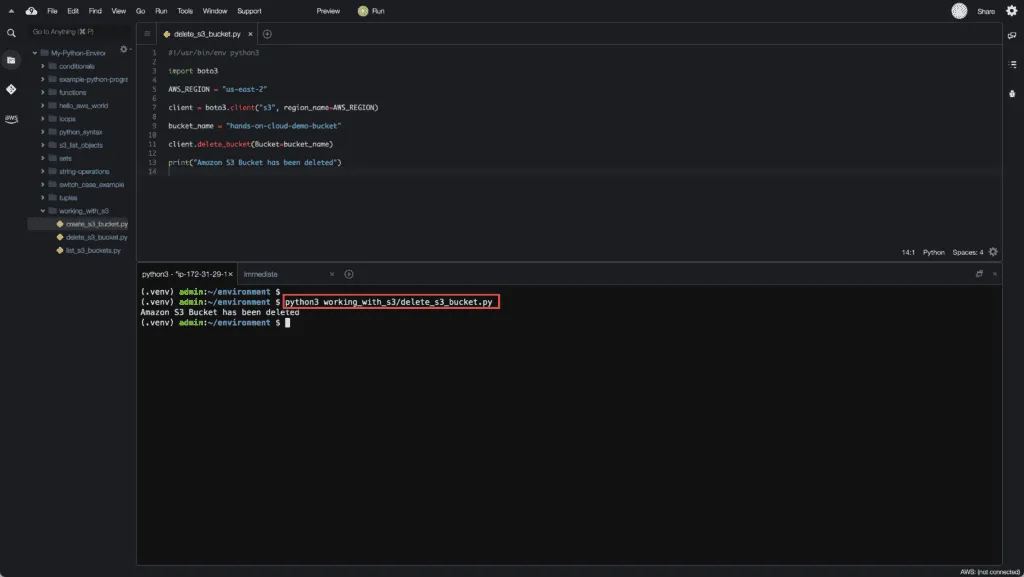

Deleting S3 Buckets using Boto3 client

Here’s an example of deleting the Amazon S3 bucket using the Boto3 client:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"

    client = boto3.client("s3", region_name=AWS_REGION)

    bucket_name = "hands-on-cloud-demo-bucket"

    client.delete_bucket(Bucket=bucket_name)

    print("Amazon S3 Bucket has been deleted")

Here’s an example output:

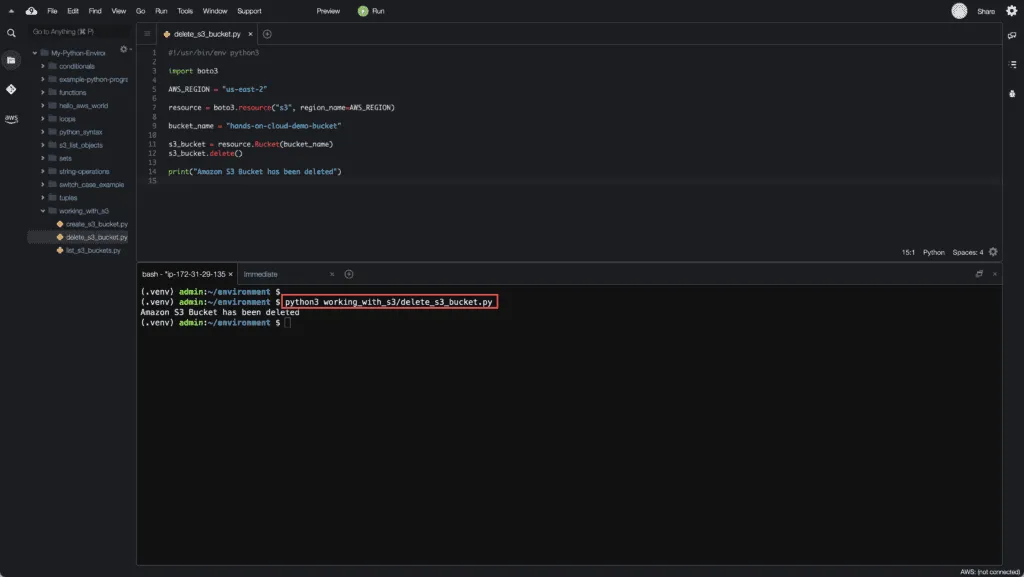

Deleting S3 Buckets using Boto3 resource

Here’s an example of deleting the Amazon S3 bucket using the Boto3 resource:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"

    resource = boto3.resource("s3", region_name=AWS_REGION)

    bucket_name = "hands-on-cloud-demo-bucket"

    s3_bucket = resource.Bucket(bucket_name)

    s3_bucket.delete()

    print("Amazon S3 Bucket has been deleted")

Here’s an example output:

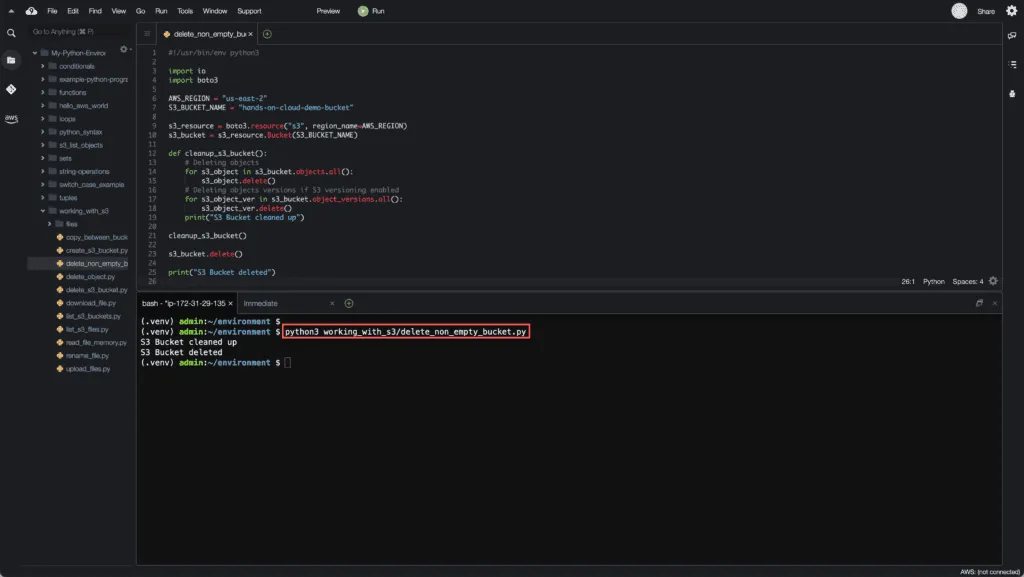

Deleting non-empty S3 Bucket using Boto3

To delete a non-empty S3 Bucket using the Boto3 library, you must empty it first. Otherwise, the delete call fails with the BucketNotEmpty error. The cleanup operation requires deleting all objects in the S3 Bucket and, if versioning is enabled, all of their versions:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)
    s3_bucket = s3_resource.Bucket(S3_BUCKET_NAME)

    def cleanup_s3_bucket():
        # Deleting objects
        for s3_object in s3_bucket.objects.all():
            s3_object.delete()
        # Deleting objects versions if S3 versioning enabled
        for s3_object_ver in s3_bucket.object_versions.all():
            s3_object_ver.delete()
        print("S3 Bucket cleaned up")

    cleanup_s3_bucket()

    s3_bucket.delete()

    print("S3 Bucket deleted")
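Deleting objects one at a time issues one request per object, which can be slow for large buckets. Boto3 resource collections also provide a batch delete() action that groups many keys into each request. Here is a minimal sketch, assuming a Boto3 S3 Bucket resource is passed in. The function name empty_bucket is hypothetical:

```python
def empty_bucket(s3_bucket):
    """Empty a bucket using collection batch actions (hypothetical helper).

    Expects a Boto3 S3 Bucket resource, e.g. s3_resource.Bucket(name).
    """
    # Batch-delete the current objects
    s3_bucket.objects.all().delete()
    # Batch-delete remaining object versions and delete markers
    # (only relevant when versioning is or was enabled)
    s3_bucket.object_versions.all().delete()
```

After empty_bucket(s3_bucket) returns, s3_bucket.delete() should no longer fail because of leftover objects.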

How to upload file to S3 Bucket using Boto3?

The Boto3 library has two ways for uploading files and objects into an S3 Bucket:

- upload_file() method allows you to upload a file from the file system

- upload_fileobj() method allows you to upload a file-like object opened in binary mode (see Working with Files in Python)

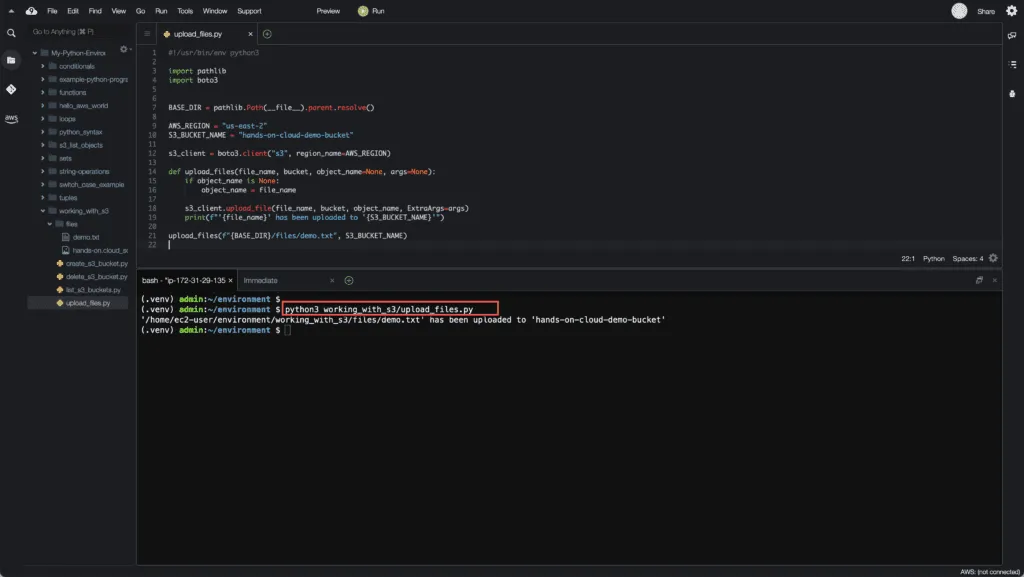

Uploading a file to S3 Bucket using Boto3

The upload_file() method requires the following arguments:

- file_name – filename on the local filesystem

- bucket_name – the name of the S3 bucket

- object_name – the name of the uploaded file (usually equal to the file_name)

Here’s an example of uploading a file to an S3 Bucket:

    #!/usr/bin/env python3

    import pathlib

    import boto3

    BASE_DIR = pathlib.Path(__file__).parent.resolve()
    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_client = boto3.client("s3", region_name=AWS_REGION)

    def upload_files(file_name, bucket, object_name=None, args=None):
        # Default the object name to the local file name
        if object_name is None:
            object_name = file_name
        s3_client.upload_file(file_name, bucket, object_name, ExtraArgs=args)
        print(f"'{file_name}' has been uploaded to '{bucket}'")

    upload_files(f"{BASE_DIR}/files/demo.txt", S3_BUCKET_NAME)

In the code above, we’re using the pathlib module to get the script location path and save it to the BASE_DIR variable. Then, we create the upload_files() function responsible for calling the S3 client and uploading the file.

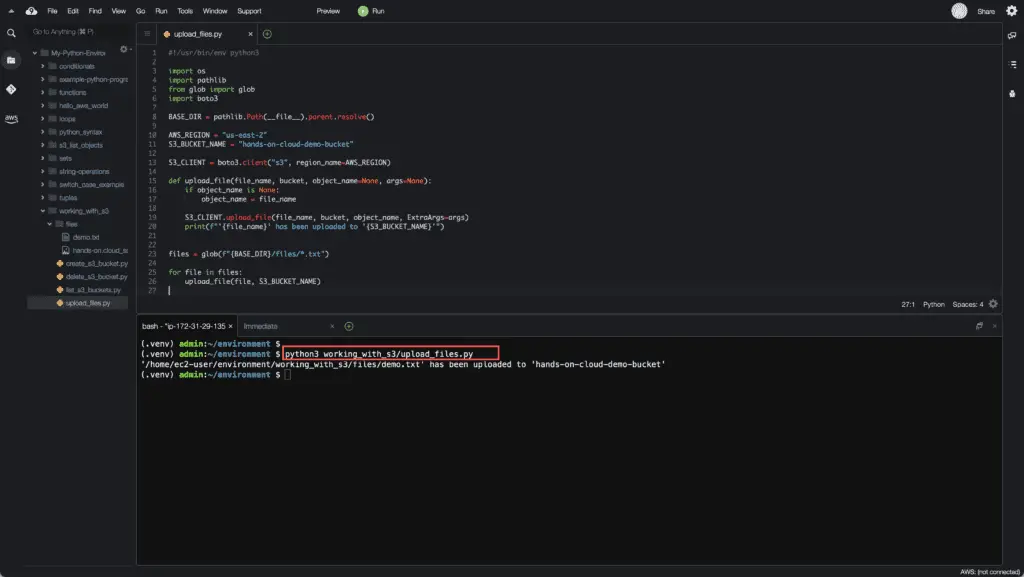
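The args parameter of the function above is passed to upload_file() as ExtraArgs, which accepts settings such as ContentType. Here is a small sketch that guesses the content type from the file name using the standard mimetypes module. The function name guess_extra_args is hypothetical:

```python
import mimetypes


def guess_extra_args(file_name):
    """Build ExtraArgs with a guessed ContentType (hypothetical helper)."""
    content_type, _encoding = mimetypes.guess_type(file_name)
    if content_type is None:
        # Unknown type: pass no extra arguments at all
        return None
    return {"ContentType": content_type}


print(guess_extra_args("files/demo.txt"))
```

You could then call upload_files(path, S3_BUCKET_NAME, args=guess_extra_args(path)) so that the stored object carries a matching Content-Type.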

Uploading multiple files to S3 bucket

To upload multiple files to the Amazon S3 bucket, you can use the glob() function from the glob module. This function returns all file paths that match a given pattern as a Python list. You can use glob to select certain files with a search pattern that contains wildcard characters:

    #!/usr/bin/env python3

    import pathlib
    from glob import glob

    import boto3

    BASE_DIR = pathlib.Path(__file__).parent.resolve()
    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"
    S3_CLIENT = boto3.client("s3", region_name=AWS_REGION)

    def upload_file(file_name, bucket, object_name=None, args=None):
        # Default the object name to the local file name
        if object_name is None:
            object_name = file_name
        S3_CLIENT.upload_file(file_name, bucket, object_name, ExtraArgs=args)
        print(f"'{file_name}' has been uploaded to '{bucket}'")

    files = glob(f"{BASE_DIR}/files/*.txt")

    for file in files:
        upload_file(file, S3_BUCKET_NAME)

Here’s an example output:

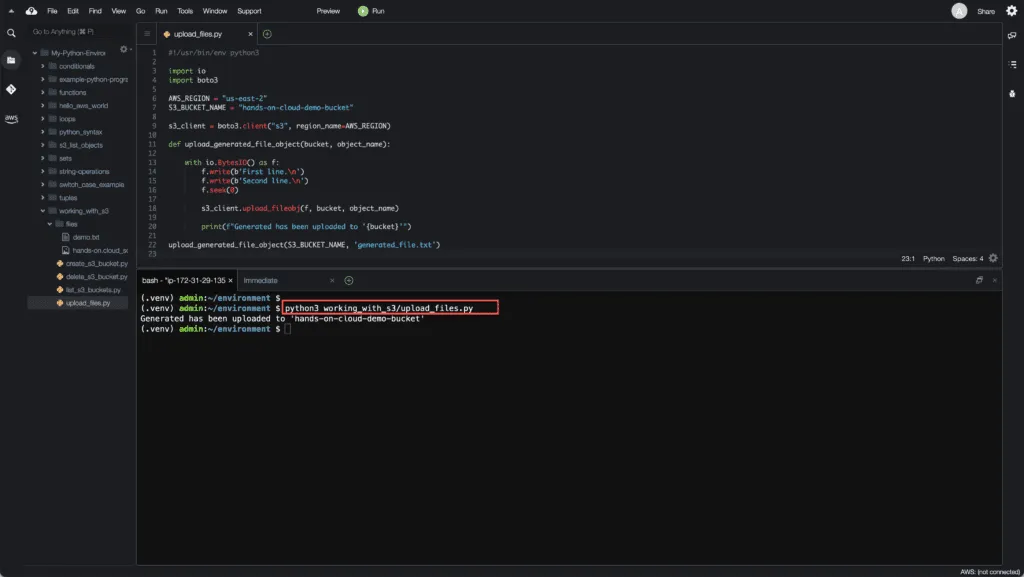
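When the object name defaults to the local file name, the full local path ends up in the object key. If you would rather keep keys relative to the upload directory, one option is to compute them with pathlib first. Here is a sketch under that assumption. The function name plan_uploads is hypothetical, and the demonstration runs on a temporary directory so that it does not touch AWS:

```python
import pathlib
import tempfile


def plan_uploads(base_dir, pattern="*.txt"):
    """Return (local_path, object_key) pairs for matching files (hypothetical helper)."""
    base = pathlib.Path(base_dir)
    plan = []
    # rglob() also descends into subdirectories
    for path in sorted(base.rglob(pattern)):
        if path.is_file():
            # Keys use forward slashes regardless of the operating system
            key = path.relative_to(base).as_posix()
            plan.append((str(path), key))
    return plan


# Demonstration on a throwaway directory
with tempfile.TemporaryDirectory() as tmp:
    root = pathlib.Path(tmp)
    (root / "reports").mkdir()
    (root / "demo.txt").write_text("demo")
    (root / "reports" / "2021.txt").write_text("report")
    for local_path, key in plan_uploads(root):
        print(f"{local_path} -> {key}")
```

Each pair can then be passed to the upload function as the file name and the object name.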

Uploading generated file object data to S3 Bucket using Boto3

If you need to upload file-like object data to the Amazon S3 Bucket, you can use the upload_fileobj() method. This method might be useful when you need to generate file content in memory and then upload it to S3 without saving it on the file system.

Note: the upload_fileobj() method requires a file-like object opened in binary mode.

Here’s an example of uploading a generated file to the S3 Bucket:

    #!/usr/bin/env python3

    import io

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_client = boto3.client("s3", region_name=AWS_REGION)

    def upload_generated_file_object(bucket, object_name):
        with io.BytesIO() as f:
            f.write(b'First line.\n')
            f.write(b'Second line.\n')
            # Rewind the buffer so the upload starts from the beginning
            f.seek(0)
            s3_client.upload_fileobj(f, bucket, object_name)
            print(f"Generated file has been uploaded to '{bucket}'")

    upload_generated_file_object(S3_BUCKET_NAME, 'generated_file.txt')

Here’s an example output:

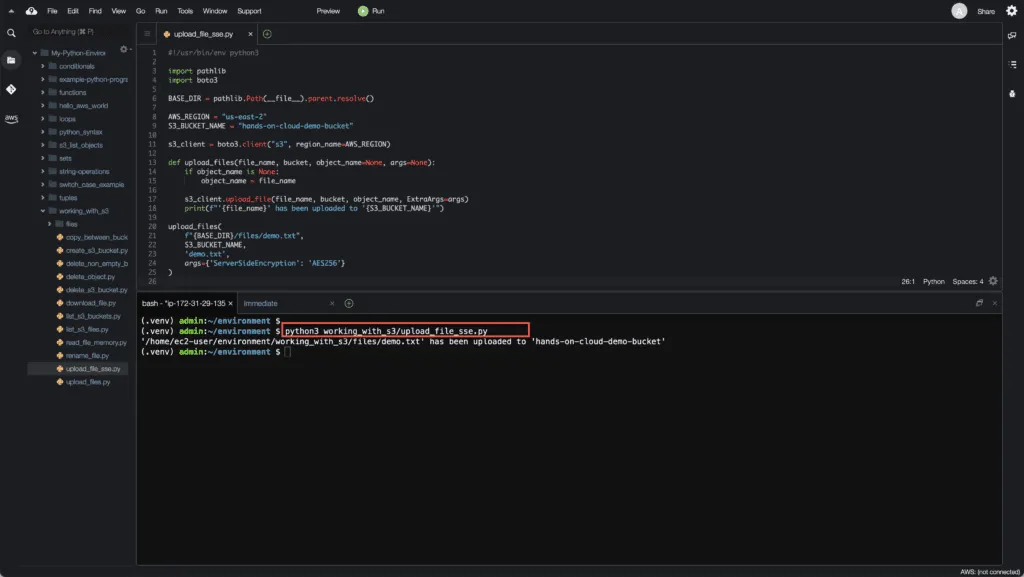
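A typical case for in-memory uploads is generated text data such as CSV. Because upload_fileobj() expects binary data, the text has to be encoded first. Here is a minimal sketch of that step only. The function name build_csv_buffer is hypothetical:

```python
import csv
import io


def build_csv_buffer(rows):
    """Render rows as CSV and return a binary buffer (hypothetical helper)."""
    text_buffer = io.StringIO(newline="")
    writer = csv.writer(text_buffer, lineterminator="\n")
    writer.writerows(rows)
    # Encode to bytes; a new BytesIO starts at position 0, ready to be read
    return io.BytesIO(text_buffer.getvalue().encode("utf-8"))


buffer = build_csv_buffer([["id", "name"], [1, "alpha"]])
print(buffer.getvalue())
```

The returned buffer can be handed to s3_client.upload_fileobj(buffer, bucket, object_name) in place of the handwritten lines.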

Enabling S3 Server-Side Encryption (SSE-S3) for uploaded objects

You can use S3 Server-Side Encryption (SSE-S3) to protect your data at rest in Amazon S3. We will use server-side encryption with Amazon S3 managed keys, which uses the AES-256 algorithm:

    #!/usr/bin/env python3

    import pathlib

    import boto3

    BASE_DIR = pathlib.Path(__file__).parent.resolve()
    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_client = boto3.client("s3", region_name=AWS_REGION)

    def upload_files(file_name, bucket, object_name=None, args=None):
        if object_name is None:
            object_name = file_name
        s3_client.upload_file(file_name, bucket, object_name, ExtraArgs=args)
        print(f"'{file_name}' has been uploaded to '{bucket}'")

    upload_files(
        f"{BASE_DIR}/files/demo.txt",
        S3_BUCKET_NAME,
        'demo.txt',
        args={'ServerSideEncryption': 'AES256'}
    )

Here’s an execution output:

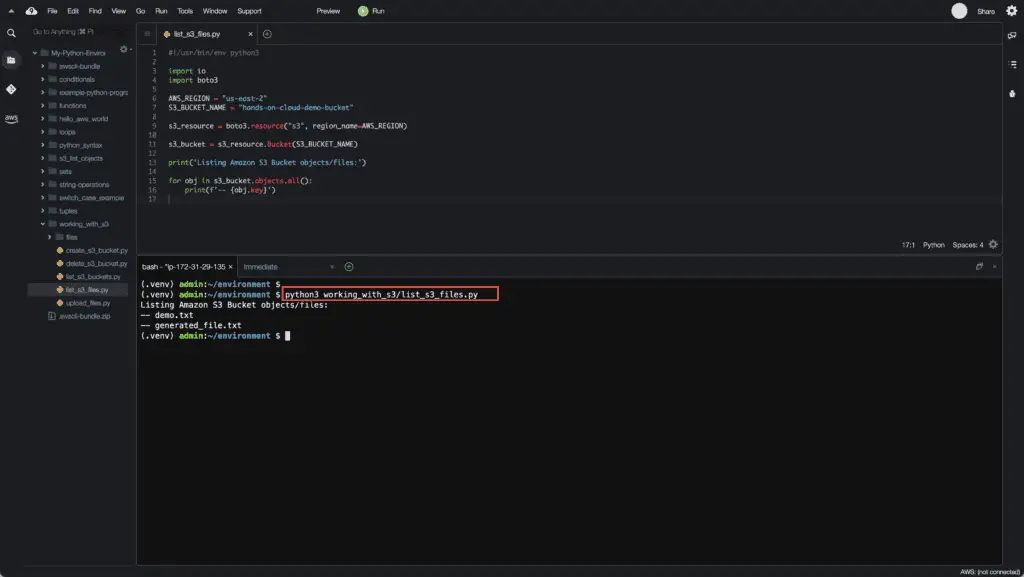
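The same ExtraArgs mechanism can request encryption with an AWS KMS key (SSE-KMS) instead of SSE-S3. Here is a sketch of a helper that builds either variant. The function name sse_extra_args and the key ID shown are placeholders, not real values:

```python
def sse_extra_args(kms_key_id=None):
    """Build ExtraArgs for SSE-S3 or SSE-KMS uploads (hypothetical helper)."""
    if kms_key_id is None:
        # SSE-S3: Amazon S3 managed keys
        return {"ServerSideEncryption": "AES256"}
    # SSE-KMS: encrypt with the given KMS key
    return {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key_id}


print(sse_extra_args())
# Placeholder key ID for illustration only
print(sse_extra_args("1234abcd-12ab-34cd-56ef-1234567890ab"))
```

Note that SSE-KMS additionally requires that the caller is allowed to use the KMS key.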

How to get a list of files from S3 Bucket?

The most convenient way to get a list of files with their key names from an S3 Bucket using Boto3 is to use the objects.all() method of the Bucket resource:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)
    s3_bucket = s3_resource.Bucket(S3_BUCKET_NAME)

    print('Listing Amazon S3 Bucket objects/files:')

    for obj in s3_bucket.objects.all():
        print(f'-- {obj.key}')

Here’s an example output:

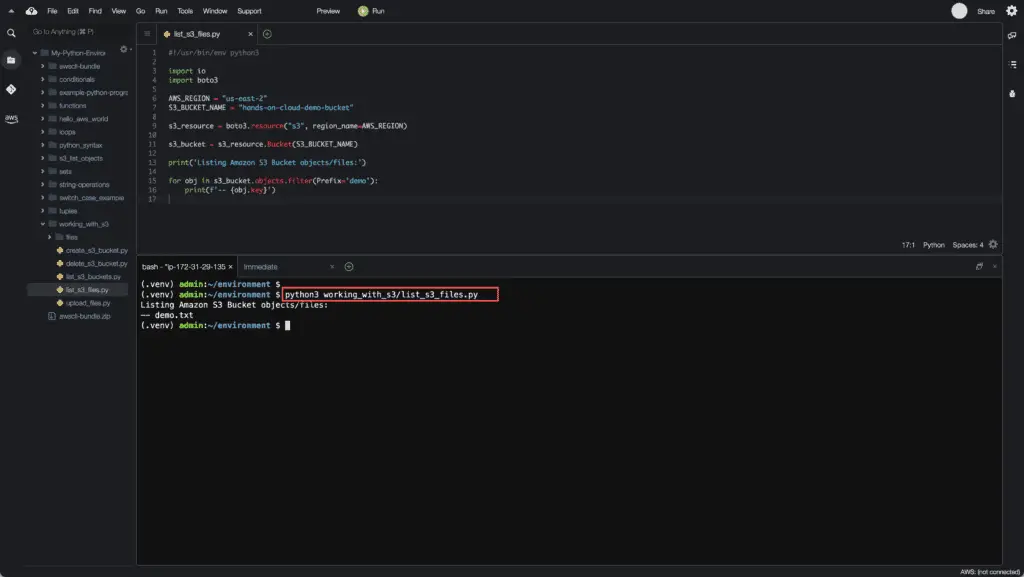

Filtering results of S3 list operation using Boto3

If you need to get a list of S3 objects whose keys start with a specific prefix, you can use the .filter() method:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)
    s3_bucket = s3_resource.Bucket(S3_BUCKET_NAME)

    print('Listing Amazon S3 Bucket objects/files:')

    for obj in s3_bucket.objects.filter(Prefix='demo'):
        print(f'-- {obj.key}')

Here’s the output. Instead of getting all files, we’re getting only the files whose keys start with the demo prefix:

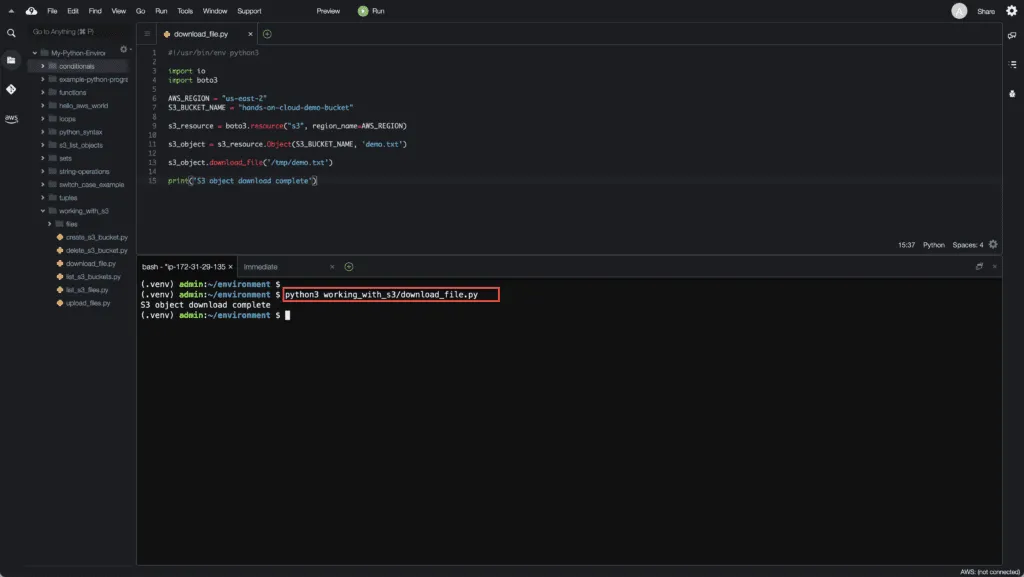
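The resource collections above handle pagination for you. If you prefer the S3 client, keep in mind that a single list_objects_v2() call returns at most 1,000 keys, so a paginator is the usual way to walk through larger buckets. Here is a minimal sketch. The function name list_keys is hypothetical, and the client is passed in as an argument:

```python
def list_keys(s3_client, bucket_name, prefix=""):
    """Collect all object keys under a prefix (hypothetical helper)."""
    keys = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name, Prefix=prefix):
        # "Contents" is absent when a page has no matching objects
        for item in page.get("Contents", []):
            keys.append(item["Key"])
    return keys
```

For example, list_keys(boto3.client("s3"), S3_BUCKET_NAME, "demo") would be the client-side counterpart of the filter() call above.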

How to download file from S3 Bucket?

You can use the download_file() method to download the S3 object to your local file system:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)
    s3_object = s3_resource.Object(S3_BUCKET_NAME, 'demo.txt')

    s3_object.download_file('/tmp/demo.txt')

    print('S3 object download complete')

Here’s an output example:

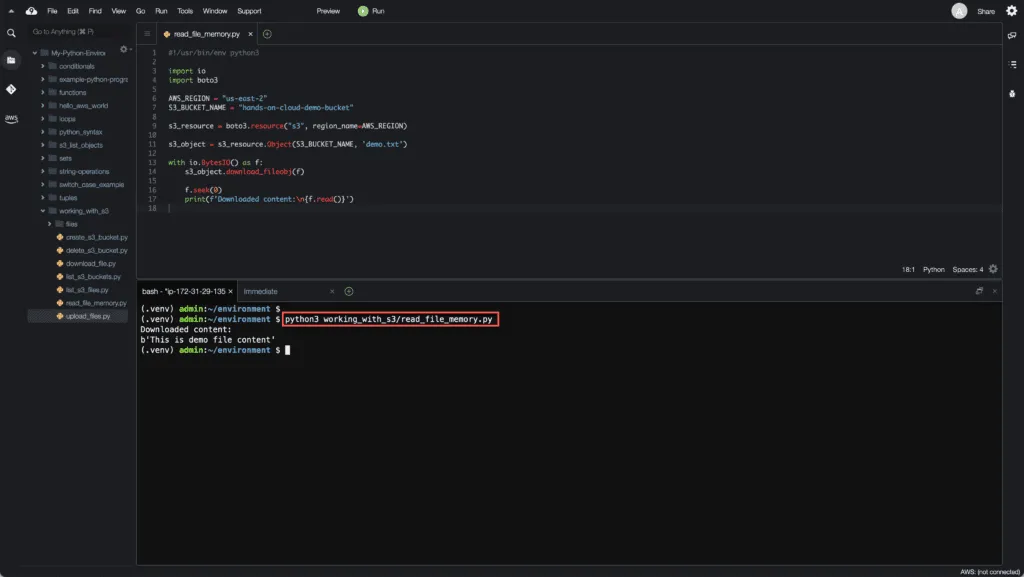

How to read files from the S3 bucket into memory?

You can use the download_fileobj() method to read the S3 object into an in-memory buffer instead of a local file:

    #!/usr/bin/env python3

    import io

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)
    s3_object = s3_resource.Object(S3_BUCKET_NAME, 'demo.txt')

    with io.BytesIO() as f:
        s3_object.download_fileobj(f)
        # Rewind the buffer before reading the downloaded bytes
        f.seek(0)
        print(f'Downloaded content:\n{f.read()}')

Here’s an example output:

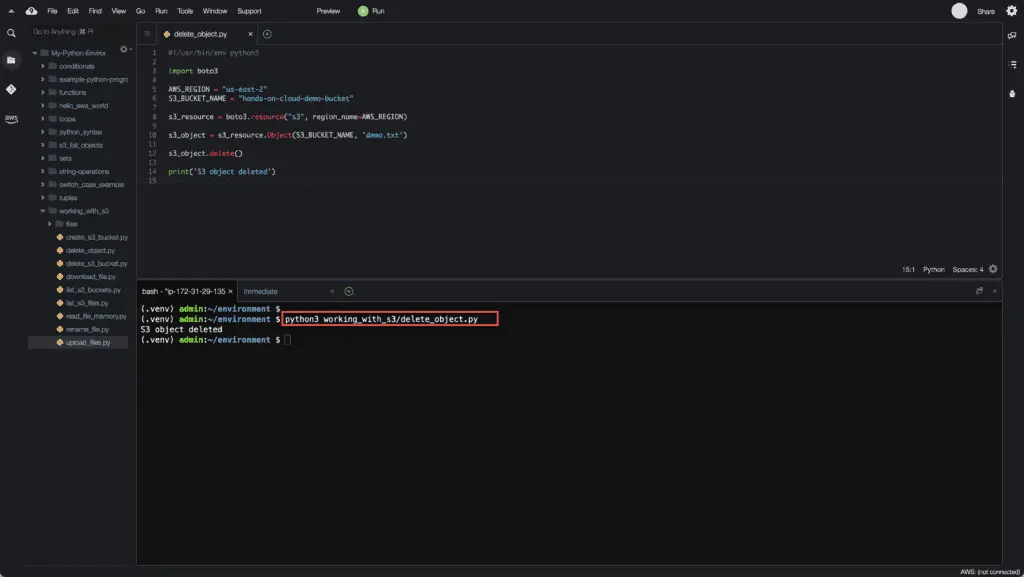
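The buffer holds raw bytes. If the object is a text file, you usually want a decoded string instead. Here is a short sketch that reads the object body through get() and decodes it. The function name read_s3_text is hypothetical, and UTF-8 is an assumption about how the file was encoded:

```python
def read_s3_text(s3_object, encoding="utf-8"):
    """Return the content of an S3 Object resource as text (hypothetical helper)."""
    # get() returns a dict whose "Body" is a stream of the object's bytes
    body = s3_object.get()["Body"]
    return body.read().decode(encoding)
```

With the resource from the example above, read_s3_text(s3_object) would return the content of demo.txt as a str.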

How to delete S3 objects using Boto3?

To delete an object from an Amazon S3 Bucket, you need to call the delete() method of the Object instance representing that object:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)
    s3_object = s3_resource.Object(S3_BUCKET_NAME, 'new_demo.txt')

    s3_object.delete()

    print('S3 object deleted')

Here’s an execution example:

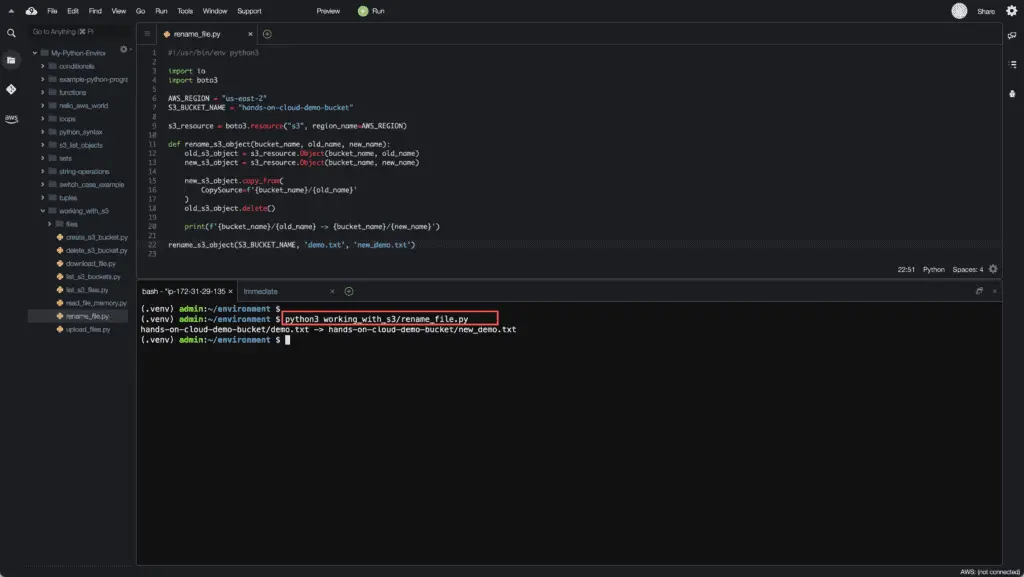
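When you need to delete many objects at once, the S3 client’s delete_objects() method accepts up to 1,000 keys per request. Here is a sketch that splits a longer list of keys into batches. The function name delete_keys is hypothetical, and the client is passed in as an argument:

```python
def delete_keys(s3_client, bucket_name, keys, batch_size=1000):
    """Delete keys in batches and return how many were submitted (hypothetical helper)."""
    keys = list(keys)
    submitted = 0
    for start in range(0, len(keys), batch_size):
        batch = keys[start:start + batch_size]
        s3_client.delete_objects(
            Bucket=bucket_name,
            # Quiet mode asks S3 to report only the keys that failed
            Delete={"Objects": [{"Key": key} for key in batch], "Quiet": True},
        )
        submitted += len(batch)
    return submitted
```

The return value counts the keys sent to S3. It does not confirm that each deletion succeeded, so a production version would likely inspect the Errors list in each response.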

How to rename S3 file object using Boto3?

There’s no single API call to rename an S3 object. So, to rename an S3 object, you need to copy it to a new object with a new name and then delete the old object:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)

    def rename_s3_object(bucket_name, old_name, new_name):
        old_s3_object = s3_resource.Object(bucket_name, old_name)
        new_s3_object = s3_resource.Object(bucket_name, new_name)
        # Copy the object under the new name, then remove the original
        new_s3_object.copy_from(
            CopySource=f'{bucket_name}/{old_name}'
        )
        old_s3_object.delete()
        print(f'{bucket_name}/{old_name} -> {bucket_name}/{new_name}')

    rename_s3_object(S3_BUCKET_NAME, 'demo.txt', 'new_demo.txt')

Here’s an execution result:

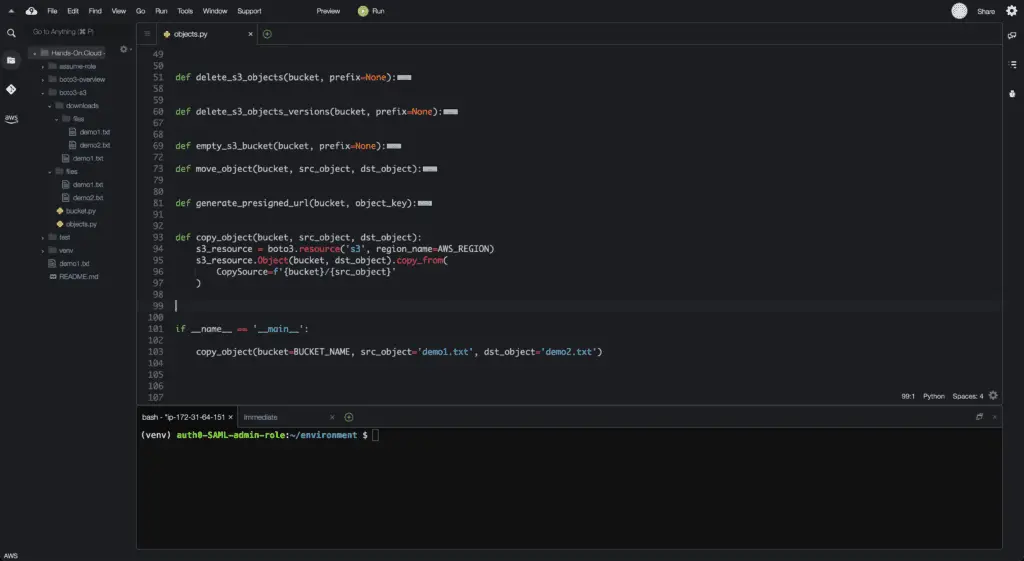

How to copy file objects within S3 bucket using Boto3?

To copy file objects within an S3 Bucket using Boto3, you can use the copy_from() method.

We can adjust the previous example so that it copies the object without deleting the original:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    BUCKET_NAME = "hands-on-cloud-demo-bucket"

    def copy_object(bucket, src_object, dst_object):
        s3_resource = boto3.resource('s3', region_name=AWS_REGION)
        s3_resource.Object(bucket, dst_object).copy_from(
            CopySource=f'{bucket}/{src_object}'
        )

    copy_object(bucket=BUCKET_NAME, src_object='demo1.txt', dst_object='demo2.txt')

As written, the copy_object() function copies the S3 object within the same S3 Bucket. To copy between S3 Buckets, the source and destination bucket names need to be passed separately.

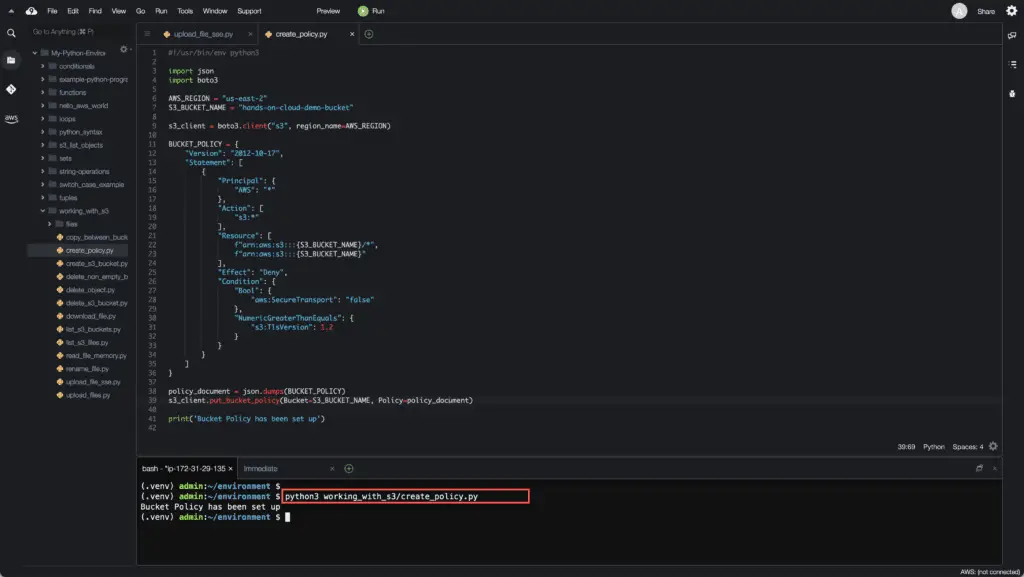
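For a copy between two buckets, the CopySource argument of copy_from() can also be given as a dictionary with Bucket and Key entries, which avoids building the source string by hand. Here is a minimal sketch. The function name copy_between_buckets is hypothetical, and the resource is passed in as an argument:

```python
def copy_between_buckets(s3_resource, src_bucket, src_key, dst_bucket, dst_key=None):
    """Copy an object to another bucket (hypothetical helper)."""
    # Keep the same key in the destination unless told otherwise
    if dst_key is None:
        dst_key = src_key
    copy_source = {"Bucket": src_bucket, "Key": src_key}
    s3_resource.Object(dst_bucket, dst_key).copy_from(CopySource=copy_source)
    return f"{src_bucket}/{src_key} -> {dst_bucket}/{dst_key}"
```

The caller needs read access to the source object and write access to the destination bucket.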

How to create S3 Bucket Policy using Boto3?

To specify requirements, conditions, or restrictions for accessing the Amazon S3 Bucket, you have to use Amazon S3 Bucket Policies. Here’s an example of an Amazon S3 Bucket Policy that enforces HTTPS (TLS) connections to the S3 bucket.

Let’s use the Boto3 library to set up this policy to the S3 bucket:

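    #!/usr/bin/env python3

    import json

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_client = boto3.client("s3", region_name=AWS_REGION)

    BUCKET_RESOURCES = [
        f"arn:aws:s3:::{S3_BUCKET_NAME}/*",
        f"arn:aws:s3:::{S3_BUCKET_NAME}"
    ]

    # Condition operators inside a single Condition block are combined with AND,
    # so each check gets its own Deny statement.
    BUCKET_POLICY = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny requests that are not sent over HTTPS
                "Principal": {"AWS": "*"},
                "Action": ["s3:*"],
                "Resource": BUCKET_RESOURCES,
                "Effect": "Deny",
                "Condition": {
                    "Bool": {"aws:SecureTransport": "false"}
                }
            },
            {
                # Deny requests that use a TLS version older than 1.2
                "Principal": {"AWS": "*"},
                "Action": ["s3:*"],
                "Resource": BUCKET_RESOURCES,
                "Effect": "Deny",
                "Condition": {
                    "NumericLessThan": {"s3:TlsVersion": 1.2}
                }
            }
        ]
    }

    policy_document = json.dumps(BUCKET_POLICY)

    s3_client.put_bucket_policy(Bucket=S3_BUCKET_NAME, Policy=policy_document)

    print('Bucket Policy has been set up')

Here’s an example output: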

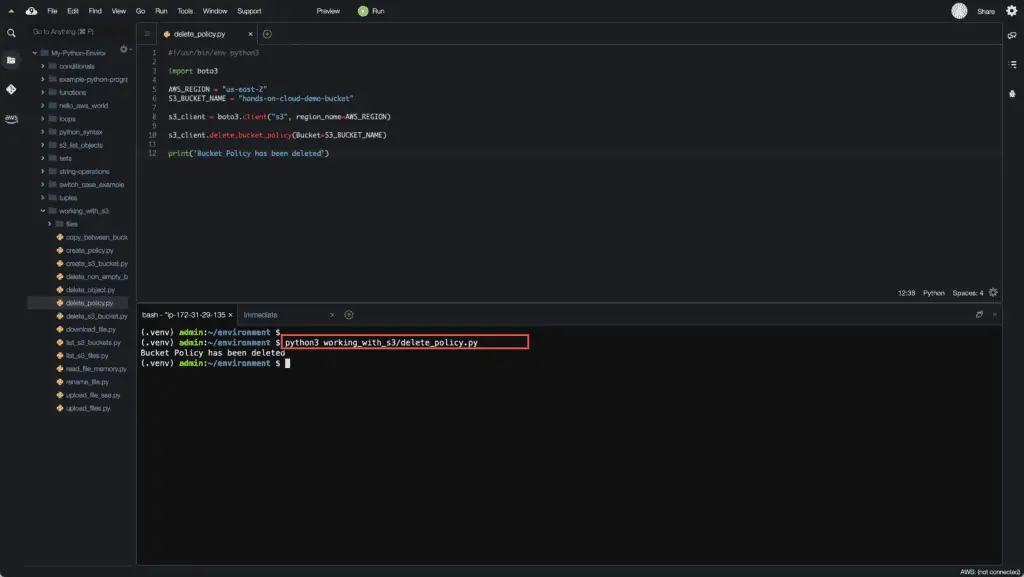
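To verify what is currently attached to the bucket, you can read the policy back with the S3 client’s get_bucket_policy() method, which returns the policy document as a JSON string. Here is a short sketch. The function name get_policy_statements is hypothetical, and the client is passed in as an argument:

```python
import json


def get_policy_statements(s3_client, bucket_name):
    """Return the statements of the bucket policy (hypothetical helper)."""
    response = s3_client.get_bucket_policy(Bucket=bucket_name)
    # The "Policy" value is a JSON string, not a dictionary
    policy = json.loads(response["Policy"])
    return policy.get("Statement", [])
```

If the bucket has no policy, the call is expected to raise an error rather than return an empty document, so you may want to handle that case in real code.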

How to delete S3 Bucket Policy using Boto3?

To delete the S3 Bucket Policy, you can use the delete_bucket_policy() method of the S3 client:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_client = boto3.client("s3", region_name=AWS_REGION)

    s3_client.delete_bucket_policy(Bucket=S3_BUCKET_NAME)

    print('Bucket Policy has been deleted')

Here’s an execution output:

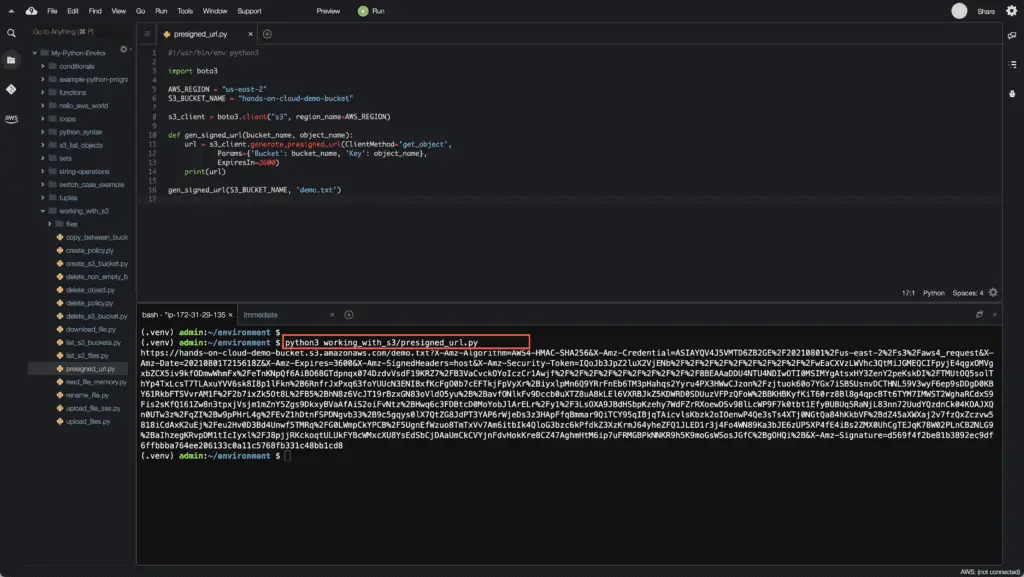

How to generate S3 presigned URL?

If you need to share files from a non-public Amazon S3 Bucket without granting access to AWS APIs to the final user, you can create a pre-signed URL to the Bucket Object:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_client = boto3.client("s3", region_name=AWS_REGION)

    def gen_signed_url(bucket_name, object_name):
        url = s3_client.generate_presigned_url(
            ClientMethod='get_object',
            Params={'Bucket': bucket_name, 'Key': object_name},
            ExpiresIn=3600)
        print(url)

    gen_signed_url(S3_BUCKET_NAME, 'demo.txt')

The S3 client’s generate_presigned_url() method accepts the following parameters:

- ClientMethod (string) – The Boto3 S3 client method to presign for

- Params (dict) – The parameters that need to be passed to the ClientMethod

- ExpiresIn (int) – The number of seconds the presigned URL is valid for. By default, the presigned URL expires in an hour (3600 seconds)

- HttpMethod (string) – The HTTP method to use for the generated URL. By default, the HTTP method is whatever is used in the method’s model

Here’s an example output:

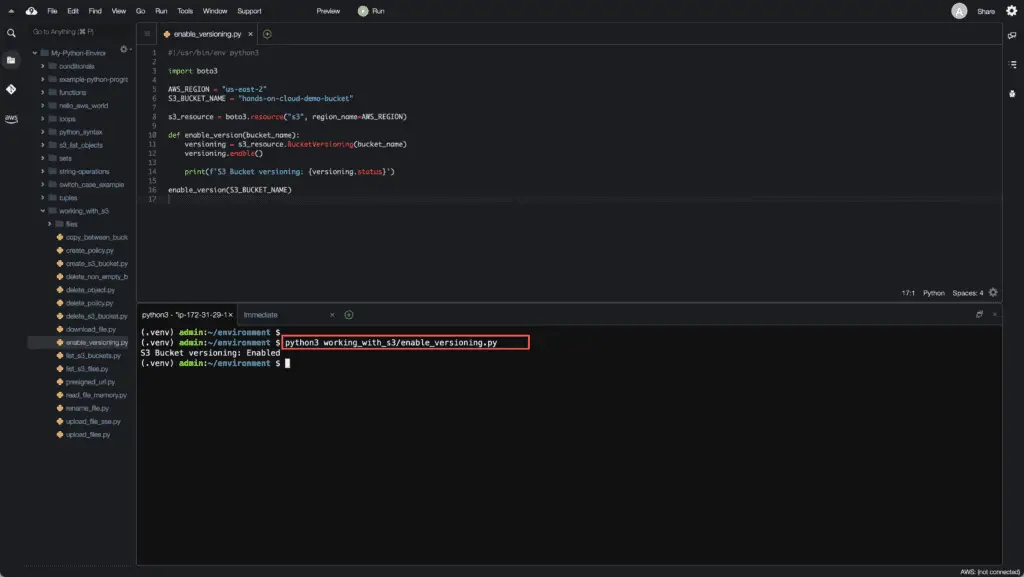
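Because ClientMethod can name other client methods, the same call can produce a URL for uploading. Here is a sketch that presigns put_object, so that the holder of the URL can upload a single object with an HTTP PUT request. The function name gen_upload_url and the 15-minute default are our own choices for illustration:

```python
def gen_upload_url(s3_client, bucket_name, object_name, expires_in=900):
    """Return a presigned URL for uploading one object (hypothetical helper)."""
    return s3_client.generate_presigned_url(
        ClientMethod="put_object",
        Params={"Bucket": bucket_name, "Key": object_name},
        # Shorter lifetimes limit the damage if the URL leaks
        ExpiresIn=expires_in,
    )
```

As with download URLs, the upload is performed with the permissions of the credentials that signed the URL.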

How to enable S3 Bucket versioning using Boto3?

S3 Bucket versioning allows you to keep multiple versions of each object in the S3 Bucket over time. It also safeguards against accidental deletion or overwriting of existing objects. By default, a request for a versioned object retrieves its most recent version. Every version is stored as a full object, so the storage consumed is the sum of the sizes of all versions; for example, a 2 MB file with 5 versions takes up 10 MB of storage.

To enable versioning for the S3 Bucket, you need to call the enable() method of the BucketVersioning resource. The example below wraps this call in an enable_version() function:

    #!/usr/bin/env python3

    import boto3

    AWS_REGION = "us-east-2"
    S3_BUCKET_NAME = "hands-on-cloud-demo-bucket"

    s3_resource = boto3.resource("s3", region_name=AWS_REGION)

    def enable_version(bucket_name):
        versioning = s3_resource.BucketVersioning(bucket_name)
        versioning.enable()
        print(f'S3 Bucket versioning: {versioning.status}')

    enable_version(S3_BUCKET_NAME)
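To check the versioning state without changing it, you can use the S3 client’s get_bucket_versioning() method. The response contains a Status key only after versioning has been configured on the bucket at least once. Here is a minimal sketch. The function name get_versioning_status and the "Unversioned" fallback label are our own choices:

```python
def get_versioning_status(s3_client, bucket_name):
    """Return 'Enabled', 'Suspended', or 'Unversioned' (hypothetical helper)."""
    response = s3_client.get_bucket_versioning(Bucket=bucket_name)
    # No "Status" key means versioning was never configured for this bucket
    return response.get("Status", "Unversioned")
```

This can be handy before a cleanup, because a bucket with enabled or suspended versioning may still hold old object versions.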

Summary

In this article, we’ve covered examples of using Boto3 to manage the Amazon S3 service, including S3 Buckets, Objects, Bucket Policies, Versioning, and presigned URLs.