More and more companies nowadays are adopting Serverless technologies. AWS Lambda is the essential building block of any Serverless cloud application or automation. Whatever AWS Lambda is doing for you, it is very important to test its code to ensure it’s working exactly as expected. This article covers how to unit test a Python AWS Lambda function that interacts with the DynamoDB and S3 services, using the moto library.

Demo Lambda Function

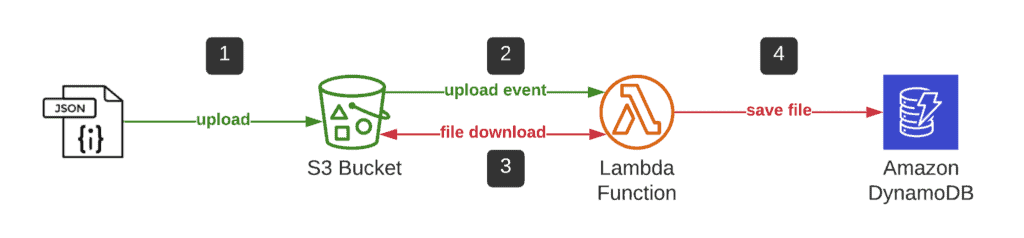

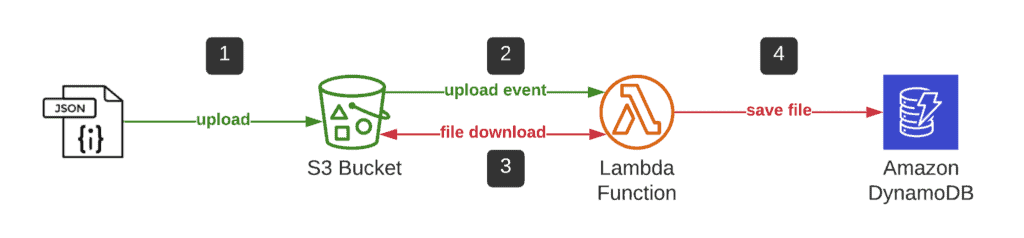

For example, let’s test a Lambda function that reads the file uploaded to the S3 bucket and saves its content to DynamoDB.

So, here are several places that need to be tested:

- File upload to S3 – we need to make sure that during the test cycle, we’ll be dealing with the same file and the same content

- File download – we must ensure that our Lambda function can download, read, and parse the file.

- Save the file to DynamoDB – we need to ensure that our Lambda function code can save the file of the specified structure to DynamoDB.

- Upload event – we must ensure that our Lambda function can process standard events from the S3 bucket.

Here’s the Lambda function code that we’re going to test:

    #!/usr/bin/env python3
    '''
    Simple Lambda function that reads file from S3 bucket and saves
    its content to DynamoDB table
    '''

    import json
    import os
    import logging

    import boto3

    LOGGER = logging.getLogger()
    LOGGER.setLevel(logging.INFO)

    AWS_REGION = os.environ.get('AWS_REGION', 'us-east-1')
    DB_TABLE_NAME = os.environ.get('DB_TABLE_NAME', 'amaksimov-test')

    # Clients are created once, at import time, and reused between invocations
    S3_CLIENT = boto3.client('s3')
    DYNAMODB_CLIENT = boto3.resource('dynamodb', region_name=AWS_REGION)
    DYNAMODB_TABLE = DYNAMODB_CLIENT.Table(DB_TABLE_NAME)


    def get_data_from_file(bucket, key):
        '''
        Function reads json file uploaded to S3 bucket
        '''
        response = S3_CLIENT.get_object(Bucket=bucket, Key=key)
        content = response['Body']
        data = json.loads(content.read())
        LOGGER.info('%s/%s file content: %s', bucket, key, data)
        return data


    def save_data_to_db(data):
        '''
        Function saves data to DynamoDB table
        '''
        result = DYNAMODB_TABLE.put_item(Item=data)
        return result


    def handler(event, context):
        '''
        Main Lambda function method
        '''
        LOGGER.info('Event structure: %s', event)

        for record in event['Records']:
            s3_bucket = record['s3']['bucket']['name']
            s3_file = record['s3']['object']['key']

            data = get_data_from_file(s3_bucket, s3_file)

            for item in data:
                save_data_to_db(item)

        return {
            'StatusCode': 200,
            'Message': 'SUCCESS'
        }

The code itself is self-explanatory, so we won’t spend time describing it and will move straight to the testing.
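One detail of the handler is worth keeping in mind when testing with real uploads: S3 URL-encodes object keys in its event notifications, so a space in a file name arrives as `+`. The function above passes the key through unchanged, which is fine for `prices/new_prices.json`. Here is a minimal sketch of how the event parsing could decode keys, assuming a hypothetical helper named `extract_s3_objects` that is not part of the original function:

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event (hypothetical helper)."""
    pairs = []
    for record in event.get('Records', []):
        bucket = record['s3']['bucket']['name']
        # Keys in S3 event notifications are URL-encoded, so decode them.
        key = unquote_plus(record['s3']['object']['key'])
        pairs.append((bucket, key))
    return pairs


# Illustrative event with an encoded space in the key (made-up names).
sample_event = {
    'Records': [
        {'s3': {'bucket': {'name': 'example-bucket'},
                'object': {'key': 'prices/new+prices.json'}}}
    ]
}
print(extract_s3_objects(sample_event))
```

Keeping the parsing in a small pure function like this also makes it testable without any AWS mocking at all.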

So, how can we ensure that the code above is working?

There are two ways of doing that:

- Deploy and test AWS Lambda manually

- Unit test AWS Lambda automatically before the deployment

Let’s start with the manual testing first.

Manual testing

For manual testing of your AWS Lambda function, you should have:

- S3 bucket

- DynamoDB table

- Correct permissions for Lambda to access both

Let’s assume that you have all of them. If not, we recommend checking the article Terraform – Deploy Lambda To Copy Files Between S3 Buckets, which covers most of the Terraform deployment automation.

Alternatively, you can create them using our AWS CLI guides.

Example of test file/test data

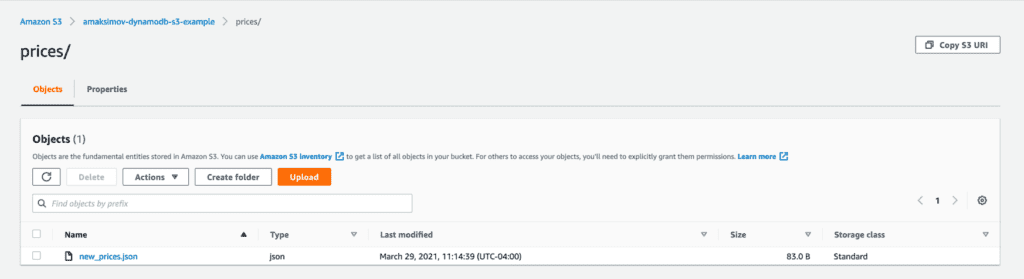

Here’s the new_prices.json file content, which is uploaded to the prices folder in the S3 bucket:

    cat new_prices.json
    [
      {"product": "Apple", "price": 15},
      {"product": "Orange", "price": 25}
    ]

This file is placed in the S3 bucket in the prices folder.
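The sample prices are integers, which the boto3 DynamoDB resource accepts as they are. If a file carried fractional prices, boto3 would reject Python floats and expect `Decimal` values instead. This is a minimal sketch of one way to handle that while parsing, using made-up data with a fractional price (the Lambda function above does not do this):

```python
import json
from decimal import Decimal

# Illustrative content: same shape as new_prices.json, but with a float.
raw = '[{"product": "Apple", "price": 15.5}, {"product": "Orange", "price": 25}]'

# parse_float converts every JSON float into Decimal while parsing;
# integers such as 25 stay plain ints.
items = json.loads(raw, parse_float=Decimal)

for item in items:
    print(item['product'], repr(item['price']))
```

Passing `parse_float=Decimal` keeps the conversion in one place, so the items can go to `put_item` without a separate clean-up pass.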

Configuring test event

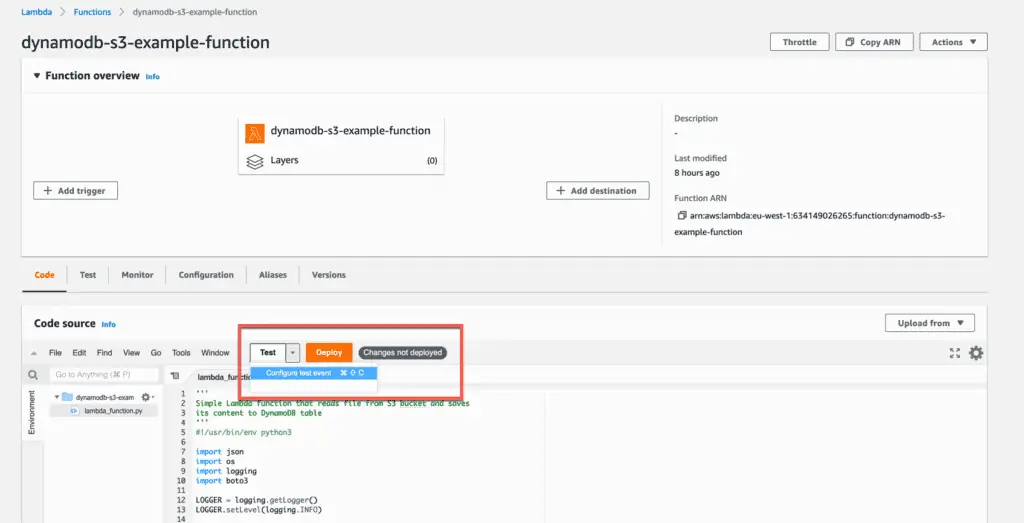

Now, to test the Lambda function manually, open it in the AWS web console and select “Configure test event” from the dropdown menu of the “Test” button:

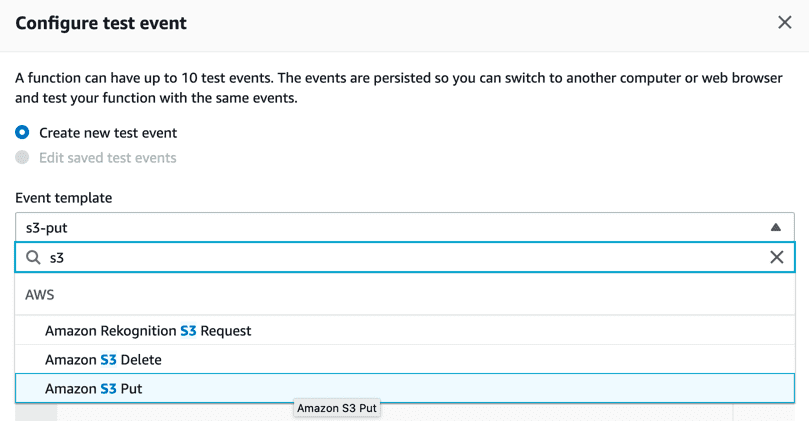

Now you can select a test event for almost any AWS service with Lambda integration. We need an “Amazon S3 Put” example:

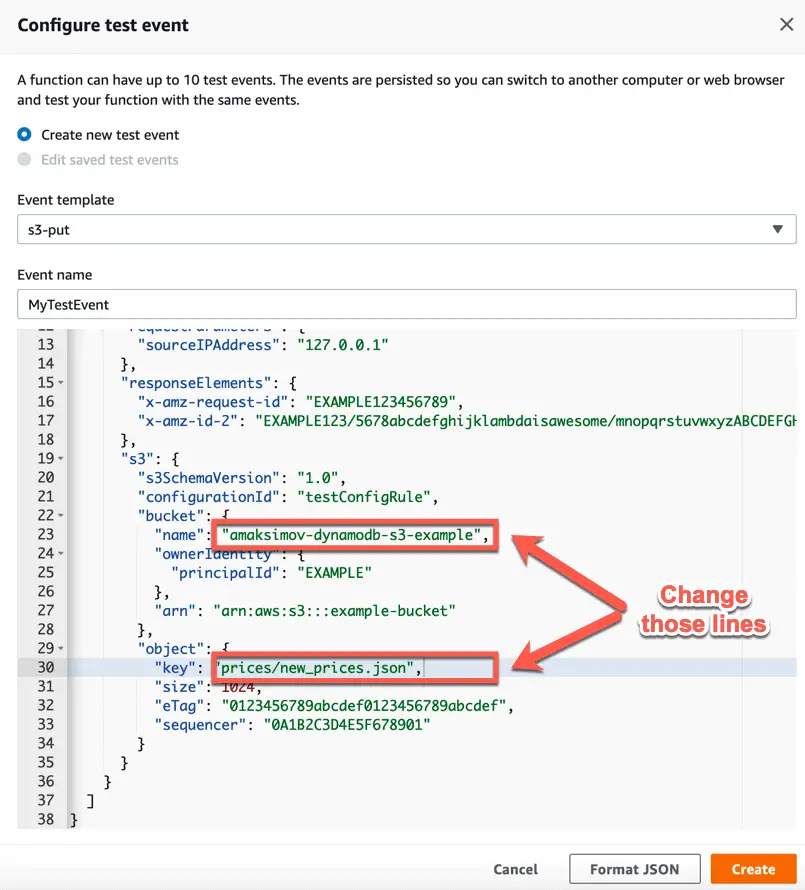

Next, you need to set up the test Event name, change the S3 bucket name, and test file location (key):

Finally, hit the “Create” button to save your test event.

Every time you click the “Test” button, it will send the same test event to your Lambda function.
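The console template contains many fields, but the handler only reads the bucket name and the object key. As a rough sketch, the relevant part of such an event can also be generated in code and pasted into the console editor. The helper name `build_s3_put_event` and the bucket name are placeholders for illustration:

```python
import json


def build_s3_put_event(bucket, key):
    """Build the minimal S3 event shape the handler reads (hypothetical helper)."""
    return {
        'Records': [
            {
                'eventSource': 'aws:s3',
                'eventName': 'ObjectCreated:Put',
                's3': {
                    'bucket': {'name': bucket},
                    'object': {'key': key},
                },
            }
        ]
    }


# 'my-test-bucket' is a placeholder; use the name of your own bucket.
event = build_s3_put_event('my-test-bucket', 'prices/new_prices.json')
print(json.dumps(event, indent=2))
```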

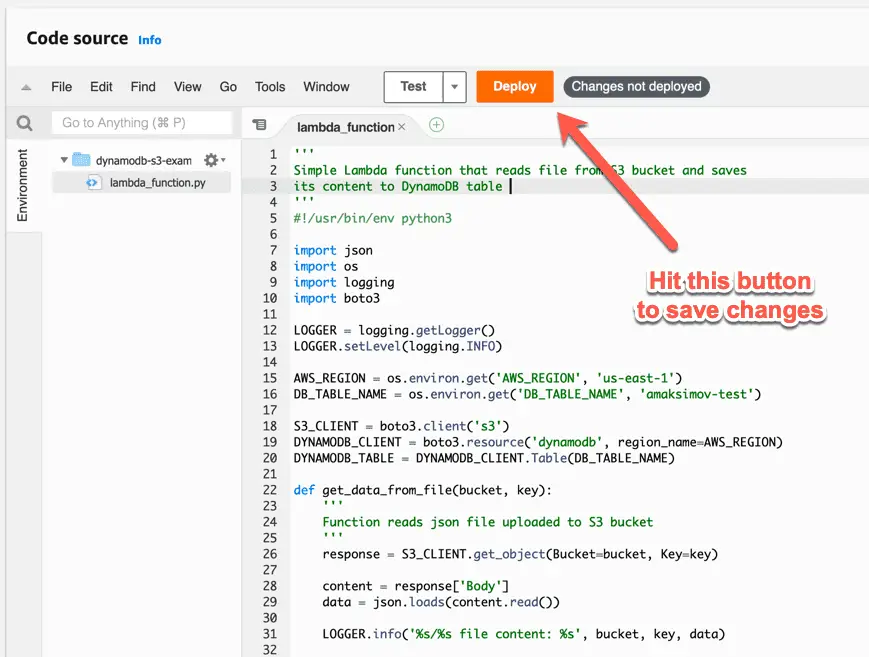

If you have not deployed your Lambda function yet, do it now:

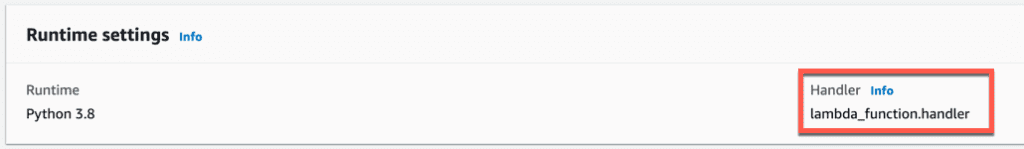

Lambda handler

Change the Lambda function handler to lambda_function.handler:

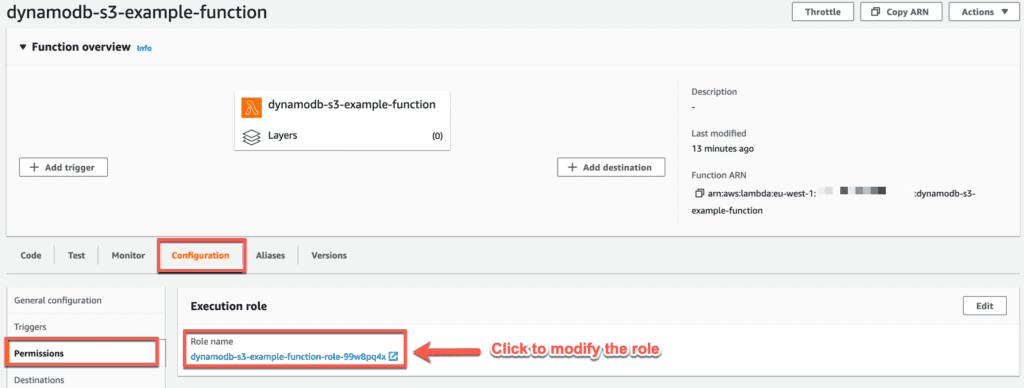

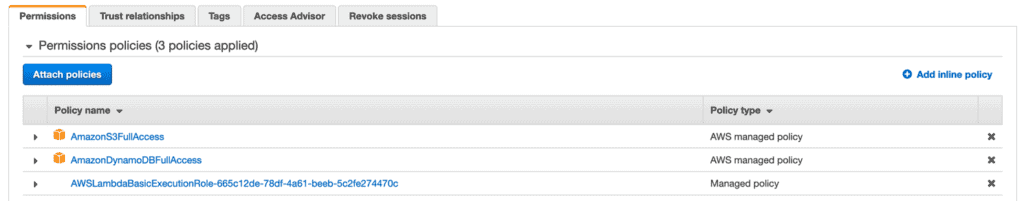

Lambda role (permissions)

If you’re doing the process manually, you must grant the Lambda function the permissions required to access DynamoDB and the S3 bucket.

The quickest way to do this is to add them to the already created Lambda function role:

For real production deployment, it is strongly recommended to provide only necessary permissions to your Lambda function, but for this example, we’ll add the following policies:

- AmazonS3FullAccess

- AmazonDynamoDBFullAccess

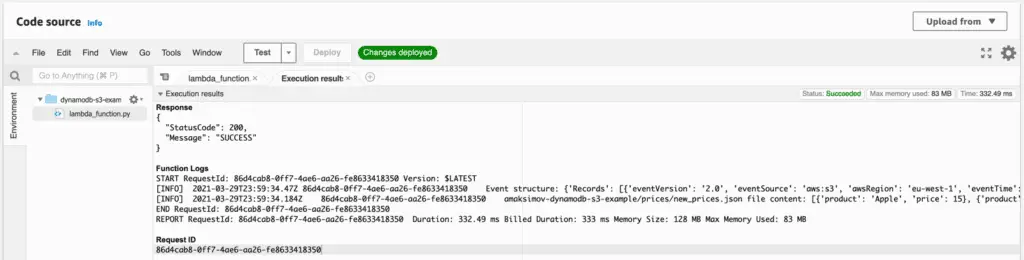

Test execution

Now, your Lambda has enough privileges, and you can press the “Test” button to test it manually:

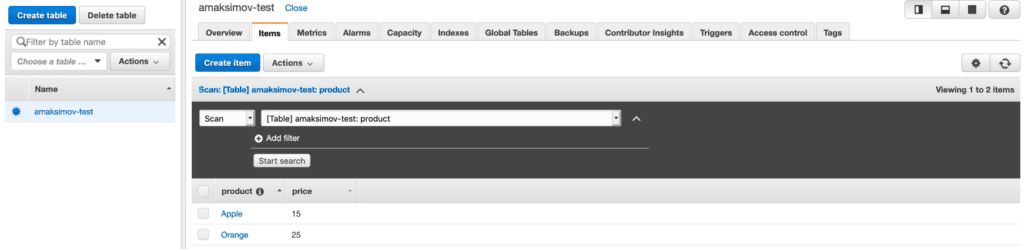

You should see your test data in the DynamoDB table:

Automated Unit Testing

There are lots of downsides to manual operations. The most important are:

- All manual operations are time-consuming

- Manual testing may introduce inconsistency and errors

Here’s where test automation can help.

For Python Lambda functions, we can do it using the moto library. This library allows us to mock AWS services and test our code before deploying it. And because everything runs locally, without any calls to AWS, the tests are extremely fast.

Of course, not all problems can be solved using moto. Still, if you’re using it, you can reduce Serverless application development time by up to 5 times (an estimate based on the author’s personal experience).
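For cases that moto does not cover, one common fallback is a hand-written stub from the standard library’s `unittest.mock`. The sketch below is not moto. It only illustrates the underlying idea of replacing the AWS client with a fake, and it assumes a hypothetical variant of `get_data_from_file` (called `read_json_object` here) that receives the client as an argument:

```python
import io
import json
from unittest import mock


def read_json_object(s3_client, bucket, key):
    """Variant of get_data_from_file that takes the client as an argument."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    return json.loads(response['Body'].read())


# Hand-written stub: get_object returns a dict with a file-like Body,
# which is the only part of the response this function touches.
payload = [{'product': 'Apple', 'price': 15}]
fake_s3 = mock.MagicMock()
fake_s3.get_object.return_value = {
    'Body': io.BytesIO(json.dumps(payload).encode('utf-8'))
}

result = read_json_object(fake_s3, 'any-bucket', 'prices/new_prices.json')

# Verify that the function asked for the right object.
fake_s3.get_object.assert_called_once_with(
    Bucket='any-bucket', Key='prices/new_prices.json')
print(result)
```

The trade-off is that a stub like this knows nothing about S3 behavior, so every response has to be written by hand. moto keeps an in-memory model of the service, which is why it is used for the rest of this article.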

Let’s look at how we can test the function above using moto.

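As a reminder, we’ll be testing the integrations listed at the beginning of the article: the file upload to S3, the file download, the write to DynamoDB, and the processing of the S3 upload event.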

Requirements

For the examples below, we’ll use the following Python libraries:

    cat requirements_dev.txt
    moto==2.0.2
    coverage==5.5
    pylint==2.7.2
    pytest==6.2.2

You can install them in the virtual environment using the following commands:

    python3 -m venv .venv
    source .venv/bin/activate
    pip install --upgrade pip
    pip install -r requirements_dev.txt

Project structure

In this article, we’ll use the following project structure:

    tree
    .
    ├── index.py
    ├── requirements_dev.txt
    └── test_index.py

    0 directories, 3 files

Where:

- index.py is the file that contains the Lambda function code from the example above

- requirements_dev.txt is the file that contains the required Python libraries for this article

- test_index.py is the file that contains test cases for the Lambda function code

Unit-testing AWS Lambda S3 file upload events

First, let’s check the file upload to the S3 bucket and get_data_from_file() function:

    import json
    import unittest

    import boto3
    from moto import mock_s3

    S3_BUCKET_NAME = 'amaksimov-s3-bucket'
    DEFAULT_REGION = 'us-east-1'

    S3_TEST_FILE_KEY = 'prices/new_prices.json'
    S3_TEST_FILE_CONTENT = [
        {"product": "Apple", "price": 15},
        {"product": "Orange", "price": 25}
    ]


    @mock_s3
    class TestLambdaFunction(unittest.TestCase):

        def setUp(self):
            # Create the mocked bucket and upload the test file to it
            self.s3 = boto3.resource('s3', region_name=DEFAULT_REGION)
            self.s3_bucket = self.s3.create_bucket(Bucket=S3_BUCKET_NAME)
            self.s3_bucket.put_object(Key=S3_TEST_FILE_KEY,
                                      Body=json.dumps(S3_TEST_FILE_CONTENT))

        def test_get_data_from_file(self):
            # Import inside the test so that the module is loaded while the mock is active
            from index import get_data_from_file

            file_content = get_data_from_file(S3_BUCKET_NAME, S3_TEST_FILE_KEY)

            self.assertEqual(file_content, S3_TEST_FILE_CONTENT)

In this simple test example, we’re importing the required libraries, setting up global variables, and declaring the TestLambdaFunction test case class, which has two methods:

- setUp() – this method is called before every test (any method whose name starts with test_). It is very useful when you need to reset the DB or other services to the initial state.

- test_get_data_from_file() – this test imports the get_data_from_file method, executes it, and checks that the returned file content is exactly what was uploaded in setUp().

Check the Python JSON – Complete Tutorial article for more information about working with JSON.

To execute this test, run the following command:

    coverage run -m pytest

Having this test in place allows us to make sure that we’re getting the expected output from the get_data_from_file() method of our Lambda function.

Unit-testing AWS Lambda DynamoDB operations

Now, let’s add DynamoDB support to test save_data_to_db() and handler methods.

    import json
    import os
    import unittest
    from unittest import mock

    import boto3
    from moto import mock_s3
    from moto import mock_dynamodb2

    S3_BUCKET_NAME = 'amaksimov-s3-bucket'
    DEFAULT_REGION = 'us-east-1'

    S3_TEST_FILE_KEY = 'prices/new_prices.json'
    S3_TEST_FILE_CONTENT = [
        {"product": "Apple", "price": 15},
        {"product": "Orange", "price": 25}
    ]

    DYNAMODB_TABLE_NAME = 'amaksimov-dynamodb'


    @mock_s3
    @mock_dynamodb2
    @mock.patch.dict(os.environ, {'DB_TABLE_NAME': DYNAMODB_TABLE_NAME})
    class TestLambdaFunction(unittest.TestCase):

        def setUp(self):
            # S3 setup
            self.s3 = boto3.resource('s3', region_name=DEFAULT_REGION)
            self.s3_bucket = self.s3.create_bucket(Bucket=S3_BUCKET_NAME)
            self.s3_bucket.put_object(Key=S3_TEST_FILE_KEY,
                                      Body=json.dumps(S3_TEST_FILE_CONTENT))

            # DynamoDB setup
            self.dynamodb = boto3.client('dynamodb', region_name=DEFAULT_REGION)
            try:
                self.dynamodb.create_table(
                    TableName=DYNAMODB_TABLE_NAME,
                    KeySchema=[
                        {'KeyType': 'HASH', 'AttributeName': 'product'}
                    ],
                    AttributeDefinitions=[
                        {'AttributeName': 'product', 'AttributeType': 'S'}
                    ],
                    ProvisionedThroughput={
                        'ReadCapacityUnits': 5,
                        'WriteCapacityUnits': 5
                    }
                )
            except self.dynamodb.exceptions.ResourceInUseException:
                # The table already exists from an earlier test
                pass
            # Table resource, so that the tests can call scan() on it
            self.table = boto3.resource(
                'dynamodb', region_name=DEFAULT_REGION).Table(DYNAMODB_TABLE_NAME)

        def test_get_data_from_file(self):
            from index import get_data_from_file

            file_content = get_data_from_file(S3_BUCKET_NAME, S3_TEST_FILE_KEY)

            self.assertEqual(file_content, S3_TEST_FILE_CONTENT)

In addition to the previous code, we’ve added a DynamoDB table declaration with a HASH key product of type string (S).

@mock.patch.dict sets up DB_TABLE_NAME environment variable used by the Lambda function.
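index.py reads DB_TABLE_NAME once, at import time, so the variable has to be in place before the module is first imported. That appears to be why the tests import from index inside the test methods, while the patch is active. The behavior of `patch.dict` itself can be checked with a few lines of standard-library code. This sketch uses it as a context manager rather than as a class decorator:

```python
import os
from unittest import mock

# Make sure the variable is absent before the demonstration.
os.environ.pop('DB_TABLE_NAME', None)

with mock.patch.dict(os.environ, {'DB_TABLE_NAME': 'amaksimov-dynamodb'}):
    # Same lookup as in index.py, evaluated while the patch is active.
    inside = os.environ.get('DB_TABLE_NAME', 'amaksimov-test')

# On exit, patch.dict restores os.environ to its previous contents.
outside = os.environ.get('DB_TABLE_NAME', 'amaksimov-test')
print(inside, outside)
```

Inside the block the lookup returns the test table name. Outside it falls back to the default from index.py, so the patch does not leak into other code.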

Now, let’s test save_data_to_db() method:

        def test_save_data_to_db(self):
            from index import save_data_to_db

            for item in S3_TEST_FILE_CONTENT:
                save_data_to_db(item)

            # Read the table page by page (one item per page)
            db_response = self.table.scan(Limit=1)
            db_records = db_response['Items']

            while 'LastEvaluatedKey' in db_response:
                db_response = self.table.scan(
                    Limit=1,
                    ExclusiveStartKey=db_response['LastEvaluatedKey']
                )
                db_records += db_response['Items']

            self.assertEqual(len(S3_TEST_FILE_CONTENT), len(db_records))

In this test, we’re calling the save_data_to_db() method for every item from the S3_TEST_FILE_CONTENT list to save it to DynamoDB.

Then we scan the DynamoDB table and check that we’re getting back the same number of records.
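The scan loop in this test follows the usual DynamoDB paging pattern: keep calling scan() with the previous LastEvaluatedKey until the response no longer contains one. Below is a self-contained sketch of that pattern. `FakeTable` is a made-up in-memory stand-in (not boto3 and not moto) that only imitates the paging contract, and `scan_all` is a hypothetical helper name:

```python
class FakeTable:
    """In-memory stand-in that mimics the paging contract of Table.scan()."""

    def __init__(self, items):
        self._items = list(items)

    def scan(self, Limit, ExclusiveStartKey=None):
        # The real service uses the last item's primary key as the cursor;
        # this fake uses a plain list index instead.
        start = 0 if ExclusiveStartKey is None else ExclusiveStartKey['index']
        response = {'Items': self._items[start:start + Limit]}
        if start + Limit < len(self._items):
            # More data remains: hand back a cursor for the next call.
            response['LastEvaluatedKey'] = {'index': start + Limit}
        return response


def scan_all(table, page_size=1):
    """Collect every item by following LastEvaluatedKey until it disappears."""
    response = table.scan(Limit=page_size)
    records = list(response['Items'])
    while 'LastEvaluatedKey' in response:
        response = table.scan(Limit=page_size,
                              ExclusiveStartKey=response['LastEvaluatedKey'])
        records.extend(response['Items'])
    return records


table = FakeTable([{'product': 'Apple'}, {'product': 'Orange'}])
print(scan_all(table))
```

Extracting the loop into a helper like this would let several tests share it, and it makes the paging logic itself easy to verify.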

Finally, we can test the handler method.

This method will get the following S3 integration data structure in the event variable during its execution.

You could also copy and paste the event structure we created during the manual test, but the trimmed-down event used here is easier to read.

        def test_handler(self):
            from index import handler

            event = {
                'Records': [
                    {
                        's3': {
                            'bucket': {
                                'name': S3_BUCKET_NAME
                            },
                            'object': {
                                'key': S3_TEST_FILE_KEY
                            }
                        }
                    }
                ]
            }

            result = handler(event, {})

            self.assertEqual(result, {'StatusCode': 200, 'Message': 'SUCCESS'})

Final test_index.py content is the following:

    import json
    import os
    import unittest
    from unittest import mock

    import boto3
    from moto import mock_s3
    from moto import mock_dynamodb2

    S3_BUCKET_NAME = 'amaksimov-s3-bucket'
    DEFAULT_REGION = 'us-east-1'

    S3_TEST_FILE_KEY = 'prices/new_prices.json'
    S3_TEST_FILE_CONTENT = [
        {"product": "Apple", "price": 15},
        {"product": "Orange", "price": 25}
    ]

    DYNAMODB_TABLE_NAME = 'amaksimov-dynamodb'


    @mock_s3
    @mock_dynamodb2
    @mock.patch.dict(os.environ, {'DB_TABLE_NAME': DYNAMODB_TABLE_NAME})
    class TestLambdaFunction(unittest.TestCase):

        def setUp(self):
            # S3 setup
            self.s3 = boto3.resource('s3', region_name=DEFAULT_REGION)
            self.s3_bucket = self.s3.create_bucket(Bucket=S3_BUCKET_NAME)
            self.s3_bucket.put_object(Key=S3_TEST_FILE_KEY,
                                      Body=json.dumps(S3_TEST_FILE_CONTENT))

            # DynamoDB setup
            self.dynamodb = boto3.client('dynamodb', region_name=DEFAULT_REGION)
            try:
                self.dynamodb.create_table(
                    TableName=DYNAMODB_TABLE_NAME,
                    KeySchema=[
                        {'KeyType': 'HASH', 'AttributeName': 'product'}
                    ],
                    AttributeDefinitions=[
                        {'AttributeName': 'product', 'AttributeType': 'S'}
                    ],
                    ProvisionedThroughput={
                        'ReadCapacityUnits': 5,
                        'WriteCapacityUnits': 5
                    }
                )
            except self.dynamodb.exceptions.ResourceInUseException:
                # The table already exists from an earlier test
                pass
            # Table resource, so that the tests can call scan() on it
            self.table = boto3.resource(
                'dynamodb', region_name=DEFAULT_REGION).Table(DYNAMODB_TABLE_NAME)

        def test_get_data_from_file(self):
            from index import get_data_from_file

            file_content = get_data_from_file(S3_BUCKET_NAME, S3_TEST_FILE_KEY)

            self.assertEqual(file_content, S3_TEST_FILE_CONTENT)

        def test_save_data_to_db(self):
            from index import save_data_to_db

            for item in S3_TEST_FILE_CONTENT:
                save_data_to_db(item)

            # Read the table page by page (one item per page)
            db_response = self.table.scan(Limit=1)
            db_records = db_response['Items']

            while 'LastEvaluatedKey' in db_response:
                db_response = self.table.scan(
                    Limit=1,
                    ExclusiveStartKey=db_response['LastEvaluatedKey']
                )
                db_records += db_response['Items']

            self.assertEqual(len(S3_TEST_FILE_CONTENT), len(db_records))

        def test_handler(self):
            from index import handler

            event = {
                'Records': [
                    {
                        's3': {
                            'bucket': {
                                'name': S3_BUCKET_NAME
                            },
                            'object': {
                                'key': S3_TEST_FILE_KEY
                            }
                        }
                    }
                ]
            }

            result = handler(event, {})

            self.assertEqual(result, {'StatusCode': 200, 'Message': 'SUCCESS'})

Running automated unit-tests

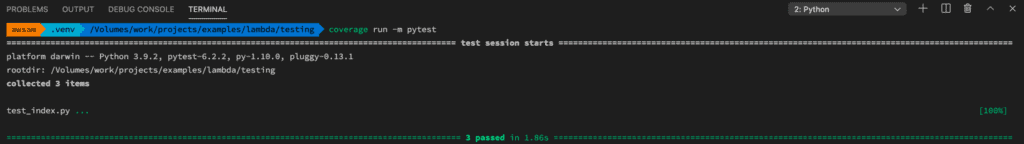

If you run the tests again, you should see that all three tests pass:

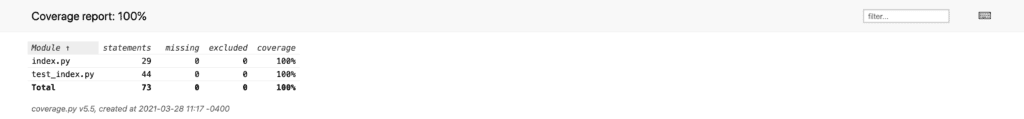

To get test execution statistics, execute the following coverage commands:

    coverage html --omit='.venv/*'
    open htmlcov/index.html

Here’s an expected web page:

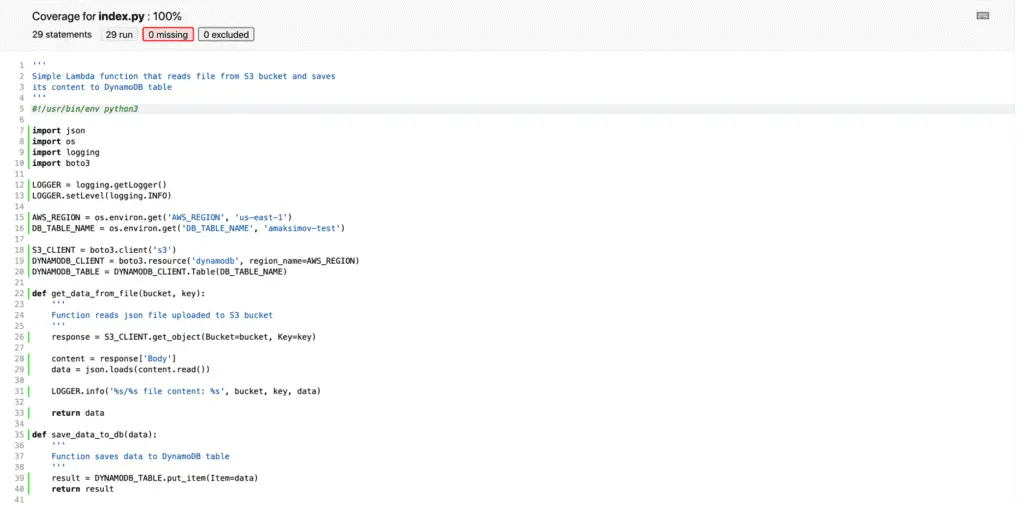

The report gives you more information about which parts of your code are covered by tests and which are not.

Summary

In this article, we covered the process of manual and automatic unit testing of your AWS Lambda function code by testing the S3 file upload event and DynamoDB put_item and scan operations.