Choosing the Right Amazon EC2 Instance Types: A Comprehensive Guide

Are you looking to understand the various Amazon EC2 instance types available? Choosing the right instance type is crucial for optimizing the performance and cost-effectiveness of your workloads on the AWS cloud. This comprehensive guide delves into Amazon EC2 instance types, providing insights into their characteristics, performance metrics, pricing options, and ideal use cases. Whether you’re running general-purpose applications, compute-intensive workloads, memory-intensive tasks, or storage-focused operations, we’ll help you navigate the diverse range of EC2 instance families to find the perfect fit for your needs.

By examining the factors influencing instance type selection, such as vCPUs, memory, storage options, and network performance, you’ll understand how each type caters to different workload requirements. We’ll also explore real-world case studies showcasing how businesses successfully choose the right EC2 instance types for their specific use cases, enabling them to scale, process big data, and leverage GPU acceleration effectively. Additionally, we’ll share best practices for optimizing EC2 instance selection, including right-sizing instances, utilizing spot instances and savings plans, and monitoring and performance-tuning techniques. Join us on this journey to unlock the power of Amazon EC2 instance types and maximize the performance and efficiency of your applications on AWS.

Introduction

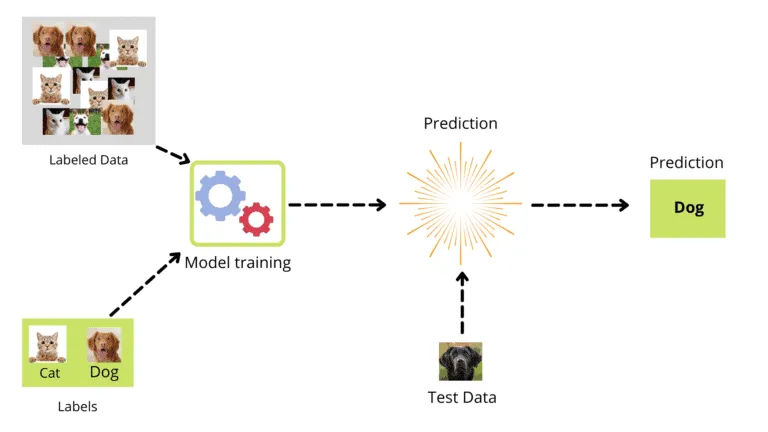

Welcome to our comprehensive guide on choosing the right Amazon EC2 instance types! This article explores the factors to consider when selecting instance types for your cloud workloads. Whether you are running web applications, big data processing, or machine learning workloads, understanding the different EC2 instance types is crucial for optimizing performance, cost-efficiency, and overall resource utilization.

In this guide, we’ll cover the following topics:

- Understanding Amazon EC2 Instance Types: Get familiar with EC2 instance types and how they influence your application’s performance and capabilities.

- Factors to Consider when Choosing Instance Types: Discover the key factors to consider when selecting the most suitable instance types for your specific workload requirements.

- Performance Metrics: Learn about important performance metrics such as CPU, memory, storage, and network capabilities and how they impact your application’s performance.

- Pricing Options: Explore the pricing options available for EC2 instances, including On-Demand, Reserved, and Spot Instances, and understand how they can affect your cost structure.

- Use Cases and Workloads: Dive into different use cases and workloads, such as compute-intensive, memory-intensive, storage-intensive, and GPU-accelerated workloads, to determine the ideal instance types for your specific application requirements.

Throughout the article, we’ll provide illustrative examples using popular tools such as AWS CLI, Terraform, or Python to demonstrate how to leverage these tools to interact with EC2 instances and make informed decisions.

So, whether you’re a developer, a DevOps engineer, or a cloud architect, this guide will equip you with the knowledge and tools necessary to navigate the vast landscape of Amazon EC2 instance types and choose the best options for your unique use cases.

Let’s dive in and explore the world of Amazon EC2 instance types together!

Understanding Amazon EC2 Instance Types

When working with Amazon EC2, it’s essential to have a solid understanding of the different instance types available and their characteristics. Let’s explore the key points you need to know:

- Instance Type Classification: Amazon EC2 offers various instance types, each designed to cater to specific workload requirements. Instance types are classified into families based on their intended use cases, such as general-purpose, compute-optimized, memory-optimized, storage-optimized, and accelerated computing instances.

- Performance Metrics: Instance types come with varying performance characteristics. Here are some important metrics to consider:

  - CPU: Instances can have different CPU capabilities, ranging from a few cores to high-performance processors optimized for specific workloads.

  - Memory: The amount of RAM available in an instance can greatly impact performance, especially for memory-intensive applications and databases.

  - Storage: Instance types offer different storage options, including local instance storage, Amazon EBS volumes, and Amazon EFS file systems.

  - Network: Network performance varies across instance types, affecting data transfer rates and latency.

- Instance Size Flexibility: Each instance family typically consists of multiple instance sizes. These sizes vary in vCPUs, memory, and storage options, allowing you to choose the right resources for your application.

- Virtualization Technologies: Amazon EC2 leverages different virtualization technologies, including Xen and Nitro, to provide efficient and secure virtualization of instances. Understanding these technologies can help you make better decisions when selecting instance types.
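The family and size classification above is visible in the instance type name itself: `c6g.xlarge` reads as family `c`, generation `6`, an extra attribute `g`, and size `xlarge`. The following is a minimal Python sketch of that reading. The helper name `parse_instance_type` and the regular expression are assumptions made for illustration, and the pattern only covers common names; some special-purpose types use other naming schemes and are rejected.

```python
import re

# Pattern for common names such as "m5.large" or "c6g.xlarge":
# family letters, generation digits, optional attribute letters, then the size.
_NAME_PATTERN = re.compile(r"^([a-z]+)(\d+)([a-z-]*)\.([a-z0-9]+)$")


def parse_instance_type(name):
    """Split an instance type name into family, generation, attributes, and size."""
    match = _NAME_PATTERN.match(name.lower())
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name!r}")
    family, generation, attributes, size = match.groups()
    return {
        "family": family,
        "generation": int(generation),
        "attributes": attributes,
        "size": size,
    }


print(parse_instance_type("c6g.xlarge"))
```

Grouping names by the parsed `family` and `generation` is one simple way to see which sizes a family offers.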

To illustrate these concepts, consider an example using the AWS CLI. You can use the following command to list all available EC2 instance types:

```shell
aws ec2 describe-instance-types
```

You can also use Terraform or Python to interact with EC2 instance types programmatically and retrieve specific details, such as pricing information or performance metrics.
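As a rough Python counterpart to the CLI call, the sketch below pages through the same `DescribeInstanceTypes` API with boto3 and reduces each entry to a name, vCPU count, and memory size. The function names, the default region, and the hand-written `sample` entry are assumptions for illustration. The live call needs boto3 and AWS credentials, so only the offline part runs at import time.

```python
def summarize(instance_type_info):
    """Reduce one describe-instance-types entry to the fields compared in this guide."""
    return {
        "name": instance_type_info["InstanceType"],
        "vcpus": instance_type_info["VCpuInfo"]["DefaultVCpus"],
        "memory_gib": instance_type_info["MemoryInfo"]["SizeInMiB"] / 1024,
    }


def list_instance_types(region_name="us-east-1"):
    """Fetch and summarize every instance type in a region (needs boto3 and credentials)."""
    import boto3  # imported lazily so summarize() works without the SDK installed

    client = boto3.client("ec2", region_name=region_name)
    paginator = client.get_paginator("describe_instance_types")
    summaries = []
    for page in paginator.paginate():
        summaries.extend(summarize(item) for item in page["InstanceTypes"])
    return sorted(summaries, key=lambda entry: entry["name"])


# Hand-written sample in the shape of one API entry, so the sketch runs offline.
sample = {
    "InstanceType": "m5.large",
    "VCpuInfo": {"DefaultVCpus": 2},
    "MemoryInfo": {"SizeInMiB": 8192},
}
print(summarize(sample))
```

Calling `list_instance_types()` in an environment with credentials should return one summary per instance type in the chosen region.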

By understanding Amazon EC2 instance types and their characteristics, you’ll be better equipped to choose the right instances for your specific workloads. In the next section, we’ll delve into the factors you should consider when selecting instance types, ensuring optimal performance and cost-efficiency.

Factors to Consider when Choosing Instance Types

When selecting Amazon EC2 instance types, several important factors must be considered. Let’s explore these factors in detail:

Performance Metrics

To ensure optimal performance for your workloads, pay close attention to the following performance metrics:

- CPU: Evaluate the CPU capabilities of different instance types. Consider the number of cores, clock speed, and whether they are optimized for general-purpose computing or specialized workloads.

- Memory: Assess the amount of RAM available in each instance type. Memory-intensive applications may require larger memory sizes to avoid performance bottlenecks.

- Storage: Consider the storage options provided by each instance type. Determine if local instance storage, Amazon EBS volumes, or Amazon EFS file systems best suit your application’s storage requirements.

- Network: Evaluate the network performance of instance types. Look at the network bandwidth, data transfer rates, and latency to ensure optimal connectivity for your workloads.

You can utilize tools like the AWS CLI to understand these performance metrics further. For example, you can use the following command to retrieve information about CPU and memory for a specific instance type:

```shell
# Print the sustained clock speed (GHz) and memory size (MiB) for one instance type.
aws ec2 describe-instance-types \
  --instance-types <instance-type> \
  --query "InstanceTypes[0].[ProcessorInfo.SustainedClockSpeedInGhz, MemoryInfo.SizeInMiB]"
```

Pricing Options

Consider the pricing options available for EC2 instances to optimize your cost structure. Here are some key pricing models to explore:

- On-Demand Instances: These instances offer flexibility with no long-term commitments. Depending on the instance type, you pay for the compute capacity by the hour or second.

- Reserved Instances: Reserved Instances provide significant cost savings for steady-state workloads with predictable usage. You can enjoy discounted hourly rates by committing to a specific instance type and term (1 or 3 years).

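- Spot Instances: Spot Instances let you use spare EC2 capacity at the current Spot price, potentially providing substantial savings compared to On-Demand instances. You can optionally set a maximum price you are willing to pay. However, remember that availability fluctuates with demand, and AWS can reclaim Spot capacity after a two-minute warning.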
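To make the trade-off between these models concrete, here is a small Python sketch that turns an hourly rate into a monthly figure. It uses the m5.large example rate of $0.096/hour that appears in this guide's comparison chart. The Reserved and Spot discount percentages are purely illustrative assumptions, not published AWS figures, and `monthly_cost` is a hypothetical helper.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def monthly_cost(hourly_rate, hours=HOURS_PER_MONTH, discount=0.0):
    """Return the monthly cost for an hourly rate after a fractional discount."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return round(hourly_rate * (1.0 - discount) * hours, 2)


on_demand_rate = 0.096  # m5.large example rate from this guide's comparison chart

# The discounts below are illustrative assumptions, not published AWS figures.
scenarios = {
    "On-Demand": 0.0,
    "Reserved (assumed 40% off)": 0.40,
    "Spot (assumed 70% off)": 0.70,
}
for label, discount in scenarios.items():
    print(f"{label}: ${monthly_cost(on_demand_rate, discount=discount):.2f}/month")
```

Replacing the assumed discounts with rates from your own account gives a quick first estimate before committing to a term.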

To analyze pricing programmatically, you can use the AWS CLI. For instance, the following command lists the Reserved Instance offerings, including their prices, for a specific instance type:

```shell
aws ec2 describe-reserved-instances-offerings \
  --instance-type <instance-type>
```

Use Cases and Workloads

Consider the specific use cases and workloads you intend to run on your EC2 instances. Different applications have varying requirements, and selecting the right instance type can significantly impact performance and efficiency. Here are a few examples:

- Compute-Intensive Workloads: Applications requiring significant processing power and high CPU performance, such as scientific simulations or video encoding, benefit from compute-optimized instance types.

- Memory-Intensive Workloads: Databases, caching systems, and in-memory analytics platforms often require ample memory. Memory-optimized instance types provide higher memory capacity for such workloads.

- Storage-Intensive Workloads: Applications that demand large-scale data processing or require high-throughput storage benefit from storage-optimized instance types with local NVMe storage or high-performance Amazon EBS options.

- GPU-Accelerated Workloads: Machine learning, rendering, and other GPU-accelerated workloads require specialized instances with powerful GPUs.

Consider your specific use case and workload requirements to select the most appropriate instance types. You can leverage tools like Terraform or Python to programmatically match instance types to specific workloads, ensuring optimal performance.
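One lightweight way to do this matching in Python is a lookup table from workload category to candidate families. The sketch below uses only the example families this guide names for each category. The category keys and function names are assumptions for illustration, and the prefix match is deliberately loose so that variants such as `c5d` count as part of `c5`.

```python
# Example families named in this guide for each workload category.
FAMILIES_BY_WORKLOAD = {
    "general-purpose": ["m5", "t3"],
    "compute-intensive": ["c5", "c6g"],
    "memory-intensive": ["r5", "x1"],
    "storage-intensive": ["i3", "d2"],
    "gpu-accelerated": ["p3", "g4"],
}


def candidate_families(workload):
    """Return the example families for a workload category."""
    try:
        return FAMILIES_BY_WORKLOAD[workload]
    except KeyError:
        raise ValueError(f"unknown workload category: {workload!r}") from None


def filter_by_workload(instance_types, workload):
    """Keep the instance type names whose family prefix matches the workload."""
    prefixes = tuple(candidate_families(workload))
    return [name for name in instance_types if name.split(".")[0].startswith(prefixes)]


names = ["m5.large", "c5.xlarge", "r5.large", "c6g.medium"]
print(filter_by_workload(names, "compute-intensive"))
```

The table is a starting point for narrowing candidates, not a replacement for benchmarking the shortlisted types against your workload.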

By carefully evaluating performance metrics and pricing options and aligning instance types with your use cases and workloads, you can make well-informed decisions when choosing Amazon EC2 instances. The next section explores the different Amazon EC2 instance families, each tailored to specific workload characteristics.

Exploring the Different Amazon EC2 Instance Families

Amazon EC2 offers a diverse range of instance families designed to cater to specific workload requirements. Let’s dive into the different families and their characteristics:

General Purpose Instances

- General purpose instances balance compute, memory, and network resources.

- They suit various applications, including web servers, small to medium databases, and development/test environments.

- Popular general-purpose instances include the M5 and T3 instances.

- To list the sizes available in a general-purpose family such as M5 using the AWS CLI, you can use the following command:

```shell
aws ec2 describe-instance-types \
  --filters "Name=instance-type,Values=m5.*"
```

Compute-Optimized Instances

- Compute-optimized instances provide high-performance CPUs and are ideal for compute-intensive workloads.

- They excel in high-performance web servers, batch processing, and scientific modeling tasks.

- Prominent compute-optimized instance families include C5 and C6g.

- To retrieve the sizes in a compute-optimized family such as C5 using the AWS CLI, you can use the following command:

```shell
aws ec2 describe-instance-types \
  --filters "Name=instance-type,Values=c5.*"
```

Memory-Optimized Instances

- Memory-optimized instances are designed for memory-intensive workloads that require substantial RAM.

- They are well-suited for large-scale in-memory databases, real-time analytics, and high-performance caching.

- Notable memory-optimized instance families include R5 and X1.

- You can use the following AWS CLI command to list the sizes in a memory-optimized family such as R5:

```shell
aws ec2 describe-instance-types \
  --filters "Name=instance-type,Values=r5.*"
```

Storage-Optimized Instances

- Storage-optimized instances prioritize high-performance storage for data-intensive workloads.

- They are suitable for applications that require massive data processing, distributed file systems, and data warehousing.

- Prominent storage-optimized instance families include I3 and D2.

- To retrieve the sizes in a storage-optimized family such as I3 using the AWS CLI, you can use the following command:

```shell
aws ec2 describe-instance-types \
  --filters "Name=instance-type,Values=i3.*"
```

Accelerated Computing Instances

- Accelerated computing instances are tailored for workloads that benefit from GPU acceleration.

- They are ideal for machine learning, graphics rendering, and video transcoding.

- Prominent accelerated computing instance families include P3 and G4.

- You can use the following AWS CLI command to list the sizes in an accelerated computing family such as P3:

```shell
aws ec2 describe-instance-types \
  --filters "Name=instance-type,Values=p3.*"
```

By understanding the characteristics of each Amazon EC2 instance family, you can narrow down your choices and select the most suitable instances for your workloads. The next section provides a detailed comparison and selection process to help you make informed decisions.

Comparing and Selecting the Right Instance Type

To make an informed decision when choosing Amazon EC2 instance types, it’s essential to compare their capabilities and match them to your specific workloads. Let’s explore the steps to compare and select the right instance type:

Instance Type Comparison Chart

A helpful way to compare different EC2 instance types is by creating a comparison chart that highlights key metrics. Here’s an example of what such a chart could include:

| Instance Type | vCPUs | Memory (GiB) | Storage | Network Performance | On-Demand Price |
|---|---|---|---|---|---|
| m5.large | 2 | 8 | EBS Only | Up to 10 Gbps | $0.096/hour |
| c5.large | 2 | 4 | EBS Only | Up to 10 Gbps | $0.085/hour |
| r5.large | 2 | 16 | EBS Only | Up to 10 Gbps | $0.126/hour |
| p3.2xlarge | 8 | 61 | EBS Only | Up to 10 Gbps | $3.060/hour |

By comparing instance types, you can easily identify the differences in CPU, memory, storage, network performance, and pricing.

Matching Instance Types to Workloads

To select the right instance type for your specific workload, consider the following steps:

- Identify Workload Requirements: Determine the specific requirements of your workload in terms of CPU, memory, storage, and network performance.

- Review the Comparison Chart: Compare the instance types’ capabilities in the comparison chart and identify the instances that align closely with your workload requirements.

- Consider Cost Efficiency: Assess the pricing of the instance types and evaluate the cost efficiency based on your workload’s performance needs and budget.

- Evaluate Performance vs. Cost: Strike a balance between performance and cost by selecting an instance type that provides the necessary resources while maximizing cost efficiency.

You can use tools like Terraform or Python to match instance types to workloads programmatically. For example, using Terraform, you can define your workload requirements and filter the available EC2 instance types based on those requirements.

```hcl
# Find instance types with exactly 4 vCPUs and 8 GiB (8192 MiB) of memory.
# Filter names follow the EC2 DescribeInstanceTypes API.
data "aws_ec2_instance_types" "example" {
  filter {
    name   = "vcpu-info.default-vcpus"
    values = ["4"]
  }

  filter {
    name   = "memory-info.size-in-mib"
    values = ["8192"]
  }
}

output "matched_instance_types" {
  value = data.aws_ec2_instance_types.example.instance_types
}
```

By leveraging such tools and comparing instance types based on your workload’s requirements, you can confidently select the most suitable EC2 instances.
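The selection steps above can also be sketched in a few lines of Python. The example below copies the four rows of the comparison chart into a list and returns the cheapest type that meets a minimum vCPU and memory requirement. The `cheapest_match` helper is hypothetical, and the prices are the example figures from the chart rather than live pricing data.

```python
# Rows copied from the comparison chart above: (name, vCPUs, memory GiB, $/hour).
CHART = [
    ("m5.large", 2, 8, 0.096),
    ("c5.large", 2, 4, 0.085),
    ("r5.large", 2, 16, 0.126),
    ("p3.2xlarge", 8, 61, 3.060),
]


def cheapest_match(min_vcpus, min_memory_gib, chart=CHART):
    """Return the lowest-priced type meeting both minimums, or None if none does."""
    matches = [
        row for row in chart
        if row[1] >= min_vcpus and row[2] >= min_memory_gib
    ]
    if not matches:
        return None
    return min(matches, key=lambda row: row[3])[0]


print(cheapest_match(min_vcpus=2, min_memory_gib=8))
```

With these rows, a requirement of 2 vCPUs and 8 GiB selects `m5.large`, since `c5.large` has too little memory and the remaining matches cost more.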

The next section explores best practices for optimizing EC2 instance selection, including right-sizing instances and utilizing cost-saving options.

Best Practices for Optimizing EC2 Instance Selection

To optimize your EC2 instance selection and ensure cost-efficiency and performance, consider implementing the following best practices:

Right-Sizing Instances

- Analyze Resource Requirements: Thoroughly assess your application’s CPU, memory, and storage resource needs. Avoid overprovisioning or underutilizing resources.

- Use Monitoring and Auto Scaling: Implement monitoring tools like Amazon CloudWatch to gather performance data, and use Auto Scaling to dynamically adjust the number of instances based on workload demands.

- Consider Burstable Instances: Burstable instances, such as T3 and T4g, provide a baseline level of performance with the ability to burst when required. They are cost-effective for workloads with sporadic bursts of activity.

- Evaluate Instance Size Flexibility: Choose instance families that offer various instance sizes within the same family. This flexibility allows you to scale vertically and precisely match your workload’s resource requirements.
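As a rough illustration of right-sizing, the Python sketch below turns a series of CPU utilization percentages (for example, CloudWatch averages) into a simple hint. The `rightsizing_hint` function and its 20% and 80% thresholds are assumptions chosen for the example, not AWS recommendations. A real review would also look at memory, storage, and network metrics.

```python
from statistics import mean


def rightsizing_hint(cpu_samples, low=20.0, high=80.0):
    """Suggest a sizing action from CPU utilization percentages.

    The thresholds are illustrative defaults, not AWS guidance.
    """
    if not cpu_samples:
        raise ValueError("at least one CPU sample is required")
    average = mean(cpu_samples)
    peak = max(cpu_samples)
    if peak < low:
        return "downsize"  # even the busiest sample is below the low mark
    if average > high:
        return "upsize"  # sustained load above the high mark
    return "keep"


print(rightsizing_hint([5.0, 10.0, 12.0]))
```

Using the peak for the downsize check and the average for the upsize check keeps a single quiet or busy sample from triggering a change.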

Utilizing Spot Instances and Savings Plans

- Utilize Spot Instances: Spot Instances let you run on spare EC2 capacity at the current Spot price, offering significant cost savings. They are suitable for fault-tolerant workloads that can handle interruptions and can be combined with On-Demand instances for reliability.

- Leverage Savings Plans: Savings Plans provide flexible pricing options and savings for long-term usage commitments. They can provide substantial cost reductions for steady-state workloads.

- Combine Spot Instances and Savings Plans: To further optimize costs, consider combining Spot Instances with Savings Plans to achieve additional savings while maintaining availability and flexibility.
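A Spot request made with the AWS CLI `request-spot-instances` command reads its launch specification from a JSON file. The Python sketch below builds a minimal specification and can write it to `specification.json`. The helper names are hypothetical and the AMI ID is a placeholder, so substitute values from your own account.

```python
import json


def build_launch_specification(image_id, instance_type, key_name=None):
    """Build a minimal launch specification for a Spot request."""
    spec = {"ImageId": image_id, "InstanceType": instance_type}
    if key_name:
        spec["KeyName"] = key_name
    return spec


def write_specification(spec, path="specification.json"):
    """Write the specification as JSON so the CLI can read it via file://."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(spec, handle, indent=2)


# Placeholder AMI ID for illustration only; replace it with a real image ID.
spec = build_launch_specification("ami-0123456789abcdef0", "c5.large")
print(json.dumps(spec, indent=2))
```

Call `write_specification(spec)` once the values are real; the sketch only prints the JSON so that it has no side effects.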

To request a Spot Instance using the AWS CLI, you can use the following command, where specification.json holds the launch specification:

```shell
aws ec2 request-spot-instances \
  --instance-count 1 \
  --launch-specification file://specification.json
```

Monitoring and Performance Tuning

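- Implement Performance Monitoring: Continuously monitor your EC2 instances using tools like Amazon CloudWatch. Monitor key metrics such as CPU utilization, memory usage, and network performance to identify bottlenecks or areas of improvement. Note that memory usage is not among the default EC2 metrics; collecting it requires installing the CloudWatch agent on the instance.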

- Optimize Instance Placement: Use features like Placement Groups to enhance network performance and reduce latency for applications requiring high network throughput.

- Fine-Tune Instance Configuration: Configure operating system and application-level optimizations to maximize performance. Adjust parameters, such as TCP window scaling or buffer sizes, to improve network throughput.

- Utilize Performance Optimization Tools: AWS provides various tools like AWS Systems Manager and AWS Trusted Advisor to assess and optimize EC2 instance performance.
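For the monitoring step, CPU utilization can be pulled from CloudWatch with boto3. The sketch below separates building the request parameters, which runs offline, from the actual `get_metric_statistics` call, which needs boto3 and AWS credentials. The function names, the 24-hour window, the 5-minute period, and the default region are assumptions for illustration, and the instance ID is a placeholder.

```python
from datetime import datetime, timedelta, timezone


def cpu_metric_request(instance_id, hours=24, period_seconds=300, now=None):
    """Build get_metric_statistics parameters for an instance's CPU utilization."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": period_seconds,
        "Statistics": ["Average", "Maximum"],
    }


def fetch_cpu_datapoints(instance_id, region_name="us-east-1"):
    """Query CloudWatch and return datapoints in time order (needs boto3 and credentials)."""
    import boto3  # imported lazily so the request builder works without the SDK

    cloudwatch = boto3.client("cloudwatch", region_name=region_name)
    response = cloudwatch.get_metric_statistics(**cpu_metric_request(instance_id))
    return sorted(response["Datapoints"], key=lambda point: point["Timestamp"])


# Placeholder instance ID for illustration only.
request = cpu_metric_request("i-0123456789abcdef0")
print(request["MetricName"], request["Period"])
```

Keeping the parameter builder separate makes it easy to check the time window and period before any API call is made.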

By implementing these best practices, you can optimize your EC2 instance selection, minimize costs, and enhance the performance of your applications. The next section presents case studies that show instance type selection in practice.

Case Studies: Real-world Examples of Instance Type Selection

To illustrate the practical application of instance type selection, let’s dive into real-world case studies where businesses optimized their EC2 instance choices:

- E-commerce Website Scaling:

  - A growing e-commerce website experienced high traffic during seasonal sales.

  - They utilized Auto Scaling to adjust the number of instances based on demand.

  - They chose compute-optimized instances (C5) to handle the compute-intensive nature of their web application.

- Big Data Processing:

  - A data analytics company needed to process large volumes of data quickly.

  - They opted for memory-optimized instances (R5) to accommodate the in-memory processing requirements of their analytics workloads.

  - By utilizing the high RAM capacity of R5 instances, they achieved faster data processing times.

- Machine Learning Workloads:

  - A research organization focused on machine learning projects.

  - They leveraged GPU-accelerated instances (P3) to accelerate their training and inference workloads.

  - With high-performance GPUs, they significantly reduced the time required for model training and achieved better overall efficiency.

- Storage-Intensive Applications:

  - A media production company required high-performance storage for video rendering and editing.

  - They selected storage-optimized instances (I3) to handle the data-intensive nature of their applications.

  - The fast storage capabilities of I3 instances enabled them to process large video files efficiently.

In each case study, selecting the right EC2 instance types based on workload requirements allowed businesses to optimize their performance, scalability, and cost-effectiveness.

To programmatically determine the appropriate instance types for your specific use cases, you can utilize tools like AWS CLI, Terraform, or Python. You can make data-driven decisions by programmatically analyzing your workload requirements and comparing them against available instance types.

The FAQ that follows lists common questions about EC2 instance types.

FAQ

What are the 3 types of EC2?

What are the different types of EC2 instances in AWS?

What is a general-purpose instance?

How many EC2 instances are there in AWS?

What are EC2 instance types?

How do I know my EC2 instance type?

With the AWS CLI, you can run the following command:

```shell
aws ec2 describe-instances --instance-ids <your-instance-id> --query 'Reservations[].Instances[].InstanceType'
```

This returns the instance type for the specified instance ID. You can also check the EC2 console in the AWS Management Console, where the instance type and other details for your EC2 instances are displayed.

Conclusion

This comprehensive guide has explored the various aspects of selecting the right Amazon EC2 instance types. You can make informed decisions that align with your needs by considering factors such as workload requirements, performance metrics, pricing options, and use cases. Let’s recap the key points covered:

- Understand the different Amazon EC2 instance families and their characteristics.

- Evaluate performance metrics, pricing options, and use cases to match instance types to your workloads.

- Create an instance-type comparison chart to compare and analyze different options easily.

- Utilize tools like AWS CLI, Terraform, or Python to match instance types to your workloads programmatically.

- Optimize EC2 instance selection through right-sizing, utilizing Spot Instances and Savings Plans, and monitoring and performance tuning.

- Learn from real-world case studies to see how businesses have successfully chosen instance types for their specific use cases.

- Keep security and reliability in mind when managing EC2 instances.

By following these best practices and considering the unique requirements of your applications, you can effectively leverage Amazon EC2 instance types to achieve optimal performance, scalability, and cost-efficiency.

Remember, AWS offers a wide range of resources and tools to support you in your instance-type selection journey. Explore the AWS documentation, consult the community, and stay updated with the latest features and offerings.

As you continue to leverage Amazon EC2 and its diverse instance types, may you succeed in building scalable and high-performing applications on the AWS cloud.

Happy instance type selection and happy computing!
